There are two basic approaches to cosmology: start at redshift zero and work outwards in space, or start at the beginning of time and work forward. The latter approach is generally favored by theorists, as much of the physics of the early universe follows a “clean” thermal progression, cooling adiabatically as it expands. The former approach is more typical of observers who start with what we know locally and work outwards in the great tradition of Hubble, Sandage, Tully, and the entire community of extragalactic observers that established the paradigm of the expanding universe and measured its scale. This work had established our current concordance cosmology, ΛCDM, by the mid-90s.*

Both approaches have taught us an enormous amount. Working forward in time, we understand the nucleosynthesis of the light elements in the first few minutes, followed after a few hundred thousand years by the epoch of recombination when the universe transitioned from an ionized plasma to a neutral gas, bequeathing us the cosmic microwave background (CMB) at the phenomenally high redshift of z=1090. Working outwards in redshift, large surveys like Sloan have provided a detailed map of the “local” cosmos, and narrower but much deeper surveys provide a good picture out to z = 1 (when the universe was half its current size, and roughly half its current age) and beyond, with the most distant objects now known above redshift 7, and maybe even at z > 11. JWST will provide a good view of the earliest (z ~ 10?) galaxies when it launches.

This is wonderful progress, but there is a gap from 10 < z < 1000. Not only is it hard to observe objects so distant that z > 10, but at some point they shouldn’t exist. It takes time to form stars and galaxies and the supermassive black holes that fuel quasars, especially when starting from the smooth initial condition seen in the CMB. So how do we probe redshifts z > 10?

It turns out that the universe provides a way. As photons from the CMB traverse the neutral intergalactic medium, they are subject to being absorbed by hydrogen atoms – particularly by the 21cm spin-flip transition. Long anticipated, this signal has recently been detected by the EDGES experiment. I find it amazing that the atomic physics of the early universe allows for this window of observation, and that clever scientists have figured out a way to detect this subtle signal.

So what is going on? First, a mental picture. In the image below, an observer at the left looks out to progressively higher redshift towards the right. The history of the universe unfolds from right to left.

Pritchard & Loeb give a thorough and lucid account of the expected sequence of events. As the early universe expands, it cools. Initially, the thermal photon bath that we now observe as the CMB has enough energy to keep atoms ionized. The mean free path that a photon can travel before interacting with a charged particle in this early plasma is very short: the early universe is opaque like the interior of a thick cloud. At z = 1090, the temperature drops to the point that photons can no longer break protons and electrons apart. This epoch of recombination marks the transition from an opaque plasma to a transparent universe of neutral hydrogen and helium gas. The path length of photons becomes very long; those that we see as the CMB have traversed the length of the cosmos mostly unperturbed.

Immediately after recombination follows the dark ages. Sources of light have yet to appear. There is just neutral gas expanding into the future. This gas is mostly but not completely transparent. As CMB photons propagate through it, they are subject to absorption by the spin-flip transition of hydrogen, a subtle but, in principle, detectable effect: one should see redshifted absorption across the dark ages.

After some time – perhaps a few hundred million years? – the gas has had time to clump up enough to start forming the first structures. This first population of stars ends the dark ages and ushers in cosmic dawn. The photons they release into the vast intergalactic medium (IGM) of neutral gas interact with it and heat it up, ultimately reionizing the entire universe. After this time the IGM is again a plasma, but one so thin (thanks to the expansion of the universe) that it remains transparent. Galaxies assemble and begin the long evolution characterized by the billions of years lived by the stars they contain.

This progression leads to the expectation of 21cm absorption twice: once during the dark ages, and again at cosmic dawn. There are three temperatures we need to keep track of to see how this happens: the radiation temperature Tγ, the kinetic temperature of the gas, Tk, and the spin temperature, TS. The radiation temperature is that of the CMB, and scales as (1+z). The gas temperature is what you normally think of as a temperature, and, once the gas decouples from the radiation, scales approximately as (1+z)². The spin temperature describes the occupation of the quantum levels involved in the 21cm hyperfine transition. If that makes no sense to you, don’t worry: all that matters is that absorption can occur when the spin temperature is less than the radiation temperature. In general, the spin temperature lies between the other two: Tk < TS < Tγ.

The radiation temperature and gas temperature both cool as the universe expands. Initially, the gas remains coupled to the radiation, and these temperatures remain identical until decoupling around z ~ 200. After this, the gas cools faster than the radiation. The radiation temperature is extraordinarily well measured by CMB observations, and is simply Tγ = (2.725 K)(1+z). The gas temperature is more complicated, requiring a numerical solution for the recombination history of a hydrogen-helium gas (which departs from simple Saha equilibrium) together with the thermal coupling of that gas to the radiation. Clever people have written codes to do this, like the widely-used RECFAST. In this way, one can build a table of how both temperatures depend on redshift in any cosmology one cares to specify.
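To make the two temperature histories concrete, here is a deliberately crude sketch. It assumes the gas decouples sharply at a hypothetical `Z_DEC = 200` (the "z ~ 200" above) and afterwards cools adiabatically as a monatomic gas, Tk ∝ (1+z)². The function names and the sharp transition are illustrative assumptions, not how RECFAST works: real decoupling is gradual, so this toy overstates how cold the gas gets by cosmic dawn.

```python
T_CMB0 = 2.725  # K, present-day CMB temperature (value quoted in the text)
Z_DEC = 200.0   # assumed sharp gas-radiation decoupling redshift (a simplification)


def radiation_temperature(z: float) -> float:
    """CMB temperature: T_gamma = T_CMB0 * (1 + z)."""
    return T_CMB0 * (1.0 + z)


def gas_temperature(z: float, z_dec: float = Z_DEC) -> float:
    """Toy kinetic gas temperature.

    Above z_dec the gas is assumed locked to the radiation; below it the gas
    cools adiabatically as a monatomic gas, T_k proportional to (1 + z)^2.
    """
    if z >= z_dec:
        return radiation_temperature(z)
    return radiation_temperature(z_dec) * ((1.0 + z) / (1.0 + z_dec)) ** 2


for z in (1090, 500, 200, 100, 50, 17):
    print(f"z = {z:5d}: T_gamma = {radiation_temperature(z):8.2f} K, "
          f"T_k = {gas_temperature(z):8.2f} K")
```

Below decoupling the gap between the two curves widens steadily, which is what makes absorption possible once the spin temperature is pulled toward Tk.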

This may sound complicated if it is the first time you’ve encountered it, but the physics is wonderfully simple. It’s just the thermal physics of the expanding universe, and the atomic physics of a simple gas composed of hydrogen and helium in known amounts. Different cosmologies specify different expansion histories, but these have only a modest (and calculable) effect on the gas temperature.

Wonderfully, the atomic physics of the 21cm transition is such that it couples to both the radiation and gas temperatures in a way that matters in the early universe. It didn’t have to be that way – most transitions don’t. Perhaps this is fodder for people who worry that the physics of our universe is fine-tuned.

There are two ways in which the spin temperature couples to that of the gas. During the dark ages, the coupling is governed simply by atomic collisions. By cosmic dawn collisions have become rare, but the appearance of the first stars provides UV radiation that drives the Wouthuysen–Field effect. Consequently, we expect to see two absorption troughs: one around z ~ 20 at cosmic dawn, and another at still higher redshift (z ~ 100) during the dark ages.
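The "bounded between Tk and Tγ" statement and the two coupling channels can be seen in the standard expression for the spin temperature found in reviews such as Pritchard & Loeb: 1/TS = (1/Tγ + x_c/Tk + x_α/T_c)/(1 + x_c + x_α), where x_c is the collisional coupling and x_α the Wouthuysen–Field coupling. The sketch below assumes the Lyman-alpha colour temperature T_c equals Tk, and the input temperatures are illustrative values only.

```python
def spin_temperature(t_gamma: float, t_k: float, x_c: float, x_alpha: float) -> float:
    """Spin temperature as a weighted harmonic mean of the radiation and gas temperatures.

    x_c     : collisional coupling coefficient (what matters in the dark ages)
    x_alpha : Wouthuysen-Field coupling from the first stars' UV light (cosmic dawn)
    The Lyman-alpha colour temperature T_c is assumed equal to t_k.
    """
    t_c = t_k  # assumption: colour temperature tracks the gas kinetic temperature
    inv_ts = (1.0 / t_gamma + x_c / t_k + x_alpha / t_c) / (1.0 + x_c + x_alpha)
    return 1.0 / inv_ts


t_gamma, t_k = 49.05, 7.0  # illustrative temperatures in K (assumed, roughly z ~ 17)
for x_alpha in (0.0, 0.1, 1.0, 10.0, 1000.0):
    ts = spin_temperature(t_gamma, t_k, x_c=0.0, x_alpha=x_alpha)
    print(f"x_alpha = {x_alpha:7.1f}: T_S = {ts:6.2f} K, absorption possible: {ts < t_gamma}")
```

With no coupling TS simply equals Tγ and there is no signal; as the coupling grows, TS is dragged down toward Tk, which is the most it can ever do.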

Observation of this signal has the potential to revolutionize cosmology like detailed observations of the CMB did. The CMB is a snapshot of the universe during the narrow window of recombination at z = 1090. In principle, one can make the same sort of observation with the 21cm line, but at each and every redshift where absorption occurs: z = 16, 17, 18, 19 during cosmic dawn and again at z = 50, 100, 150 during the dark ages, with whatever frequency resolution you can muster. It will be like having the CMB over and over and over again, each redshift providing a snapshot of the universe at a different slice in time.
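Each redshift slice corresponds to a different observed frequency, since the line emitted at 1420.405751768 MHz arrives at ν = 1420.4/(1+z) MHz. A sketch of that mapping, plus how much redshift a single spectral channel covers (|dz| = (1+z)² |dν| / ν₂₁). The function names and the 1 MHz channel width are hypothetical choices for illustration.

```python
NU_21CM_MHZ = 1420.405751768  # rest frequency of the hydrogen 21cm hyperfine line, MHz


def observed_frequency_mhz(z):
    """Frequency at which 21cm absorption from redshift z reaches us today: nu_21 / (1 + z)."""
    return NU_21CM_MHZ / (1.0 + z)


def redshift_slice_width(z, channel_mhz):
    """Redshift interval spanned by one spectral channel of width channel_mhz near redshift z.

    From nu = nu_21 / (1 + z), |dz| = (1 + z)**2 * |dnu| / nu_21.
    """
    return (1.0 + z) ** 2 * channel_mhz / NU_21CM_MHZ


for z in (16, 17, 18, 19, 50, 100, 150):
    print(f"z = {z:3d}: observed at {observed_frequency_mhz(z):6.2f} MHz, "
          f"a 1 MHz channel spans dz = {redshift_slice_width(z, 1.0):.2f}")
```

Cosmic dawn (z ≈ 16 to 19) lands at roughly 71 to 84 MHz, while the dark ages (z ≈ 50 to 150) fall at roughly 9 to 28 MHz.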

The information density available from the 21cm signal is in principle quite large. Before we can make use of any of this information, we have to detect it first. Therein lies the rub. This is an incredibly weak signal – we have to be able to detect that the CMB is a little dimmer than it would have been – and we have to do it in the face of much stronger foreground signals from the interstellar medium of our Galaxy and from man-made radio interference here on Earth. Fortunately, though much brighter than the signal we seek, these foregrounds have a different frequency dependence, so in principle it should be possible to separate them from the signal.
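A toy illustration of why a different frequency dependence helps: a smooth foreground thousands of kelvin bright, plus a half-kelvin trough, fit simultaneously by linear least squares. Everything here is hypothetical (the 50 to 100 MHz band, the power-law basis in ν/ν_ref with a −2.5 spectral index, the foreground amplitudes, the Gaussian trough shape and position), and this is not the EDGES pipeline. Because there is no noise and the trough template is assumed known in advance, the fit recovers it essentially exactly; real data offer neither luxury, and a flexible enough foreground model can soak up part of the signal.

```python
import math

NU_REF = 75.0  # MHz, hypothetical reference frequency for the foreground basis


def solve_linear(a, b):
    """Solve the small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares(columns, data):
    """Best-fit coefficients for data ~ sum_k coeff_k * columns[k], via the normal equations."""
    k = len(columns)
    ata = [[sum(p * q for p, q in zip(columns[i], columns[j])) for j in range(k)] for i in range(k)]
    atb = [sum(p * d for p, d in zip(columns[i], data)) for i in range(k)]
    return solve_linear(ata, atb)


# Hypothetical sky: smooth foreground (thousands of K) plus a -0.5 K Gaussian trough at 78 MHz.
nu = [50.0 + 0.25 * i for i in range(201)]
x = [v / NU_REF for v in nu]
foreground = [1750.0 * xi ** -2.5 + 30.0 * xi ** -1.5 for xi in x]
trough = [math.exp(-0.5 * ((v - 78.0) / 6.0) ** 2) for v in nu]
TRUE_AMP = -0.5
sky = [f + TRUE_AMP * t for f, t in zip(foreground, trough)]

# Smooth foreground basis (three power laws in nu) plus the assumed trough template.
basis = [[xi ** (-2.5 + p) for xi in x] for p in range(3)] + [trough]
coeffs = least_squares(basis, sky)
fitted_amp = coeffs[-1]
print(f"peak foreground: {max(foreground):.0f} K, recovered trough amplitude: {fitted_amp:.4f} K")
```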

Saying a thing can be done and doing it are two different things. This is already a long post, so I will refrain from raving about the technical challenges. Let’s just say it’s Real Hard.

Many experimentalists take that as a challenge, and there are a good number of groups working hard to detect the cosmic 21cm signal. EDGES appears to have done it, reporting the detection of the signal at cosmic dawn in February. Here some weasel words are necessary, as the foreground subtraction is a huge challenge, and we always hope to see independent confirmation of a new signal like this. Those words of caution noted, I have to add that I’ve had the chance to read up on their methods, and I’m really impressed. Unlike the BICEP claim to detect primordial gravitational waves that proved to be bogus after being rushed to press release before refereeing, the EDGES team have done all manner of conceivable cross-checks on their instrumentation and analysis. Nor did they rush to publish, despite the importance of the result. In short, I get exactly the opposite vibe from BICEP, whose foreground subtraction was obviously wrong as soon as I laid eyes on the science paper. If EDGES proves to be wrong, it isn’t for want of doing things right. In the meantime, I think we’re obliged to take their result seriously, and not just hope it goes away (which seems to be the first reaction to the impossible).

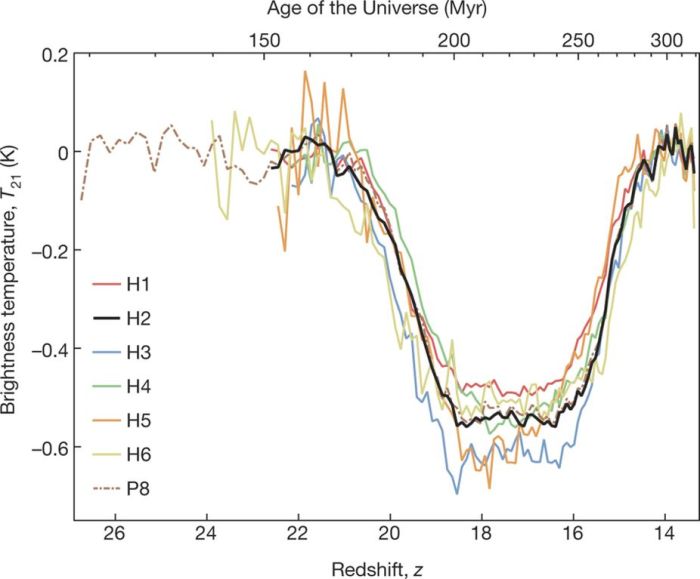

Here is what EDGES saw at cosmic dawn:

The unbelievable aspect of the EDGES observation is that it is too strong. Feeble as this signal is (a telescope brightness decrement of half a kelvin), after subtracting foregrounds a thousand times stronger, it is twice as much as is possible in ΛCDM.

I made a quick evaluation of this, and saw that the observed signal could be achieved if the baryon fraction of the universe was high – basically, if cold dark matter did not exist. I have now had the time to make a more careful calculation, and publish some further predictions. The basic result from before stands: the absorption should be stronger without dark matter than with it.

The reason for this is simple. A universe full of dark matter decelerates rapidly at early times, before the acceleration of the cosmological constant kicks in. Without dark matter, the expansion more nearly coasts. Consequently, the universe is relatively larger from 10 < z < 1000, and the CMB photons have to traverse a larger path length to get here. They have to go about twice as far through the same density of hydrogen absorbers. It’s like putting on a second pair of sunglasses.
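The "second pair of sunglasses" can be made a little more quantitative. With the same baryon density, n_H ∝ (1+z)³ in both cases, and the proper path element is c|dt/dz| dz = c dz/[(1+z)H(z)], so the hydrogen column crossed between two redshifts scales as the integral of (1+z)²/H(z). The sketch below compares a flat Planck-like ΛCDM model with a hypothetical flat baryons-plus-Λ model over 15 < z < 20. The parameter values (including the radiation density) and the no-CDM model are assumptions for illustration, not the specific model from the paper.

```python
OMEGA_R = 9.1e-5  # assumed radiation density today (photons + massless neutrinos)


def e_of_z(z, omega_m, omega_lambda, omega_r=OMEGA_R):
    """E(z) = H(z)/H0 for matter + radiation + Lambda, with curvature making up the rest."""
    omega_k = 1.0 - omega_m - omega_lambda - omega_r
    zp1 = 1.0 + z
    return (omega_r * zp1 ** 4 + omega_m * zp1 ** 3 + omega_k * zp1 ** 2 + omega_lambda) ** 0.5


def hydrogen_column(z_lo, z_hi, omega_m, omega_lambda, steps=10_000):
    """Hydrogen column crossed by a photon travelling from z_hi down to z_lo, arbitrary units.

    dN is proportional to n_H * c|dt/dz| dz, i.e. to (1+z)^2 / E(z) dz; H0 and
    Omega_b h^2 cancel when comparing models. Integrated with the trapezoid rule.
    """
    h = (z_hi - z_lo) / steps
    total = 0.0
    for i in range(steps + 1):
        z = z_lo + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * (1.0 + z) ** 2 / e_of_z(z, omega_m, omega_lambda)
    return total * h


# Assumed parameters: flat LCDM versus a hypothetical flat model with baryons and Lambda only.
OMEGA_B, OMEGA_M_LCDM = 0.049, 0.31
lcdm = hydrogen_column(15.0, 20.0, OMEGA_M_LCDM, 1.0 - OMEGA_M_LCDM - OMEGA_R)
no_cdm = hydrogen_column(15.0, 20.0, OMEGA_B, 1.0 - OMEGA_B - OMEGA_R)
print(f"no-CDM / LCDM hydrogen column over 15 < z < 20: {no_cdm / lcdm:.2f}")
```

With these assumed numbers the ratio comes out around 2.5, essentially √(Ω_m/Ω_b) in the matter-dominated era; an open baryons-only model gives a somewhat smaller factor, so "about twice" is the right order rather than a precise value.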

Quantitatively, the predicted absorption, both with dark matter and without, looks like:

The predicted absorption is consistent with the EDGES observation, within the errors, if there is no dark matter. More importantly, ΛCDM is not consistent with the data, at greater than 95% confidence. At cosmic dawn, I show the maximum possible signal. It could be weaker, depending on the spectra of the UV radiation emitted by the first stars. But it can’t be stronger. Taken at face value, the EDGES result is impossible in ΛCDM. If the observation is corroborated by independent experiments, ΛCDM as we know it will be falsified.
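For a back-of-the-envelope feel for the maximum signal, the commonly quoted approximation (see e.g. the Pritchard & Loeb review cited above) is δT_b ≈ 27 x_HI (1 − Tγ/TS)(Ω_b h²/0.023)[(0.15/Ω_m h²)(1+z)/10]^½ mK, valid in matter domination and ignoring perturbations. The maximum comes from TS = Tk. The sketch assumes Tk ≈ 7 K at z = 17 (a round number roughly matching what recombination codes give for ΛCDM) and holds it fixed in both models, in the spirit of the gas temperature being only modestly cosmology-dependent. All parameter values are assumptions, and this is not the calculation in the paper.

```python
T_CMB0 = 2.725  # K, present-day CMB temperature


def delta_tb_mk(z, t_spin, omega_b_h2, omega_m_h2, x_hi=1.0):
    """Approximate 21cm brightness temperature contrast against the CMB, in mK.

    dT_b ~ 27 x_HI (1 - T_gamma/T_S) (Omega_b h^2 / 0.023)
           * sqrt(0.15 / (Omega_m h^2) * (1 + z) / 10)
    Negative values mean absorption. Assumes matter domination.
    """
    t_gamma = T_CMB0 * (1.0 + z)
    return (27.0 * x_hi * (1.0 - t_gamma / t_spin) * (omega_b_h2 / 0.023)
            * (0.15 / omega_m_h2 * (1.0 + z) / 10.0) ** 0.5)


Z = 17.0
T_K = 7.0             # assumed gas temperature at z ~ 17, used as T_S (maximum coupling)
OMEGA_B_H2 = 0.0224   # assumed baryon density, identical in both models
lcdm_max = delta_tb_mk(Z, T_K, OMEGA_B_H2, omega_m_h2=0.143)        # assumed Planck-like value
no_cdm_max = delta_tb_mk(Z, T_K, OMEGA_B_H2, omega_m_h2=OMEGA_B_H2)  # hypothetical baryons only
print(f"LCDM maximum:   {lcdm_max / 1000:.2f} K")
print(f"No-CDM maximum: {no_cdm_max / 1000:.2f} K (ratio {no_cdm_max / lcdm_max:.2f})")
```

The only difference between the two lines is Ω_m h², which enters through the same 1/H(z) factor as the path-length argument above.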

There have already been many papers trying to avoid this obvious conclusion. If we insist on retaining ΛCDM, the only way to modulate the strength of the signal is to alter the ratio of the radiation temperature to the gas temperature. Either we make the radiation “hotter,” or we make the gas cooler. If we allow ourselves this freedom, we can fit any arbitrary signal strength. This is ad hoc in the way that gives ad hoc a bad name.

We do not have this freedom – not really. The radiation temperature is measured in the CMB with great accuracy. Altering this would mess up the genuine success of ΛCDM in fitting the CMB. One could postulate an additional source, something that appears after recombination but before cosmic dawn to emit enough radio power throughout the cosmos to add to the radio brightness that is being absorbed. There is zero reason to expect such sources (what part of ‘cosmic dawn’ was ambiguous?) and no good way to make them at the right time. If they are primordial (as people love to imagine but are loath to provide viable models for) then they’re also present at recombination: anything powerful enough to have the necessary effect will likely screw up the CMB.

Instead of magically increasing the radiation temperature, we might decrease the gas temperature. This seems no more plausible. The evolution of the gas temperature is a straightforward numerical calculation that has been checked by several independent codes. It has to be right at the time of recombination, or again, we mess up the CMB. The suggestions that I have heard seem mostly to invoke interactions between the gas and dark matter that offload some of the thermal energy of the gas into the invisible sink of the dark matter. Given how shy dark matter has been about interacting with normal matter in the laboratory, it seems pretty rich to imagine that it is eager to do so at high redshift. Even advocates of this scenario recognize its many difficulties.

For those who are interested, I cite a number of the scientific papers that attempt these explanations in my new paper. They all seem like earnest attempts to come to terms with what is apparently impossible. Many of these ideas also strike me as a form of magical thinking that stems from ΛCDM groupthink. After all, ΛCDM is so well established, any unexpected signal must be a sign of exciting new physics (on top of the new physics of dark matter and dark energy) rather than an underlying problem with ΛCDM itself.

The more natural interpretation is that the expansion history of the universe deviates from that predicted by ΛCDM. Simply taking away the dark matter gives a result consistent with the data. Though it did not occur to me to make this specific prediction a priori for an experiment that did not yet exist, all the necessary calculations had been done 15 years ago.

Using the same model, I make a genuine a priori prediction for the dark ages. For the specific NoCDM model I built in 2004, the 21cm absorption in the dark ages should again be about twice as strong as expected in ΛCDM. This seems fairly generic, but I know the model is not complete, so I wouldn’t be upset if it were not bang on.

I would be upset if ΛCDM were not bang on. The only thing that drives the signal in the dark ages is atomic scattering. We understand this really well. ΛCDM is now so well constrained by Planck that, if right, the 21cm absorption during the dark ages must follow the red line in the inset in the figure. The amount of uncertainty is not much greater than the thickness of the line. If ΛCDM fails this test, it would be a clear falsification, and a sign that we need to try something completely different.

Unfortunately, detecting the 21cm absorption signal during the dark ages is even harder than it is at cosmic dawn. At these redshifts (z ~ 100), the 21cm line (1420 MHz on your radio dial) is shifted below the ionospheric cutoff of the Earth’s atmosphere at 30 MHz. Frequencies this low cannot be observed from the ground. Worse, we have made the Earth itself a bright foreground contaminant of radio frequency interference.
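Where exactly the ground-based window closes follows from inverting ν = ν₂₁/(1+z) at the cutoff. A small sketch, taking the 30 MHz figure from the text as a sharp threshold (a simplification: the real ionospheric limit is soft and varies with conditions). The function names are hypothetical.

```python
NU_21CM_MHZ = 1420.405751768  # rest frequency of the 21cm line, MHz
CUTOFF_MHZ = 30.0             # ionospheric cutoff as quoted in the text, treated as sharp


def redshift_at_frequency(nu_mhz):
    """Redshift whose 21cm line is observed today at nu_mhz: 1 + z = nu_21 / nu."""
    return NU_21CM_MHZ / nu_mhz - 1.0


def observable_from_ground(z, cutoff_mhz=CUTOFF_MHZ):
    """True if 21cm absorption from redshift z arrives above the assumed cutoff frequency."""
    return NU_21CM_MHZ / (1.0 + z) > cutoff_mhz


z_cut = redshift_at_frequency(CUTOFF_MHZ)
print(f"21cm absorption from z > {z_cut:.1f} arrives below {CUTOFF_MHZ:.0f} MHz")
for z in (17, 50, 100):
    print(f"z = {z:3d}: observable from the ground? {observable_from_ground(z)}")
```

The cut falls near z ≈ 46, so the expected dark-ages trough around z ~ 100 (observed near 14 MHz) is out of reach from the ground, while the cosmic-dawn trough near z ~ 17 (about 79 MHz) is not.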

Undeterred, there are multiple proposals to measure this signal by placing an antenna in space – in particular, on the far side of the moon, so that the moon shades the instrument from terrestrial radio interference. This is a great idea. The mere detection of the 21cm signal from the dark ages would be an accomplishment on par with the original detection of the CMB. It appears that it might also provide a decisive new way of testing our cosmological model.

There are further tests involving the shape of the 21cm signal, its power spectrum (analogous to the power spectrum of the CMB), how structure grows in the early ages of the universe, and how massive the neutrino is. But that’s enough for now.

Most likely beer. Or a cosmo. That’d be appropriate. I make a good pomegranate cosmo.

*Note that a variety of astronomical observations had established the concordance cosmology before Type Ia supernovae detected cosmic acceleration and well-resolved observations of the CMB found a flat cosmic geometry.

Of course, the CMB in LCDM is used to measure not just the radiation temperature but also the DM fraction based upon the 2:3 peak ratio. So, you’re damned if you do and damned if you don’t in the CDM paradigm. No CDM is inconsistent with the third-peak measurement, while 21cm is consistent with no CDM and inconsistent with a meaningful amount of CDM.

You can come up with relativistic modified gravity theories that can be tuned to produce the 2:3 peak ratio in the CMB that is observed (as you are well aware TeVeS doesn’t do that, but MOG claims to and apparently it can be done with f(R) theories as well). But, it is a little bit unclear to me if a modified gravity theory that did fit the CMB, as opposed to a simpler no CDM paradigm, could also fit the 21cm result.

As you note, the radiation temperature is well calibrated by the CMB, so that leaves gas temperature and spin temperature as moving parts. My intuition is that modified gravity wouldn’t be irrelevant to gas temperature, but maybe the gravitational fields are too strong in the 10 < z < 1000 era to have much of a notable modification effect quantitatively. And, I don't really have an intuition about what drives spin temperature.

To the extent that the temperature moderating effects of CDM are a thermal property of the particles distinct from their kinetic properties, I could see how they would be independent of the effects that MG gives rise to, but again, this feels like fuzzy thinking.

The 21cm issue also seems related somehow (if nothing else because it pertains to the same general part of the timeline) with evidence of early structure formation.

Examples include the impossible early galaxy problem, the problem of black holes that get too big too early, and the problem of metal-poor structures identified in Peter Creasey, et al., "Globular Clusters Formed within Dark Halos I: present-day abundance, distribution and kinematics" (June 28, 2018). It seems that modified gravity theories, in general, tend to give rise to structure in the early universe sooner than CDM models do, providing more, correlated evidence of a problem with the LCDM model's timeline of the early universe that MG can solve with its faster early timeline.

Exactly so. Well put.

A pleasure to read and a true boomer if the signal is indeed confirmed. Great thing that this experiment can be repeated quite easily.

Very informative and well written blog post. It would be a great irony of history if future experiments on the dark side of the moon finally put paid to cold dark matter as a scientific hypothesis. Given that the two approaches to astronomy you refer to at the beginning of your post are already leading to a serious discrepancy in the measurement and calculation of the Hubble constant (as you have discussed in a previous post), do you think this new experimental frontier in astronomy/cosmology is likely to have any bearing on this problem? Also, does this new frontier potentially lead to experimental bounds on the conjectured existence of hotter “dark matter”, such as sterile neutrinos, just as it seems to do in the case of cold dark matter?

I certainly hope this new frontier has a bearing on these problems… as an empiricist, I view the 21cm absorption troughs as a constraint on the expansion history H(z) of the universe. Perhaps what we have now is a good but incomplete approximation to whatever the truth is. If so, then one indication of that could be a redshift-dependent departure from what the expansion history must be in LCDM. Bear in mind that LCDM itself is a departure from what the expansion history “had” to be (decelerating with q > 0), so it is already something of a strained approximation in this direction. So far a sufficient one. My hope is that IF LCDM is just an approximation and not the final answer, then departures from it will become large enough to be obvious. It is not obvious to me that this is ever going to be possible, since dark energy models with variable equations of state can be fit to pretty much any arbitrary distance-redshift relation.

As for hotter dark matter, I suppose any hot DM components must leave a mark in the 21cm power spectrum. I imagine this to be suppressed relative to LCDM at decoupling (z~200), but in modified gravity scenarios, structure forms fast and there would be more power by the end of the dark ages (z~30). Exactly how this plays out depends sensitively on the existence and mass of any hot DM, like a massive neutrino.

One thing I did not get as far as discussing was that the latest Planck constraint on the sum of neutrino masses is now tiny, 0.06 eV. That’s a narrow window. The experimental limits remain more lenient (< 2 eV, though I've heard it argued that they may be down to 0.12 eV); a laboratory detection of a mass above the Planck limit would falsify LCDM. The specific model I used in the paper has a neutrino mass of 1.1 eV: that isn’t relevant to the 21cm signal, but it is important for the CMB, insofar as the model fits it (L < 600 only).

Stacy,

You deserve a tremendous amount of credit for your work, critical thinking and communication skills. You are inspiring others and systematically building the evidence to change our understanding of the universe. Thank you!

The more evidence that you cite, the more convinced I become that the Equivalence Principle is not “approximately true for small increments of space and time”, as any good physicist will tell you – but that it is Fundamentally True.

Arguably this leads to the interpretation that space is only isotropic, has positive curvature and that there may be a black hole at the center of the universe (z approaches infinity).

However, if you start with the understanding that the Equivalence Principle is fundamentally true, we may find that theory and observational evidence converges.

Holding the EP to be a fundamental truth would possibly constrain the dark matter and dark energy to be a boundary condition of a closed universe. It’s kind of like a cosmic peace accord.

According to the abstract of the EDGES paper: “…only cooling of the gas as a result of interactions between dark matter and baryons seems to explain the observed amplitude.”

This seems to be the opposite of what you are saying: “The predicted absorption is consistent with the EDGES observation, within the errors, if there is no dark matter.” Is there a real disagreement here or am I missing something?

Also, the graphic of the history of the universe presented entails a universal reference frame. Does that inconsistency with Relativity Theory bother you, and if not, why not?

I believe Dr. McGaugh already addressed this here: “The suggestions that I have heard seem mostly to invoke interactions between the gas and dark matter that offload some of the thermal energy of the gas into the invisible sink of the dark matter. Given how shy dark matter has been about interacting with normal matter in the laboratory, it seems pretty rich to imagine that it is eager to do so at high redshift. Even advocates of this scenario recognize its many difficulties.”

Right. The statement that some have made, various assertions to the effect that “only cooling of the gas as a result of interactions between dark matter and baryons seems to explain the observed amplitude” is the result of not considering all the possibilities. To me, the most obvious possibility is that the expansion history differs as I describe – this is natural if the universe contains no dark matter. To most cosmologists, LCDM is established beyond a reasonable doubt, so it simply never occurred to them to consider this option. Indeed, I just heard a talk on this recently in which the speaker very reasonably reviewed all the possibilities – to do with decreasing the gas temperature or increasing the radiation temperature. Neither the baryon fraction nor the expansion history was mentioned. These are known, Khaleesi.

I found this post quite interesting and definitely something that I should follow up also from other sources. However, there is something that puzzles me in your assumptions: “and the CMB photons have to traverse a larger path length to get here. They have to go about twice as far through the same density of hydrogen absorbers”.

I can understand why the photons have to go about twice as far, but what puzzles me is that you assume the same density of hydrogen. Since nucleosynthesis is already over, any expansion of the universe would only decrease that density. As a matter of fact, since we’re talking about a fixed amount of baryons, the number of interactions between the CMB photons and hydrogen should be independent of the size of the universe, the only difference being the length of the mean path between interactions which should increase by the same factor as the size of the universe increases.

Can you please elaborate a bit more about this assumption?

The density of hydrogen (Omega_b*h^2) is the same in both cases, and of course decreases as the universe expands. But the quantitative way in which this happens differs if the expansion history is different.

Could the third peak of the CMB be explained as the sum total of all the standard model neutrino masses (or primordial black holes), thus also explaining the EDGES baryon-only universe as you describe in your paper?

Would dark matter consisting entirely of either standard model neutrinos or primordial black holes be consistent with your baryon-only interpretation of EDGES?

No, I don’t think so. You would need such a large density of black holes to have the “right” effect in the CMB that we’d still basically have LCDM, just with black hole dark matter instead of WIMPs.

If this seems like it does not compute, you are correct. I do not see how it is currently possible to reconcile every aspect of the data. We seem to be missing something fundamental.

What is missing is any inclination on the part of the theoretical physics community to reconsider the century old assumptions that underlie their floundering cosmological model. Until that happens cosmology will remain a realm of idle conjectures constantly in need of manipulation to attain agreement with empirical observations.

Despite your sound scientific efforts, if you only consider your results in the context of the empirically challenged expanding universe model you will have a fitting problem. What is fundamentally missing in modern cosmology is the ability or willingness of the theoretical physics community to reconsider its empirically baseless assumptions.

As it stands we don’t have a scientific model of the cosmos but a mythological model of a “universe” that exists only in the human imaginations of certain reality challenged theorists (and their followers) for whom empiricism is an afterthought.

Hi, yes, that doesn’t seem to compute. What about having enough standard model neutrinos? Whatever the mass of neutrinos, if you have enough of them, what matters is the sum total of their masses.

Could neutrinos explain both the third peak of the CMB and the 21cm absorption?

I hope I don’t seem too annoying, but I would like to get some feedback on the idea below. I just feel it may help to resolve some of the major problems in cosmology if more people can bring their expertise to bear on it.

If dark matter and dark energy are required to explain the observed accelerations of distant objects, and this dark matter cannot be detected by any experiment nor dark energy otherwise accounted for, then is it not likely that these are the components of fictitious forces?

If the Equivalence Principle is fundamentally true, we can explain these accelerations with a choice of mass responsible for creating an equivalent gravitational field in an inertial reference frame. If this mass too cannot be detected by experiment, we could place it at the boundary of our closed universe. The dark energy and dark matter then effectively set the boundary conditions for the universe.

This alone leads to the understanding that there may be a black hole at the center of our universe, if by center we choose the space where z goes to infinity (here z is the “cosmic redshift” or by equivalence, the gravitational redshift due to the cosmic black hole). To picture this I imagine that space must be only isotropic and have a positive curvature.

Picture leaving your fellow observer (on Earth let’s say – but it could be anywhere) and travelling in a spaceship in any direction. In the absence of intervening fields you follow a curved geodesic that eventually leads back toward a Cosmic Black Hole. No matter what direction you chose, your fellow observer would begin to see your transmission become progressively redshifted as you moved towards the black hole. But since neither of you knew about the CBH, you might conclude that space was expanding. Perhaps both are valid explanations, but one has virtual dark energy, and the other a CBH.

Perhaps your CBH is related to the primordial BH people sometimes worry about. The center in 4D is the origin of time. As we look out in redshift, your CBH would be everywhere on the sky around us, at the beginning of time.

Maybe the CBH model is the static gravitational model in an inertial reference frame which we observe from an accelerated reference frame. Then the question is are we (and our observable universe) in orbit around this black hole?

“Explaining” one phenomenon we haven’t been able to observe (DM) with another phenomenon we can’t observe (CBH) does not seem a good idea to me.

I think we’ll be better off to not try to “explain” this yet but just keep gathering data; we are more likely to find the answer that way. What is the true magnitude and details of the discrepancy that suggested DM in the first place? It seems every month there’s a new refinement.

Sometimes theories lead to discoveries; sometimes discoveries lead to theories.

sean s.

Sean,

I understand that it really isn’t a simplification of the problem. Quite the opposite. The overall idea is that by developing a dualistic description of these cosmological phenomena, we might eventually be able to better understand not only the nature of dark matter and dark energy, but also possibly why we have the vacuum catastrophe problem and the arrow of time. That is, if it is possible for someone to find this kind of solution.

A “dualistic description” that depends on unobservable phenomena is more likely to muddy the waters than lead to better understanding. What we need now is more or better data on which we can base better understanding.

sean s.

@sean samis “What is the true magnitude and details of the discrepancy that suggested DM in the first place?”

Zwicky had predicted as early as 1933 that there were huge amounts of DM in the clusters. And he was right: what was invisible in his day became gradually observable to our instruments!

Perhaps your question more specifically concerns the non-baryonic DM, ie the Cold DM (… or Collisionless DM ??), the “WIMPs miracle”, etc.?

Funny story, historically. Zwicky found a discrepancy that was over a factor of 100 in clusters of galaxies in the ’30s and was mostly ignored at the time. Oort found a factor of 2 discrepancy in the disk of the Milky Way and was taken very seriously. Flash forward, and the Oort discrepancy has mostly gone away (exactly what is meant by that is somewhat subtle) while the discrepancy of Zwicky has clarified.

However, the amplitude of the discrepancy in clusters is much smaller now. The ~100 Zwicky found was large in part because most of the normal matter was in hot gas in the intracluster medium. It got lumped in with the rest of the dunkel matter. Recognizing and separating out this component, the conventional mass discrepancy in clusters is a factor of ~6. Still lots more dark matter than normal matter, but nothing like a factor of one hundred.

Since it happened once that there was unseen but normal mass as well as the still-mysterious dark matter, we should be careful now about the nature of what is left over. There is room in the baryonic mass budget for there to be more normal matter in clusters (there is an earlier post about this), so it is conceivable that what happened before might happen again. That is to say, it is not inconceivable that what we call the dark matter in clusters now is composed of multiple components – more dark baryons, neutrinos if they’re massive enough, and whatever the “primary” dark matter really is.

Well, if “Dunkle Materie” was defined in opposition to “visible matter,” if the matter visible to Zwicky was only the stellar mass, and if normal matter is 1/6 of the total mass, then, since the stellar mass is only ~7% of the normal matter, Zwicky was perfectly right in the 1930s against all those “spherical bastards”!
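
The arithmetic behind this can be checked with a short sketch. It takes the round numbers quoted in this thread as assumptions (normal matter ≈ 1/6 of the total mass, stars ≈ 7% of the normal matter), and it additionally assumes for illustration that normal matter is just stars plus hot gas. The function name `discrepancies` is hypothetical.

```python
# Round numbers quoted in this thread, taken as assumptions:
NORMAL_FRACTION = 1 / 6           # normal (baryonic) matter / total cluster mass
STARS_FRACTION_OF_NORMAL = 0.07   # stellar mass / normal matter


def discrepancies(normal_fraction: float, stars_of_normal: float) -> dict:
    """Hypothetical helper: mass discrepancy measured against different 'visible' baselines."""
    stellar_fraction = normal_fraction * stars_of_normal  # stars / total mass
    return {
        # total / all normal matter: the modern "factor of ~6"
        "total_to_normal": 1 / normal_fraction,
        # total / stars only: what one infers if the hot gas is missed
        "total_to_stellar": 1 / stellar_fraction,
        # hot gas / stars, assuming (for illustration) normal matter = stars + gas
        "gas_to_stellar": (1 - stars_of_normal) / stars_of_normal,
    }


for name, value in discrepancies(NORMAL_FRACTION, STARS_FRACTION_OF_NORMAL).items():
    print(f"{name}: {value:.1f}")
```

With these inputs, the stars-only ratio comes out near 86, comparable to the ~100 Zwicky found. Counting the gas brings it down to 6, and the gas outweighs the stars by roughly a factor of 13.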

Right. By that standard, Milgrom was right in 1983 when he ascribed the residual mass discrepancy MOND suffers in clusters to the then newly detected X-ray gas. That seemed a stretch at the time, but it partly came true: the ICM outweighs the stars. Just not by enough in MOND with current numbers. Not sure what it would be when scaled to 1930s distances, because who cares.

Zwicky was correct that there was a discrepancy in clusters, but the amplitude of that discrepancy was – through no fault of his – badly misleading. This fallacy was repeated well into the modern day by plots illustrating that M/L goes up and up on larger and larger scales, implying that there was more and more [presumptively non-baryonic] dark matter (e.g., Fig. 2 of http://adsabs.harvard.edu/abs/1993PhR…227…13S or Fig. 1 of https://arxiv.org/abs/astro-ph/9711062). That was misleading: once all the known baryons were accounted for, the trend with scale vanishes (e.g., Fig. 16 of https://xxx.lanl.gov/abs/astro-ph/9801123). If anything, nowadays it is the smallest things that exhibit the largest discrepancies.

“[presumptively non-baryonic]”: In the smallest things, I think some DM is guilty (the usual suspects…), but I am convinced that the non-baryonic matter is almost totally innocent (its contribution is ridiculously small). But those who ACCUSE non-baryonic matter do not even bother to make the distinction. So it is really hard to understand much of this whole (hi)story.

Compare

and

https://youtu.be/bAs9wYHmf7g?t=196 !!

(I mean: at 29′, Peebles says that Faber, S. M. & Gallagher, J. S. (1979), “Masses and mass-to-light ratios of galaxies” (http://adsbit.harvard.edu//full/1979ARA%26A..17..135F/0000183.000.html), “was largely responsible for persuading the astronomy community that dark matter…”)

Yes and yes… Faber & Gallagher was a pivotal paper in the development of the paradigm. But we as a community have often been sloppy about what we mean by “dark matter.”

Every five years for the past thirty years, I’ve heard a talk from some Impressive Person who asserted with great confidence that in “five years we’ll know what the dark matter is.” Not only has this not come true, but in retrospect, it is clear that the speaker already Knew what the answer was: WIMPs composed of the lightest supersymmetric particles. That’s always what was meant even though it was usually left unspecified. No need – It is Known, Khaleesi.

Zwicky, it seems, had the key to the missing mass question in his 1937 paper, “On the Masses of Nebulae and of Clusters of Nebulae.” The role of internal (gravitational) viscosity in the behavior of galaxies appears not to be properly accounted for in modern models of galactic dynamics.

Link: nph-iarticle_query

Can you please check the link?

“Requested scanned pages are not available” – this is what I got.

Thank you.

The problem comes only from the runs of dots, so you need to delete and retype them:

ApJ….86..217
