The distance scale is fundamental to cosmology. “How big is the universe?” is pretty much the first question we ask when we look at the Big Picture.

The primary yardstick we use to describe the scale of the universe is Hubble’s constant: the H0 in

v = H0 D

that relates the recession velocity (redshift) of a galaxy to its distance. More generally, this is the current expansion rate of the universe. Pick up any book on cosmology and you will find a lengthy disquisition on the importance of this fundamental parameter that encapsulates the size, age, critical density, and potential fate of the cosmos. It is the first of the Big Two numbers in cosmology that expresses the still-amazing fact that the entire universe is expanding.
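As a rough illustration of what this one number sets, here is a minimal Python sketch. It assumes the low-redshift approximation v ≈ cz and ignores peculiar velocities; the function names are illustrative, not from any particular package. It converts a redshift to a velocity, a velocity to a distance with D = v/H0, and H0 to the Hubble time 1/H0, which is a characteristic age scale rather than the exact age (that also depends on the expansion history).

```python
# Minimal sketch of Hubble's law, v = H0 * D, for nearby galaxies (z << 1).
# Assumptions: v ~ c*z (valid only at low redshift), no peculiar velocities.

C_KMS = 299_792.458                 # speed of light in km/s
KM_PER_MPC = 3.0856775814913673e19  # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16        # Julian year in seconds times 1e9


def velocity_from_redshift(z):
    """Recession velocity in km/s in the low-redshift approximation v = c*z."""
    return C_KMS * z


def distance_mpc(velocity_kms, h0):
    """Distance in Mpc implied by Hubble's law for H0 in km/s/Mpc."""
    return velocity_kms / h0


def hubble_time_gyr(h0):
    """Hubble time 1/H0 in Gyr: a characteristic age scale, not the exact age."""
    h0_per_second = h0 / KM_PER_MPC  # convert km/s/Mpc to 1/s
    return 1.0 / h0_per_second / SECONDS_PER_GYR


for h0 in (50.0, 67.4, 75.1):
    print(f"H0 = {h0:5.1f} km/s/Mpc -> 1/H0 = {hubble_time_gyr(h0):5.2f} Gyr, "
          f"galaxy at cz = 7000 km/s lies at {distance_mpc(7000.0, h0):6.1f} Mpc")
```

For H0 = 50, 67.4 and 75.1 km/s/Mpc the Hubble time comes out at roughly 19.6, 14.5 and 13.0 Gyr respectively.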

Quantifying the distance scale is hard. Throughout my career, I have avoided working on it. There are quite enough, er, personalities on the case already.

No need for me to add to the madness.

Not that I couldn’t. The Tully-Fisher relation has long been used as a distance indicator. It played an important role in breaking the stranglehold that H0 = 50 km/s/Mpc had on the minds of cosmologists, including myself. Tully & Fisher (1977) found that it was approximately 80 km/s/Mpc. Their method continues to provide strong constraints to this day: Kourkchi et al. find H0 = 76.0 ± 1.1 (stat) ± 2.3 (sys) km/s/Mpc. So I’ve been happy to stay out of it.

Until now.

I am motivated in part by the calibration opportunity provided by gas-rich galaxies, in part by the fact that the tension between independent approaches to constraining the Hubble constant only seems to be getting worse, and in part by a recent conference experience. (Remember when we traveled?) Less than a year ago, I was at a cosmology conference in which I heard an all-too-typical talk that asserted that the Planck H0 = 67.4 ± 0.5 km/s/Mpc had to be correct and everybody who got something different was a stupid-head. I’ve seen this movie before. It is the same community (often the very same people) who once insisted that H0 had to be 50, dammit. They’re every bit as overconfident as before, suffering just as much from confirmation bias (LCDM! LCDM! LCDM!), and seem every bit as likely to be correct this time around.

So, is it true? We have the data, we’ve just refrained from using it in this particular way because other people were on the case. Let’s check.

The big hassle here is not measuring H0 so much as quantifying the uncertainties. That’s the part that’s really hard. So all credit goes to Jim Schombert, who rolled up his proverbial sleeves and did all the hard work. Federico Lelli and I mostly just played the mother-of-all-jerks referees (I’ve had plenty of role models) by asking about every annoying detail. To make a very long story short, none of the items under our control matter at a level we care about, each making < 1 km/s/Mpc difference to the final answer.

In principle, the Baryonic Tully-Fisher relation (BTFR) improves on the usual luminosity-based version by including the gas, which extends application of the relation to lower-mass galaxies that can be quite gas-rich. Ignoring this component results in a mess that can only be avoided by restricting attention to bright galaxies. But including it introduces an extra parameter. One has to adopt a stellar mass-to-light ratio to put the stars and the gas on the same footing. I always figured that would make things worse – and for a long time, it did. That is no longer the case. So long as we treat the calibration sample that defines the BTFR and the sample used to measure the Hubble constant self-consistently, plausible choices for the mass-to-light ratio return the same answer for H0. It’s all relative – the calibration changes with different choices, but the application to more distant galaxies changes in the same way. Same for the treatment of molecular gas and metallicity. It all comes out in the wash. Our relative distance scale is very precise. Putting an absolute number on it simply requires a lot of calibrating galaxies with accurate, independently measured distances.
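Here is a minimal sketch of what putting the stars and the gas on the same footing involves. The assumptions are illustrative, not necessarily the exact choices made for the paper: a near-IR stellar mass-to-light ratio `upsilon` (0.5 Msun/Lsun by default here), the standard optically thin relation M_HI = 2.356×10⁵ D² S_HI (D in Mpc, S_HI in Jy km/s), a factor 1.33 to account for helium, and no molecular gas. The function names are hypothetical.

```python
# Sketch of a baryonic mass estimate: stars + gas on a common footing.
# Assumptions (illustrative, not the paper's exact choices):
#   * stellar mass = upsilon * near-IR luminosity, upsilon in Msun/Lsun
#   * M_HI = 2.356e5 * D**2 * S_HI  (D in Mpc, S_HI in Jy km/s, optically thin HI)
#   * total atomic gas = 1.33 * M_HI to account for helium; molecular gas ignored

HI_MASS_COEFF = 2.356e5
HELIUM_FACTOR = 1.33


def baryonic_mass(lum_at_1mpc, hi_flux_jykms, distance_mpc, upsilon=0.5):
    """Baryonic mass (Msun) of a galaxy placed at distance_mpc.

    lum_at_1mpc is the near-IR luminosity (Lsun) the galaxy would have if it
    were at 1 Mpc, i.e. its observed flux expressed in luminosity units.
    """
    d2 = distance_mpc ** 2  # both components scale as D**2
    m_star = upsilon * lum_at_1mpc * d2
    m_gas = HELIUM_FACTOR * HI_MASS_COEFF * hi_flux_jykms * d2
    return m_star + m_gas


def gas_fraction(lum_at_1mpc, hi_flux_jykms, upsilon=0.5):
    """Fraction of baryons in gas; independent of distance because D**2 cancels."""
    m_gas = HELIUM_FACTOR * HI_MASS_COEFF * hi_flux_jykms
    return m_gas / (upsilon * lum_at_1mpc + m_gas)
```

Every term carries a factor D², so distance enters only as an overall scaling and the gas fraction does not depend on it at all. The mass-to-light ratio only changes the stellar share, which is why it matters least for the gas-rich galaxies.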

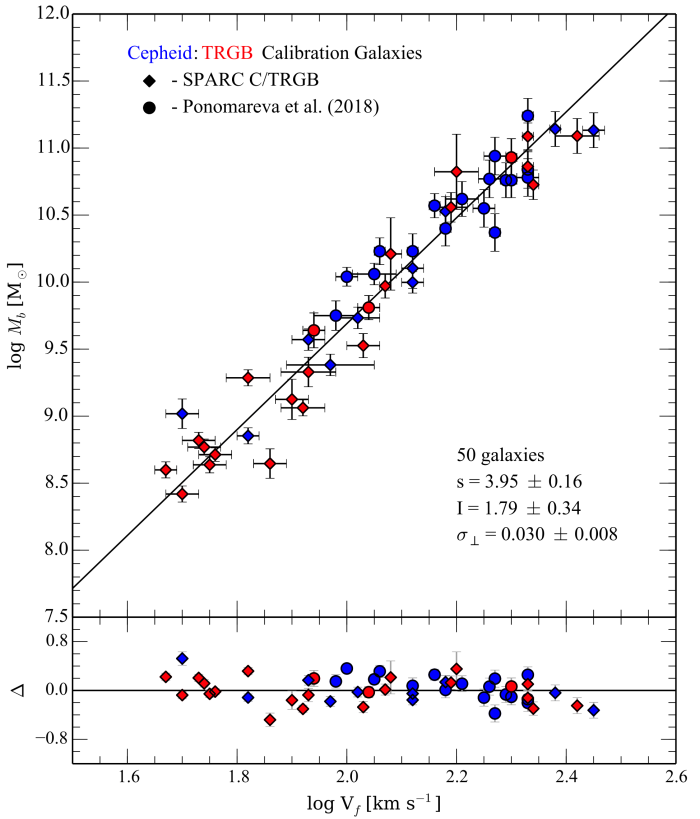

Here is the absolute calibration of the BTFR that we obtain:

In constructing this calibrated BTFR, we have relied on distance measurements made or compiled by the Extragalactic Distance Database, which represents the cumulative efforts of Tully and many others to map out the local universe in great detail. We have also benefited from the work of Ponomareva et al., which provides new calibrator galaxies not already in our SPARC sample. Critically, they also measure the flat velocity from rotation curves. This is a huge improvement in accuracy over the more readily available linewidths commonly employed in Tully-Fisher work, but rotation curves are expensive to obtain, so they remain the primary observational limitation on this procedure.
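For readers curious how a flat velocity might be extracted from a rotation curve, and why a linewidth is a cruder proxy, here is a minimal sketch. The flatness criterion (the outermost points agree with their mean to within 5 percent, using at least three points) is an assumption for illustration, not the exact definition used in SPARC or by Ponomareva et al. The linewidth conversion W/(2 sin i) is the simplest textbook form and skips the usual corrections. Both function names are hypothetical.

```python
import math


def flat_velocity(v_obs, tol=0.05, min_points=3):
    """Mean of the outermost rotation-curve points that agree to within tol.

    v_obs: rotation velocities (km/s) ordered by increasing radius.
    Starts with the outer min_points and extends inward while every point in
    the outer set stays within tol (fractional) of that set's mean.
    Returns None if even the outer min_points are not flat (curve still rising).
    """
    best = None
    n = min_points
    while n <= len(v_obs):
        outer = v_obs[-n:]
        mean = sum(outer) / n
        if all(abs(v - mean) <= tol * mean for v in outer):
            best = mean
            n += 1
        else:
            break
    return best


def velocity_from_linewidth(w50_kms, inclination_deg):
    """Crude rotation speed from an HI linewidth, W / (2 sin i).

    Ignores turbulent broadening and other corrections, and cannot tell
    whether the rotation curve has actually reached its flat part.
    """
    return w50_kms / (2.0 * math.sin(math.radians(inclination_deg)))
```

For example, `flat_velocity([50, 100, 150, 198, 200, 202])` returns 200.0, while a curve that is still rising, such as `[10, 20, 30, 40]`, returns None: exactly the case a single linewidth cannot flag.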

Still, we’re in pretty good shape. We now have 50 galaxies with well measured distances as well as the necessary ingredients to construct the BTFR: extended, resolved rotation curves, HI fluxes to measure the gas mass, and Spitzer near-IR data to estimate the stellar mass. This is a huge sample for which to have all of these data simultaneously. Measuring distances to individual galaxies remains challenging and time-consuming hard work that has been done by others. We are not about to second-guess their results, but we can note that they are sensible and remarkably consistent.

There are two primary methods by which the distances we use have been measured. One is Cepheids – the same type of variable stars that Hubble used to measure the distance to spiral nebulae to demonstrate their extragalactic nature. The other is the tip of the red giant branch (TRGB) method, which takes advantage of the brightest red giants having nearly the same luminosity. The sample is split nearly 50/50: there are 27 galaxies with a Cepheid distance measurement, and 23 with the TRGB. The two methods (different colored points in the figure) give the same calibration, within the errors, as do the two samples (circles vs. diamonds). There have been plenty of mistakes in the distance scale historically, so this consistency is important. There are many places where things could go wrong: differences between ourselves and Ponomareva, differences between Cepheids and the TRGB as distance indicators, mistakes in the application of either method to individual galaxies… so many opportunities to go wrong, and yet everything is consistent.

Having followed the distance scale problem my entire career, I cannot express how deeply impressive it is that all these different measurements paint a consistent picture. This is a credit to a large community of astronomers who have worked diligently on this problem for what seems like aeons. There is a temptation to dismiss distance scale work on the grounds that it has been wrong in the past, so it can be wrong again. Of course that is true, but it is also true that matters have improved considerably. Forty years ago, it was not surprising when a distance indicator turned out to be wrong, and distances changed by a factor of two. That stopped twenty years ago, thanks in large part to the Hubble Space Telescope, a key goal of which had been to nail down the distance scale. That mission seems largely to have been accomplished, with small differences persisting only at the level that one expects from experimental error. One cannot, for example, make a change to the Cepheid calibration without creating a tension with the TRGB data, or vice versa: both have to change in concert by the same amount in the same direction. That is unlikely to the point of wishful thinking.

Having nailed down the absolute calibration of the BTFR for galaxies with well-measured distances, we can apply it to other galaxies for which we know the redshift but not the distance. There are nearly 100 suitable galaxies available in the SPARC database. Consistency between them and the calibrator galaxies requires

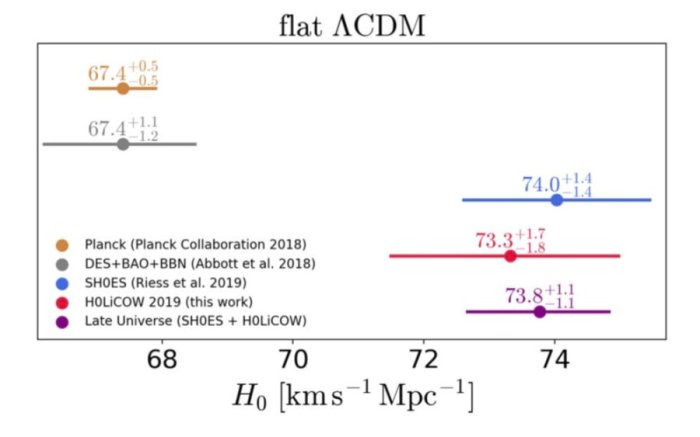

H0 = 75.1 ± 2.3 (stat) ± 1.5 (sys) km/s/Mpc.

This is consistent with the result for the standard luminosity-linewidth version of the Tully-Fisher relation reported by Kourkchi et al. Note also that our statistical (random/experimental) error is larger, because we have a much smaller number of galaxies, but our systematic error is smaller. The method is, in principle, more precise (mostly because rotation curves are more accurate than linewidths), so there is still a lot to be gained by collecting more data.
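The logic of the measurement can be sketched in a few lines of Python. This is a toy version under stated assumptions: a hypothetical BTFR with slope 4 and an arbitrary zero point (not the calibration in the figure), noiseless synthetic galaxies, and a simple least-squares slope through the origin for v = H0 D. The real analysis involves error propagation and calibrator selection that this ignores, and all names here are illustrative.

```python
import math

# Hypothetical BTFR: log10(Mb/Msun) = SLOPE * log10(Vf / [km/s]) + ZERO_POINT.
# Illustrative numbers only; in practice they come from the calibrator galaxies.
SLOPE = 4.0
ZERO_POINT = 2.0


def btfr_mass(vf_kms):
    """Baryonic mass (Msun) the assumed BTFR predicts for flat velocity vf_kms."""
    return 10.0 ** (SLOPE * math.log10(vf_kms) + ZERO_POINT)


def btfr_distance(vf_kms, mass_at_1mpc):
    """Distance (Mpc) where the flux-based mass equals the BTFR prediction.

    Flux-based masses scale as D**2, so solve mass_at_1mpc * D**2 = btfr_mass(vf).
    """
    return math.sqrt(btfr_mass(vf_kms) / mass_at_1mpc)


def fit_h0(velocities_kms, distances_mpc):
    """Least-squares slope of v = H0 * D for a line forced through the origin."""
    num = sum(v * d for v, d in zip(velocities_kms, distances_mpc))
    den = sum(d * d for d in distances_mpc)
    return num / den


# Toy check: build noiseless galaxies in a universe with H0 = 75 and recover it.
true_h0 = 75.0
flat_velocities = [90.0, 140.0, 210.0]
true_distances = [20.0, 55.0, 90.0]
recession = [true_h0 * d for d in true_distances]
masses_at_1mpc = [btfr_mass(vf) / d ** 2
                  for vf, d in zip(flat_velocities, true_distances)]

distances = [btfr_distance(vf, m) for vf, m in zip(flat_velocities, masses_at_1mpc)]
print(f"recovered H0 = {fit_h0(recession, distances):.2f} km/s/Mpc")
```

Shifting `ZERO_POINT` by Δ multiplies every BTFR distance by 10^(Δ/2) and so divides the fitted H0 by the same factor, which is why the absolute calibration against Cepheid and TRGB distances is what pins down the number.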

Our measurement is also consistent with many other “local” measurements of the distance scale, but not with “global” measurements. See the nice discussion by Telescoper and the paper from which it comes. A Hubble constant in the 70s is the answer that we’ve consistently gotten for the past 20 years by a wide variety of distinct methods, including direct measurements that are not dependent on lower rungs of the distance ladder, like gravitational lensing and megamasers. These are repeatable experiments. In contrast, as I’ve pointed out before, it is the “global” CMB-fitted value of the Hubble parameter that has steadily diverged from the concordance region that originally established LCDM.

So, where does this leave us? In the past, it was easy to dismiss a tension of this sort as due to some systematic error, because that happened all the time – in the 20th century. That’s not so true anymore. It looks to me like the tension is real.
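To put a rough number on the size of the discrepancy, here is a back-of-the-envelope sketch. It adds the quoted statistical and systematic errors in quadrature and treats the two measurements as independent Gaussians; those are simplifying assumptions, not necessarily how either team would quantify it, and a single-pair comparison like this ignores the agreement among the many local methods discussed above.

```python
import math


def combined_error(*errors):
    """Add independent 1-sigma errors in quadrature."""
    return math.sqrt(sum(e * e for e in errors))


def tension_sigma(value_a, err_a, value_b, err_b):
    """Difference between two measurements in units of their combined error."""
    return abs(value_a - value_b) / combined_error(err_a, err_b)


# Values quoted in the text (km/s/Mpc).
btfr_h0, btfr_err = 75.1, combined_error(2.3, 1.5)  # stat and sys in quadrature
planck_h0, planck_err = 67.4, 0.5

print(f"BTFR: {btfr_h0} +/- {btfr_err:.2f} km/s/Mpc")
print(f"difference from Planck: "
      f"{tension_sigma(btfr_h0, btfr_err, planck_h0, planck_err):.1f} sigma")
```

With these inputs the combined BTFR error is about 2.75 km/s/Mpc and the difference from the Planck value is roughly 2.8σ for this one measurement on its own.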

The true value of Hubble’s Constant derived from geometry (‘The Principle of Astrogeometry’ on Kindle) is 70.98047 PRECISELY. It’s interesting this calculated value falls right between the latest measured findings. This tells you there is no Hubble tension, and the differences are simply due to the measuring methods, a bit like measuring the width of a room, first by a tape measure, and then by acoustic reflection. The two readings will differ by a small amount. This case is closed, and the implications of Hubble’s Constant being fixed changes the basic concepts of theoretical cosmology. Maxwell’s and Einstein’s equations are Aether based, and so C is really the speed of light in the Aether, not a vacuum. Einstein stated that in one of his autobiographies. Hunting dark matter and dark energy is in reality a study of the Aether, David Hine

Just an editorial question – what program do you use to generate the plots?

Most astronomers use matplotlib in python these days.

Thank you.

Should I take it that the signal/image processing is also done in python?

(I use Matlab, or the open source alternative Octave, for intensive data processing tasks – but I don’t like Matlab’s default plots)

Python is used for a lot of things these days, but not everything. Much of the work in this paper was done with python, but the foundational work of measuring rotation curves and processing the Spitzer images was done with more specialized software. There is a whole “astropy” software development effort that is moving many of these things to python, but they’re not all there yet. But when it comes to making beautiful plots, the python/matlab defaults are pretty lousy. One can improve them with effort, but many of these packages seem to give little attention to the final product.

Stacy, does this result depend on MOND? In other words, would your result change if MOND were wrong? It seems from your text that it does not / would not, but I ask nevertheless.

No, this is empirical: it holds irrespective of what the underlying cause for the BTFR is.

This is our most accurate calibration of the BTFR yet, and it remains consistent with MOND. But for the purposes of measuring H0, it is just a correlation between two quantities that can be used in this way.

Stacy, one more question: It seems that a higher value of H_0 would require lower amounts of cosmological dark matter. Milgrom regularly writes that MOND can explain away galactic dark matter, but not cosmological dark matter. So a higher value of H_0 should be welcome to you?

I don’t have a preference; I would just like to know what is correct.

Two things to deconstruct: (1) H0 and the cosmic mass density co-vary in the way you describe, so yes, higher H0 means lower Omega_m. However, it is not a huge change: it is still LCDM, just not the exact flavor of LCDM that fits the Planck CMB data. (2) MOND is a theory of dynamics at low accelerations that is an extension of Newtonian mechanics but not General Relativity. So it doesn’t really make a prediction for cosmology. If MOND is what is going on and one tries to measure Omega in a conventional, dynamical way, you will overestimate it by approximately the amount observed. So in that regard, one might hope to make cosmic dark matter “go away.” However, we need it (or something much like it) to explain the higher order peaks of the CMB and other aspects of structure formation about which MOND is mute. So… big Don’t Know.
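Point (1) can be illustrated with a rough sketch. The CMB constrains combinations of parameters better than any one alone; a commonly quoted approximate one is that Ω_m h³ (with h = H0 / 100 km/s/Mpc) stays nearly fixed. Taking Ω_m h³ ≈ 0.096 as an assumed, Planck-like value, the implied matter density drops as H0 rises. This only illustrates the direction of the degeneracy; it is not a fit, and the function name is hypothetical.

```python
# Rough illustration of the H0 - Omega_m degeneracy, assuming the CMB pins
# Omega_m * h**3 near a fixed value (assumed ~0.096, Planck-like), h = H0/100.
OMEGA_M_H3 = 0.096


def omega_m_for(h0_kms_mpc, omega_m_h3=OMEGA_M_H3):
    """Matter density implied by holding Omega_m * h**3 fixed."""
    h = h0_kms_mpc / 100.0
    return omega_m_h3 / h ** 3


for h0 in (67.4, 75.1):
    print(f"H0 = {h0} -> Omega_m ~ {omega_m_for(h0):.3f}")
```

Under this assumption, Ω_m goes from roughly 0.31 at H0 = 67.4 to roughly 0.23 at H0 = 75.1: lower, but still within the broad LCDM family.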

I wish scientists would forget their narratives and follow the scientific method they SAY they follow so assiduously. If they did, they would drop the narrative that the universe is expanding equally in all directions and look for other alternatives. And there is an obvious one that is plain as day. Expansion is galaxy-local. That’s right, galaxies expand into each other. That means we have a steady state universe. How can this be? It’s simple. Replace your one-time inflationary big bang with intermittent parallel inflationary mini-bangs from galaxy AGN SMBH. Then everything will make total sense. You can still keep all of your measurements and 90+% of your theories, but you will need to do some reframing. So you say, how is this possible? Natarajan stated on Strogatz’s podcast that she and Rees shoe-horned SMBH into LCDM. That was a big mistake. Why not actually study SMBH and realize that those polar jets can be driven by breaches from the core? And what is the core? Probably a lattice of immutable charged Planck spheres. Wouldn’t that make total sense? So nature is based on a 3D Euclidean space permeated by immutable, equal and opposite, energy carrying, charged Planck spheres. Everything we see is emergent from this 10^-35 scale, including the spacetime which is a particulate construct. Take advantage of the lockdown and think about this idea – take a look at my blog (jmarkmorris.com) – and really THINK. Everything falls out. Everything becomes obvious. The solutions to the large problems become child’s play. And the people that read this blog could take it far farther and faster than I have.

Stacy,

Is it correct to say that the Plank gives a mean expansion velocity from a few hundred thousand years after the big bang to some representative later “snapshot time” as can be best inferred by observing large scale structure as it appears to us today with light arriving from different epochs, depending on how far the various elements of this structure were from us when the light was emitted; and that astronomers measure the Hubble constant from the present epoch backwards in time to nowhere near the time the cosmic microwave background radiation (CMBR) is dated to, and without thus having to infer an awkward “snapshot time” over sizeable portions of today’s observable universe?

Assuming the above it is not clear to me how far the time periods over which these two estimates of the Hubble constant are made even overlap if indeed they do to any significant degree at all; and if there has been a positive acceleration in the expansion of the universe in any significant periods of non-overlapping time, then there is at least some grounds to expect that the two inferred measurements should not be equal.

Following on from this it is also not clear to me whether or not the acceleration in the expansion of the universe over large time periods interferes with the process of creating a “snapshot time” over sizeable portions of today’s observable universe to compare with the observed fluctuations in the CMBR.

Very sorry for the unfortunate typo in my previous comment. Please read “the Planck Team” in replacement for “the Plank”. My mistake made writing this comment last night, no disrespect was intended.

I’m sure they’ve heard worse 😉

What you say is a reasonable way to look at it. The CMB data provide a very precise measurement of the angular diameter distance to the surface of last scattering at z = 1090. That’s just a few hundred thousand years after the Big Bang; 13+ billion years ago. So very much a global measurement.

We measure something much more local, at redshifts z << 1. We are doing something akin to what Hubble did: measuring distances to nearby galaxies and their correlation with redshift. While I don't think most people would call hundreds of millions of light-years "local", it is to cosmologists.

This is where it gets challenging. Direct measurements like ours are straightforward (what is the slope of the line of velocity vs. distance?), but global measurements like that of Planck by construction average over the entire universe. That's better, in principle, but you also have to get everything else right – the expansion history and the variation of deceleration/acceleration that you mention. Planck doesn't measure H0 so much as it provides a simultaneous constraint on H0, Omega_m, Omega_b, Omega_Lambda, n, sigma_8, and miscellaneous other parameters. These co-vary; the result for one depends on all the others. So it is not a straight-up comparison in many respects.

The fit that Planck provides is only as good as the underlying assumptions: GR+cold dark matter+Lambda. Since we know GR doesn't play nice with quantum mechanics, and we don't know what the dark matter is (or if it really exists), and we had to re-invoke Lambda as an extra tooth fairy to keep it all patched up, I worry that these assumptions are merely the series of band-aids that get us as close as possible to approximating some underlying reality that we don't yet grasp. Tensions like that in H0 (also present in Omega_b, sigma_8, etc.) may be a hint that we've finally reached the level of precision where we can't explain away the anomalies.
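To see why a CMB-based H0 depends on everything else, here is a minimal sketch of the comoving distance in a flat ΛCDM model, D_C = (c/H0) ∫ dz / E(z) with E(z) = √(Ω_m(1+z)³ + Ω_r(1+z)⁴ + Ω_Λ). The radiation density (Ω_r ≈ 9×10⁻⁵), the flatness assumption and the simple trapezoid integration are illustrative choices; the actual CMB analysis fits many more parameters together. At z ≪ 1 the result reduces to Hubble's law, D ≈ cz/H0, while at the surface of last scattering (z ≈ 1090) it depends on Ω_m as well as H0.

```python
import math

C_KMS = 299_792.458  # speed of light, km/s


def e_of_z(z, omega_m, omega_r=9e-5):
    """Dimensionless expansion rate H(z)/H0 for a flat universe (omega_r assumed)."""
    omega_lambda = 1.0 - omega_m - omega_r
    zp1 = 1.0 + z
    return math.sqrt(omega_m * zp1 ** 3 + omega_r * zp1 ** 4 + omega_lambda)


def comoving_distance_mpc(z, h0, omega_m, steps=20_000):
    """Comoving distance (Mpc) by the trapezoid rule in u = ln(1+z), dz = (1+z) du."""
    u_max = math.log1p(z)
    du = u_max / steps
    total = 0.0
    for i in range(steps + 1):
        zi = math.expm1(i * du)
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * (1.0 + zi) / e_of_z(zi, omega_m)
    return C_KMS / h0 * total * du


for h0, om in ((67.4, 0.315), (75.1, 0.315), (75.1, 0.227)):
    d_c = comoving_distance_mpc(1090.0, h0, om)
    print(f"H0={h0}, Omega_m={om}: D_C(z=1090) ~ {d_c:,.0f} Mpc")
```

Raising H0 at fixed Ω_m shrinks the distance to last scattering, while lowering Ω_m stretches it back out, so the two parameters trade off against each other in a fit.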

“Can’t see the wood for the trees” applies here. The Hubble sky ‘stretching’ is described by 2 X a megaparsec X C, divided by Pi to the power of 21 = 70.98047 K / S / Mpc. For this equation (taken from Astrogeometry), a parsec is the 3.26 light years standard, and C is the speed of light in the Aether (Maxwell’s electric Aether equations). Therefore, the reciprocal of 70.98047 is ‘fixed’ at 13.778 billion light years, which is the Hubble horizon distance, and NOT the age of the universe, which must increase with time, David Hine

Did you forget to check that you already posted here in your watch-list?

It’s your second comment to this post with the same nonsense.

I assume you must have a watch-list because I saw you posting repeatedly the same nonsense on several other blogs.

Apass. So if this equation is nonsense, can you state exactly why, and then back your statement up in a proper scientific way? Please stick to the space units of that equation, and do not attempt to confuse this by introducing non-applicable units and scales, and sidetracking by deviating to other things. Just because you don’t like something you are not familiar with is no proof it’s not the truth. There is the grave danger that mindsets, and not true science, now completely rule the Hubble Constant study. David Hine.

There is a grave danger of mindsets. There is also a grave danger of social media spats. Please, no spats here.

Tritonstation, With due respect to you, all I’m asking for is a proper reason why this Astrogeometry Hubble equation is wrong? Science is to question, and then to disprove or accept a new thing put forward. If something cannot be disproven scientifically, it’s going to be a truth. So far no one has disproven this Hubble equation, although 1000’s have tried, including several Professors. To simply ban this, or ignore it, cannot lead to any scientific progress. Disproving it with maths may even lead to a new unthought of approach regarding the Hubble Constant issue. Regards, David Hine.

I haven’t banned it. As I’ve noted elsewhere, I pay for this microphone. It isn’t a poster board for any and every idea anyone might have. If I wanted to delete your comments, I could do so. I have not – so far. But while I’m happy to let this stand for others to read, I do not owe you – or anyone else – a response to every comment. Life is too short.

Please delete this Hine garbage, Tritonstation. This man is spamming this nonsense even on Dutch blogs; just delete it. See http://www.astroblogs.nl/2020/06/23/boeiend-de-eso-h0-2020-e-conferentie-deze-week/comment-page-1/#comment-138265

Why waste Stacy’s time when your question has already been answered:

https://telescoper.wordpress.com/2019/07/04/wolfram-alpha-and-the-principle-of-astrogeometry/

I could go on; there were 109 hits using Google. Spamming other people’s blogs with the same comment every time is guaranteed to make you unpopular.

At this point, I’m tempted to come up with some rude assertion about David Hine, an assertion that cannot be scientifically disproved. Therefore it must be true in all its rudeness!

But it doesn’t work that way. Assertions must be proved, and if they cannot be proved even enough to make them interesting, they might never be fully examined and yet remain false.

I understand Mr. Hine’s desire to be taken seriously, but he appears unwilling or unable to earn that treatment.

Ball is in his court.

sean s.

FYI, I’ve blogged this paper at https://dispatchesfromturtleisland.blogspot.com/2020/06/a-new-approach-to-measuring-hubbles.html

Tritonstation. That’s very fair, and of a very polite, and a highly professional approach. This is more than from many other scientists in the cosmology area. I can explain more of the background in private emails if you are interested, as I do not want to name scientific individuals, (who have been very abusive), openly in a public forum. However, if anyone here can sensibly challenge the Hubble equation put before you here, that will be really interesting, and could settle the Hubble issue in a neat professional way. Regards to all here, David Hine.

Tritonstation, I see there are the bad elements now creeping in here to attack again, so I will not respond to them here. So, I will leave it as it is, but I will be very happy to correspond with you privately, if you wish. This is my last post here, but I would love to hear from you by email to discuss the science of Hubble and the cosmos. Regards, David Hine

Now it’s firmly established Hubble’s Constant is ‘fixed’ by maths at precisely 70.98047 K / S / Mpc, along with its ‘fixed’ reciprocal of 13.778 billion light years, let’s now discuss what this REALLY implies about the Universe. What does it say about the Big Bang, and what is the real true age of the Universe?? This is new science about the Cosmos, and takes us back to ‘square 1’, and Maxwell’s Aether equations, David Hine

‘The Principle of Astrogeometry’ first appeared in a short printed booklet form, and released in 2008. More recently, in 2017, it was placed on Amazon Kindle. It was created before there was this thing called Hubble Tension. This appears to be of little relevance, and is the result of differing ways of measuring Hubble’s Constant. Measuring Hubble’s Constant supports and confirms the Astrogeometry Hubble equation is correct. This now needs to be placed on the Wikipedia Hubble chart as the Hubble Constant reference value that the measured Hubble values surround on either side. This will nicely complete the Wikipedia Hubble chart, and support the measured Hubble values, David Hine

I wish to thank Tritonstation for allowing the Astrogeometry Hubble Constant ‘fixing’ equation to remain on this blog. It’s openly up for sensible challenge. If it survives all oncoming challengers, it’s going to be a fundamental Universe truth. A ‘fixed’ in value Hubble Constant totally changes the basic theories about the whole Cosmos. It condemns the Big Bang concept instantly, and changes, but does not rule out, the concepts of dark matter / dark energy. It also means a return to the Aether concept, which Maxwell and Einstein used as the foundation for their beautiful equations. It’s interesting there has been no fundamental progress in theoretical Cosmology since Einstein’s Aether based Relativity equations!! There is much more in the area as to the motivation and design of these Cosmos equations. In the meantime, the Astrogeometry Hubble equation is up for open challenge, and I really appreciate and thank Tritonstation for giving it an open platform for genuine scientific scrutiny, David Hine

Tritonstation, I must sincerely apologise to you for the bad comments from ‘Telescoper and Co.’ that have crept into this blog. These people cannot bear anything that is a Universe truth, and prefer the dark. I have great difficulty in breaking down the agenda driven mindsets of these people, and placing the Astrogeometry equation in many places is the only voice I have. It’s not me they despise, but what that Hubble equation implies. They cannot disprove it, and it gives the right answer, and so settles Hubble’s Constant value, and its reason for being that value. They don’t want that for several ‘dark’ reasons, but I only believe in honest open science, not hidden agendas, obtaining grants based on false theories, or elite snobbish closed science societies. I am happy if any one of these characters can legitimately disprove it. It appears they cannot, so they then resort to very unpleasant low life methods and insults, both direct and sneaky behind the back, but that will eventually discredit science institutions in the public’s eyes. I really despise these people, not for their disagreeing, for that is the essence of science, but for their nasty sly tactics when they cannot disprove Astrogeometry. They want you to ban me, so the problem of Astrogeometry will not rock their boat, and they will not have to face this deep truth about the Universe. I know the Creator is watching every move, and that’s the thing that scares them, and me the most. The worst news for them is the Universe is a created entity, with a designed math framework (Maxwell). Anyway, if you ban me, I will accept that, as I am merely an uninvited guest here. I am sorry this is too long a post, but I hope my side of it makes some sense to you. Regards, David Hine.

Everyone please stop.

This is really a philosophical tribute to Stacy’s Blog, and indeed to his whole approach to scientific enquiry.

A few years ago I attended a multi-day conference on the history and philosophy of science held at Trinity College, Cambridge (England). On one of the days there was a trip organised to Woolsthorpe Manor, the birthplace of Isaac Newton, with a talk given on Newton by someone whose name I forget. The summary of what was said is this: in the end, what was really important about Newton was that he created a complete and consistent deductive system of thought, the first real one; he was what we would call a systems thinker or a systems integrator today.

The trouble with existing systems of thought, the one created by Newton especially, and the ones created by others since, is that they are designed intentionally as complete systems, and that being true, it is often really hard to make changes to them, perhaps to fit new observation evidence, without breaking them completely.

This difficulty in the face of new awkward scientific observational and experimental evidence has to be handled in one of three main ways, in order to be taken seriously by the scientific establishment I think: the first choice is to ignore the new evidence (often the most popular approach); or secondly, if you really can’t ignore the new data, then try to add new theoretical deductions that are nominally consistent with the existing system, postulating new unobservable entities as necessary, as you go along; or finally turn up with a completely new deductive system of thought based on a new way of thinking about the world around us, largely fully formed, explaining and describing more than before (which is almost impossible of course).

Everything else is treated as noise: all criticism, all ad hoc and singular hypotheses and theories which are not set in an existing or newly demonstrated wider system of thought really don’t cut the mustard anymore – and that is ultimately because of Newton. People have thought of endless singular ways to improve on Newton’s system, modifying the inverse square law very slightly in numerous ways, for example. However, nothing ever really worked until some of his major underlying assumptions and axioms were challenged in new ways that allowed new systems of deductive thought to finally emerge.

Here is the real deal: to make theoretical progress in science other than by tweaking and improving on existing systems of thought, you have to be able and willing to think not only about the physical assumptions, both tacit and explicit, but also about the axioms currently underlying deductive mathematical knowledge. There is no school or university set up to teach the full array of skills needed; for example the art of self-criticism, the ability to not take your own or existing currently accepted theories too seriously, the ability to avoid closing your mind too early in your life to other ideas, other possibilities, other productive ways to think that you may from time to time encounter.

In the tension between the two main methods of measuring the Hubble constant there exists either something really significant that calls for a new system of thought, or something that hasn’t been found yet will turn up that can readily be moulded in line with existing thinking. In this and other situations like it, no one knows in advance which way the dice will fall. The odds are normally heavily on the latter. There is I think nothing wrong with making a big intellectual bet one way or the other; however, the real trick is not to go all in either way: always hold some chips in reserve.

I liked the way that Richard Feynman described doing science: The first principle is that you must not fool yourself — and you are the easiest person to fool.

We can suggest a number of possibilities for the tension in H0, including:

1) The models predicting the measured temperature fluctuations of the CMB are wrong, giving a wrong value of H0;

2) The models are correct but something happened between about z~1000 and z~10, to give two different H0 values [1];

3) The universe is inhomogeneous on scales much larger than usually assumed.

The clue that there might be something wrong with the models is that they overpredict the power in the temperature fluctuations at low angular frequencies (corresponding to angles on the sky between 6 and 90 degrees); (2) is just a recognition that we are calculating H0 at very different times in the universe’s history; (3) the cosmological principle is an assumption and we need to ask what deviation from that assumption would be sufficient to account for the difference.

Here we need theory to drive observational tests, but as James rightly points out, it is difficult to find people with the breadth of knowledge to create a theory that differs significantly from what we already have [2].

[1] Anything that happened after the end of BBN could also affect H0, because we assume that the physics at the time of BBN was the same as today’s (so this is really possibility (1)).

[2] We know that the Standard Model doesn’t accommodate neutrinos with non-zero mass, but hopefully this calls for a tweak rather than anything more.

Interesting comment. I would personally plump for a mixture of (1) and (2) in your list.

I see from your blog that you’re attending King’s College. A few years back I attended a short course on ‘standard model’ cosmology, given at the Royal Institution by Dr Malcolm Fairbairn of King’s, which I enjoyed.

I remember asking him whether some continuous function of acceleration could be fitted to both the early accelerated expansion of the universe, termed “inflation”, and the more recent accelerated expansion we observe today. I think the conclusion of our short conversation was that we did not have enough constraints (between then and now) to define such candidate functions with any certainty.

This is a shame.

Naively, I don’t see why we can’t develop simple computer models to test a range of hypothetical continuous functions for plausibility, as a talking point as much as anything. A simple function would be 1/r. The acceleration of the universe’s expansion today is approximately c^2/R (perhaps just by coincidence), where c is the speed of light and R is the radius of the observable interface. This immediately suggests a 1/r function if c remains constant.

The simplest varying-speed-of-light model is to postulate that the observed rate of accelerated expansion does not change with time; c would then be proportional to the square root of r.

Maybe I will think further on this when I find time.

Interesting. c has to be constant relative to the Aether, but the Aether itself, which carries the light, is relativistic (Einstein/Maxwell). This is catered for in the Pi divided by 21 part of the Astrogeometry Hubble equation, David Hine.

Sorry, in my haste I made a typo. I should have said “This is catered for in the Pi to the power of 21 part of the astrogeometry equation”. Apologies, David Hine

For some reason I don’t understand I ended up with the word “interface” instead of “universe” in above comment.

James, it’s purely a typo. We all make them when we blog in haste. When reading the post, after posting (of couse!!), the typo stares you in the face!! All part of the fun! Regards, David Hine

I’ve just typo’ed again with the word couse, which was supposed to be ‘course’, of course!! Should go to ‘Spec Savers’!! David Hine

What I said above may be nonsense. There may exist no plausible continuous function of positive acceleration that links the accelerated expansion in the era of inflation with the accelerated expansion the universe appears to be undergoing much nearer to the present day.

I certainly made the unfortunate mistake of confusing the characteristic acceleration at which MOND effects appear, approximately c^2/R ≈ 1.2 × 10^-8 cm s^-2 (perhaps by coincidence), with the accelerated expansion of the universe associated with the cosmological constant.

The point I really wanted to make, I think, is this: if the MOND acceleration used to describe the observed dynamics of galaxies is somehow related to the size of the observable universe, and the accelerated expansion of the universe has definitely changed over time with the size of the universe, then is there some relationship between the two accelerations that could be used to infer the maximum extent to which the accelerated expansion changes over time, at least back to the oldest MOND galaxies we can currently observe?

It’s a long time since I last got my head around this stuff, hence the many mistakes, sorry. Cosmology is a difficult and mind-blowing subject, at least for me.

Yes, it is hard to wrap one’s head around all this.

The MOND acceleration scale is numerically of the same order as the cosmic acceleration, so perhaps there is a connection. The simplest thing one can do is scale a0 with the product of the speed of light and the Hubble constant. Then a0 should vary with the Hubble parameter over cosmic time, but such variation is not obviously observed. The Tully-Fisher relation does not appear to evolve out to a redshift of one beyond what you expect from the evolution of stars, which implies that a0 is constant over that range.
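The numerical coincidence can be checked with a few lines of arithmetic. This is a minimal sketch: it takes a0 ≈ 1.2 × 10^-8 cm s^-2 (the figure quoted earlier in the thread), evaluates c·H0 for the two H0 values mentioned at the top of the post, and also shows c·H0/2π, the form in which the coincidence is often quoted. The constant and function names are illustrative.

```python
import math

C_CM_PER_S = 2.99792458e10           # speed of light in cm/s
KM_PER_MPC = 3.0856775814913673e19   # kilometres in one megaparsec
A0_MOND = 1.2e-8                     # MOND acceleration scale in cm/s^2, as quoted in the thread


def hubble_rate_per_s(h0_km_s_mpc: float) -> float:
    """Convert H0 from km/s/Mpc to s^-1."""
    return h0_km_s_mpc / KM_PER_MPC


def c_times_h0(h0_km_s_mpc: float) -> float:
    """Acceleration c*H0 in cm/s^2."""
    return C_CM_PER_S * hubble_rate_per_s(h0_km_s_mpc)


for h0 in (67.4, 76.0):
    a = c_times_h0(h0)
    print(f"H0 = {h0:5.1f}: c*H0 = {a:.2e}, c*H0/(2 pi) = {a / (2 * math.pi):.2e}, "
          f"a0 = {A0_MOND:.1e} (all cm/s^2)")
```

Because c is fixed, any scaling a0 ∝ cH would make a0 track H(z), which at z = 1 is roughly 1.8 times H0 in standard ΛCDM; that is the kind of evolution the Tully-Fisher data do not obviously show.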

Other things are possible. A more promising relation is with the cosmological constant, which is what is thought to drive the cosmic acceleration. But how that connection occurs, if there is one, is unclear.

James, an interesting post. The framework you are describing applies to development of previous ideas, or perfection of a factory process, such as making a better faster computer. However, in science, occasionally theories have to be abandoned simply because they become a blind alley. If no real progress is being made, this is telling you that track is a dead end. This now applies to Hubble Constant hunting, and it’s going nowhere now (stalemate). So being Jewish, we think that there comes a time when a totally different approach is required. That’s exactly what Einstein did with his very Jewish theories of Relativity. Fortunately for the very radical undisciplined Einstein, the faithful support of Eddington saved the day. In one of Einstein’s autobiographies, Einstein stated that Eddington said “I will handle those difficult stuffy professor types!”. Fortunately, Eddington grasped Einstein’s maths, and that was how Einstein combated the intense opposition to his new ‘undisciplined’ ways. Unfortunately, I don’t have an “Eddington” who is able to understand Aether based maths. This equation does the job, and tells us the exact ‘fixed’ value of Hubble’s Constant. As long as no one can disprove it scientifically, it stands, David Hine.

All good comments. Certainly Feynman’s point is important – a lot of people are fooling themselves.

Oh dear. Poor old Eric Laithwaite has vanished again. Gravitational levitation in overdrive?? David Hine

Enough, already.

Stacy, can I get your opinion on a theme repeated a few times here by David Hine? He’s written that “If something cannot be disproven scientifically, it’s going to be a truth.” Stacy, do you think that’s correct?

It seems wrong to me.

sean s.

I hate to enter this debate. The following things shouldn’t need to be said. The particular quote you refer to is an obvious falsehood. That the story related by the Star Wars films literally happened a long time ago in a galaxy far away cannot be disproved scientifically. That hardly guarantees that it happened. By the same token, his assertions about the precise value of the Hubble constant are complete gibberish. I let them stand as a test for rational thought: I’m not going to justify my assessment, leaving it as an exercise for the reader. It is not a challenging exercise.

Hi Tritonstation. I value your reply to Sean’s statement, and I appreciate very much you letting my posts remain here. As more measured Hubble results come in, a pattern is now forming. It looks like 71 is going to be right in the centre of things. It appears gibberish, because the Pi to the power of 21 part is an Aether concept derived from Maxwell. This is not well understood, and very difficult to explain to anyone who has been ‘educated’ in today’s colleges and schools. The simple picture is portrayed in Astrogeometry, but the reality is far FAR deeper. I do not want to draw Tritonstation into any dispute over this, as this could damage his reputation with his peers. So Sean, please do not try to stir it up for Triton by drawing him into an argument on this. I have done as Triton asked and kept my head down until your remarks designed to stir it up. Let’s just sit back, and see what future Hubble results come in. In a while, we will see the real pattern forming on which to then draw conclusions. That’s the way forward in this branch of Cosmology. Regards, and thanks, David Hine.

I am not and will not try to draw anyone into any argument. Tritonstation’s response confirms the view I’ve held since the first time I saw the comment in question. Being a mere layperson, I sought only information, and now that I have it, I shall resume my previous relative silence.

Carry on.

sean s.

Hi Sean, my apologies for assuming a stir-up motive. I have encountered that so many times from those who cannot stand anything that does not fit with their mindsets. So please excuse me for being ‘suspicious’. Cosmology is a branch of physics that is full of aggressive condemnation, because the truths of the Universe are mostly hidden from us. Most of Cosmology is based on pure conjecture, and any theory can crash overnight as new ‘evidence’ comes in!!! Look how Newton was toppled by Einstein, using only Maxwell’s equations as his foundation. Regards, David Hine

Not a problem, David. In the ordinary course of internet-dispute, your suspicions were reasonable and easily relieved. This ain’t my first rodeo; and clearly not yours either.

; )

sean s.

Thank you Sean, I have had ‘Rodeos’ with many who are not remotely willing to take the long view, sit back, and wait for further evidence, before condemning something different. The worst ones are the stuffy scientific institutions who hate ANY ordinary public ‘outsider’ daring to challenge their ‘narrow closed shop viewpoint’, as if it’s sacrosanct and not up for open debate!! Clearly, that’s not how science should be done. I wish again to thank you and Tritonstation for allowing this ‘sit back and wait’ period on this fascinating Hubble Constant issue. More measured results will always be coming in, and I regularly view the Wikipedia Hubble chart to see how the calculated geometry ’71’ value compares to the other measured values coming in. Especially with Hubble’s Constant, I feel that is the right scientific approach, and seeing what patterns emerge, before ruling out the geometry calculated approach. Regards, David Hine.

Can’t resist commenting on Jim Schombert’s conclusion that the universe is in fact a mere 12.6 billion years old. This makes me feel over a billion years younger. (If the quarks that compose me are a billion years younger, then so am I.)

🙂

As good as it is to feel a billion years younger, Jim did not say this. He made a new measurement of the Hubble constant. The age of the universe is the reciprocal of that in the special case of a coasting universe in which the expansion neither accelerates nor decelerates. Properly accounting for that only shaves a couple hundred million years off. So I’m not sure where the 12.6 number even comes from. It appears that someone in the UO press office thought that the expansion rate of the universe was too obscure to be interesting, so translated it into an age. They also didn’t think it was interesting enough for an actual press release; the web page they wrote was, as best we can tell, for internal university consumption. But the web is visible to all, so some news sites picked up on it anyway. This is the short version of things that can go wrong in communicating science to the public, as the press trumpets that we measure this particular age for the universe when in fact this quantity is nowhere mentioned in the paper.
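The difference between the coasting age 1/H0 and the conventional estimate can be sketched for a flat universe containing only matter and a cosmological constant (radiation neglected), for which the age has the closed form t0 = [2/(3H0√ΩΛ)] sinh^-1(√(ΩΛ/Ωm)). The H0 = 75 km/s/Mpc and Ωm values below are illustrative assumptions, not numbers taken from the paper, and the function names are hypothetical.

```python
import math

KM_PER_MPC = 3.0856775814913673e19   # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16         # seconds in 10^9 Julian years


def hubble_time_gyr(h0_km_s_mpc: float) -> float:
    """Coasting-universe age 1/H0, in Gyr."""
    return KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR


def flat_lcdm_age_gyr(h0_km_s_mpc: float, omega_m: float) -> float:
    """Age of a flat matter + Lambda universe (radiation neglected), in Gyr."""
    if not 0.0 < omega_m < 1.0:
        raise ValueError("omega_m must lie strictly between 0 and 1")
    omega_l = 1.0 - omega_m  # flatness fixes Omega_Lambda
    factor = 2.0 / (3.0 * math.sqrt(omega_l)) * math.asinh(math.sqrt(omega_l / omega_m))
    return factor * hubble_time_gyr(h0_km_s_mpc)


h0 = 75.0  # illustrative value only
print(f"coasting age 1/H0: {hubble_time_gyr(h0):.2f} Gyr")
for om in (0.27, 0.30, 0.315):
    print(f"flat LCDM, Omega_m = {om}: {flat_lcdm_age_gyr(h0, om):.2f} Gyr")
```

The size of the correction depends sensitively on the assumed Ωm; for Ωm = 0.3 the flat ΛCDM age is about 96% of 1/H0.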

Without wishing to cause a rodeo, using the calculated geometry method of determining Hubble’s Constant gives the fixed value of 70.98047. From this, its reciprocal is 13.778 billion light years, which is the Hubble Horizon distance ONLY. With this calculated method (Astrogeometry), derived from Maxwell’s Aether based equations, Hubble’s Constant is ‘fixed’, so this 13.778 number cannot increase with time, so is NOT the age of the universe. The age of the universe is something very VERY different, and outside the scope of this forum. To go into that here will get me banned, but I will discuss it willingly if permitted by Triton Station, David Hine.

It is striking that the conventionally inferred age for a universe that first decelerates then accelerates its expansion rate happens to come out so close to the result for a coasting universe, 1/H0. One might even consider that a coincidence problem of the same nature that launched the Inflation paradigm.
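How finely tuned that coincidence is can be made concrete by asking which matter density makes H0·t0 exactly 1 in a flat matter-plus-Λ universe. A minimal bisection sketch under the same assumptions (flat geometry, radiation neglected); the function names are hypothetical.

```python
import math


def age_factor(omega_m: float) -> float:
    """Dimensionless age H0*t0 for a flat matter + Lambda universe (radiation neglected)."""
    omega_l = 1.0 - omega_m
    return 2.0 / (3.0 * math.sqrt(omega_l)) * math.asinh(math.sqrt(omega_l / omega_m))


def omega_m_for_coasting_age(lo: float = 0.05, hi: float = 0.95, tol: float = 1e-10) -> float:
    """Bisect for the omega_m at which H0*t0 = 1, i.e. the age equals 1/H0.

    age_factor decreases as omega_m increases, so [lo, hi] brackets a single root.
    """
    def f(om: float) -> float:
        return age_factor(om) - 1.0

    if f(lo) * f(hi) > 0:
        raise ValueError("bracket does not contain a root")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(lo) * f(mid) <= 0:
            hi = mid  # root lies in the lower half
        else:
            lo = mid  # root lies in the upper half
    return 0.5 * (lo + hi)


root = omega_m_for_coasting_age()
print(f"H0*t0 = 1 when Omega_m = {root:.3f}")
for om in (0.25, 0.30, 0.315):
    print(f"Omega_m = {om}: H0*t0 = {age_factor(om):.3f}")
```

The root comes out near Ωm ≈ 0.26, not far from measured matter densities of roughly 0.3, which is why the agreement with 1/H0 looks like a coincidence.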

And yes, going on at greater length about this will get you banned.

Have we now entered Hubble stalemate? I put it to you to say where this is wrong:- 2 X a mega parsec X C, divided by Pi to the power of 21 = 70.98047 K / S / Mpc For this equation, a parsec is 3.26 light years. If you can’t sensibly disprove this equation, your maths ability may not be adequate to understand it. That’s nothing to be ashamed of, as I am a total duffer at digital circuit design. We have a good man ‘on board’, who is a wizard at digital. He designed DAB Radio for Norway and the UK.!!!!!!! I’m no lover of Wikipedia for their too secular bias, but please don’t knock their Hubble Charts, or their Hubble diagrams without proper reason. You are being a ‘science snob’, and trash anything that questions your gods at NASA (No Aliens Seen Anywhere). I regard being banned as a ‘win’. The Hubble Constant will test most of the current cosmology theories, and many of those are now looking decidedly false. A true scientist will always refer to Wikipedia, which is a valuable reference place. The beauty of Wikipedia is it tries to be as comprehensive as possible. They admitted they do not understand the Astrogeometry equation, so could not include ’71’ in their Hubble Chart. That was a fair response, but one or two there rattled their prams and spat out their dummies over that ‘elegant’ equation -joy!!!!. No one likes to be beaten. Science is about accepting something different to study. If you don’t do that, you are a ‘stalemated cowed scientist’. Do something brave and original, and if it upsets your paymasters, you know you have discovered a truth!!! Truth won’t get you a Nobel, because you have to grovel and tell big lies for that in the cosmology area. Regards David Hine.

Your equation is meaningless. There’s nothing special about a Megaparsec. You could just as easily have inserted miles or kilometers or furlongs there. There is no reason to raise pi to the 21st power. If that didn’t work, you could have raised it to the 22nd. Or 20th, or whatever. This is pure numerology, devoid of meaning.
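The arbitrariness can be checked directly. The expression multiplies a length by a velocity, so its units are length × velocity rather than the inverse time that H0 requires; the number 71 only appears because a megaparsec is written as about 3.26 million light-years. This sketch evaluates the same expression with the megaparsec expressed in different units and with neighbouring powers of π (the names are illustrative).

```python
import math

C_KM_S = 299792.458  # speed of light in km/s

# Length of one megaparsec expressed in different units
MPC_IN_UNITS = {
    "light-years": 3.26156e6,
    "parsecs": 1.0e6,
    "kilometres": 3.0856775814913673e19,
}


def numerology(mpc_length: float, power: int = 21) -> float:
    """Evaluate 2 * (1 Mpc in some unit) * c / pi**power, with c in km/s."""
    return 2.0 * mpc_length * C_KM_S / math.pi ** power


for unit, length in MPC_IN_UNITS.items():
    print(f"Mpc in {unit:>11}: {numerology(length):.4g}")
for p in (20, 21, 22):
    print(f"light-years, pi^{p}: {numerology(MPC_IN_UNITS['light-years'], p):.4g}")
```

Only the light-year version lands near 71 (the parsec version gives about 22, the kilometre version about 7 × 10^14), and each step of one in the power of π changes the result by a factor of π.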

No good deed goes unpunished. I’ve let you say lots and I haven’t banned you – yet. I did discontinue comments on the latest post because too much is enough already. I’m not hosting an intergalactic kegger here.

I enjoy your blog. Personally I’d be happy to see you crack down on the intergalactic kegger a bit sooner. The ‘noise’ distracts from your signal. My worry is you’ll take the whole blog down in disgust.

This is a valid concern. Apparently I am obliged to learn things about controlling posting here that I had preferred to leave to the honor system.

Yes, sorry we can’t all be better. I’m copying the intro to the comment section from Peter Woit’s blog ‘Not Even Wrong’:

quote:

Informed comments relevant to the posting are very welcome and strongly encouraged. Comments that just add noise and/or hostility are not. Off-topic comments better be interesting… In addition, remember that this is not a general physics discussion board, or a place for people to promote their favorite ideas about fundamental physics. Your email address will not be published. Required fields are marked.

end quote:

A very civil comment section. I think he reviews comments before posting.

Yes, Peter Woit’s rules are good ones to apply here. It’s almost as if he has had enough experience with the many social mishaps that happen on the internet to classify and describe each of them.

Sabine Hossenfelder has similar rules. But her comment section doesn’t accept anonymous comments (i.e. without a Google account). So although I sometimes find something I would like to comment on… I can’t.

I do hope that the solution you’ll find will not ban anonymous users.

Anyway – even if I won’t be able to comment, I’ll still read your posts (for which I’d like to thank you because they are really down to earth in a world full of noise!)

The nonlinear Hubble flow (vs constant like speed of light constant c as starting postulate of relativity) was resolved in mid 1990’s in Dr Suntola’s unified system concept of Dynamic Universe. It makes present c decelerating together with the expansion speed C4 of Riemann 4-radius R4 as nonlinear function of 1/sqrt(R4). The simple physical law of 0-energy balance provides the dynamic “Hubble flow” of dC4/C4=-1/2 dR4/R4 per TIME unit of Mpc=3.262 M ly as -35.5 km/s/Mpc=-1.15 10^-18/s. Or H0=71 km/s/Mpc=2.3 10^-18/s near z=0 of differential Mpc distance unit.

I have tabulated these values at various z distances such as z=3 where H0=(z+1)^1.5 or 8 times present rate 71 km/s/Mpc with c=C4=2 times present c or 600,000km/s and R4=3.45 Bly. The biggest DU impact on GR is time T4=9.2/8 = 1.15 B yrs or present age is 9.2 B yrs since the turning point T4=R4=0 when measured with today’s slowed ticking rate of atomic clock. See Suntola DU books or find my posts at various web sites commenting HOLiCOW et al recent test cases.

David Hine. The 0-energy balance starting point of Suntola DU goes even deeper than nonlinear Hubble flow as it removes the GR starting postulate of constant c by connecting it with the variable contraction/expansion speed C4 of METRIC 4th radius R4. Away goes need of Big Bang, Planck constant, Dark Energy/Matter, Equivalence Principle etc while agreeing with past Earth bound proofs of GR/QM.

Because of different scale factors of cosmic distances vs time (and its inverse or frequency) today’s H0=71 km/s/Mpc at z=0 of dR4 differential Mpc is slowing down. It has been much higher (vs lower values of LCDM closer to the EFFECTIVE H0_eff=35.5 km/s/Mpc at z=0) in past with large z values. It also causes rethinking of Gravitational Wave theories and related cosmic space mapping and surveying technologies such as deep space GPS and 4/5-D photogrammetry using accurate and automated multi-image stereo mensuration, see my 12/2013 jogs paper and its references.

Also read both DU books by Suntola from his science society sites. Of course, DU has proven wrong many recent Nobel committees, including the latest two days ago. Suntola’s derivation of BH critical radius, minimum orbital period time and smooth capture of surrounding material near Sag A* was confirmed by observations years ago and repeatedly published in books, papers and annual DU seminars.

This is not an 8chan chat board. Many comments by David Hine have been removed, so some of the above responses may now appear without context.

Derivation of Hubble’s Law and the End of the Darks Elements

https://www.scirp.org/html/91689_91689.htm

One month to get an answer (most likely an acceptance) from a journal; a paper with 9 references in total, of which 6 are to Wikipedia and one is to an online equation solver… I think these say a lot about the quality of the paper.

I understand it is an open access journal, but based on what I can see, it is a predatory journal…

I think there should also have been a credit to Google Translate for translating the paper from Portuguese into English. 😦

Also (self-)plagiarism; here is the same article in another journal: https://www.medcrave.org/index.php/OAJMTP/article/view/18/html
