A unique prediction of MOND

One curious aspect of MOND as a theory is the External Field Effect (EFE). The modified force law depends on an absolute acceleration scale, with motion being amplified over the Newtonian expectation when the force per unit mass falls below the critical acceleration scale a0 = 1.2 x 10^-10 m/s/s. Usually we consider a galaxy to be an island universe: it is a system so isolated that we need consider only its own gravity. This is an excellent approximation in most circumstances, but in principle all sources of gravity from all over the universe matter.

The EFE in dwarf satellite galaxies

An example of the EFE is provided by dwarf satellite galaxies – small galaxies orbiting a larger host. It can happen that the stars in such a dwarf feel a stronger acceleration towards the host than to each other – the external field exceeds the internal self-gravity of the dwarf. In this limit, the stars are more a collection in a common orbit around the larger host than they are a self-gravitating island universe.

A weird consequence of the EFE in MOND is that a dwarf galaxy orbiting a large host will behave differently than it would if it were isolated in the depths of intergalactic space. MOND obeys the Weak Equivalence Principle but does not obey local position invariance. That means it violates the Strong Equivalence Principle while remaining consistent with the Einstein Equivalence Principle, a subtle but important distinction about how gravity self-gravitates.

Nothing like this happens conventionally, with or without dark matter. Gravity is local; it doesn’t matter what the rest of the universe is doing. Larger systems don’t impact smaller ones except in the extreme of tidal disruption, where the null geodesics diverge within the lesser object because it is no longer small compared to the gradient in the gravitational field. An amusing, if extreme, example is spaghettification. The EFE in MOND is a much subtler effect: when near a host, there is an extra source of acceleration, so a dwarf satellite is not as deep in the MOND regime as the equivalent isolated dwarf. Consequently, there is less of a boost from MOND: stars move a little slower, and conventionally one would infer a bit less dark matter.

The importance of the EFE in dwarf satellite galaxies is well documented. It was essential to the a priori prediction of the velocity dispersion in Crater 2 (where MOND correctly anticipated a velocity dispersion of just 2 km/s where the conventional expectation with dark matter was more like 17 km/s) and to the correct prediction of that for NGC 1052-DF2 (13 rather than 20 km/s). Indeed, one can see the difference between isolated and EFE cases in matched pairs of dwarf satellites of Andromeda. Andromeda has enough satellites that one can pick out otherwise indistinguishable dwarfs where one happens to be subject to the EFE while its twin is practically isolated. The speeds of stars in the dwarfs affected by the EFE are consistently lower, as predicted. For example, the relatively isolated dwarf satellite of Andromeda known as And XXVIII has a velocity dispersion of 5 km/s, while its near twin And XVII (which has very nearly the same luminosity and size) is affected by the EFE and consequently has a velocity dispersion of only 3 km/s.

The case of dwarf satellites is the most obvious place where the EFE occurs. In principle, it applies everywhere all the time. It is most obvious in dwarf satellites because the external field can be comparable to or even greater than the internal field. In principle, the EFE also matters even when smaller than the internal field, albeit only a little bit: the extra acceleration causes an object to be not quite as deep in the MOND regime.
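To put rough numbers on "not quite as deep in the MOND regime," here is a minimal sketch, not the calculation used in any of the work described here. It assumes the "simple" interpolation function mu(x) = x/(1+x) and a one-dimensional toy in which the internal and external fields simply add along a line; both are illustrative choices, and the function names are hypothetical.

```python
import math

A0 = 1.2e-10  # MOND acceleration scale in m/s^2 (value quoted in the post)


def mond_total(g_newton):
    """Total acceleration for the assumed interpolation mu(x) = x / (1 + x).

    Solves mu(g / A0) * g = g_newton, which is the quadratic
    g**2 - g_newton * g - g_newton * A0 = 0.
    """
    return 0.5 * (g_newton + math.sqrt(g_newton**2 + 4.0 * g_newton * A0))


def internal_acceleration(g_newton_int, g_ext=0.0):
    """Internal acceleration in a 1-D toy model where the fields add.

    g_ext is the actual external field; its Newtonian source strength
    is recovered from mu(g_ext / A0) * g_ext.
    """
    g_newton_ext = g_ext**2 / (A0 + g_ext)
    return mond_total(g_newton_int + g_newton_ext) - mond_total(g_newton_ext)


if __name__ == "__main__":
    g_n = 0.01 * A0  # internal Newtonian acceleration, deep in the MOND regime
    for e in (0.0, 0.03, 0.3):  # external field in units of A0
        g = internal_acceleration(g_n, e * A0)
        print(f"e = {e:4.2f}: boost over Newton = {g / g_n:5.2f}")
```

In this toy the boost over Newton shrinks as the external field grows but never drops below one, which is the qualitative behavior described above: a satellite near a host gets less of a boost than its isolated twin.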

The EFE from large scale structure

Even in the depths of intergalactic space, there is some non-zero acceleration due to everything else in the universe. This is very reminiscent of Mach’s Principle, which Einstein reputedly struggled hard to incorporate into General Relativity. I’m not going to solve that in a blog post, but note that MOND is much more in the spirit of Mach and Lorentz and Einstein than its detractors generally seem to presume.

Here I describe the apparent detection of the subtle effect of a small but non-zero background acceleration field. This is very different from the case of dwarf satellites where the EFE can exceed the internal field. It is just a small tweak to the dominant internal fields of very nearly isolated island universes. It’s like the lapping of waves on their shores: hardly relevant to the existence of the island, but a pleasant feature as you walk along the beach.

The universe has structure; there are places with lots of galaxies (groups, clusters, walls, sheets) and other places with very few (voids). This large scale structure should impose a low-level but non-zero acceleration field that should vary in amplitude from place to place and affect all galaxies in their outskirts. For this reason, we do not expect rotation curves to remain flat forever; even in MOND, there comes an over-under point where the gravity of everything else takes over from any individual object. A test particle at the see-saw balance point between the Milky Way and Andromeda may not know which galaxy to call daddy, but it sure knows they’re both there. The background acceleration field matters to such diverse subjects as groups of galaxies and Lyman alpha absorbers at high redshift.

As an historical aside, Lyman alpha absorbers at high redshift were initially found to deviate from MOND by many orders of magnitude. That was without the EFE. With the EFE, the discrepancy is much smaller, but persists. The amplitude of the EFE at high redshift is very uncertain. I expect it is higher in MOND than estimated because structure forms fast in MOND; this might suffice to solve the problem. Whether or not this is the case, it makes a good example of how a simple calculation can make MOND seem way off when it isn’t. If I had a dollar for every time I’ve seen that happen, I could fly first class.

I made an early estimate of the average intergalactic acceleration field, finding the typical environmental acceleration e_env to be about 2% of a0 (e_env ~ 2.6 x 10^-12 m/s/s, see just before eq. 31). This is highly uncertain and should be location dependent, differing a lot from voids to richer environments. It is hard to find systems that probe much below 10% of a0, and the effect it would cause on the average (non-satellite) galaxy is rather subtle, so I have mostly neglected this background acceleration as, well, pretty negligible.

This changed recently thanks to Kyu-Hyun Chae and Harry Desmond. We met at a conference in Bonn a year ago September. (Remember travel? I used to complain about how much travel work involved. Now I miss it – especially as experience demonstrates that some things really do require in-person interaction.) Kyu thought we should be able to tease out the EFE from SPARC data in a statistical way, and Harry offered to make a map of the environmental acceleration based on the locations of known galaxies. This is a distinct improvement over the crude average of my ancient first estimate as it specifies the EFE that ought to occur at the location of each individual galaxy. The results of this collaboration were recently published open-access in the Astrophysical Journal.

This did not come easily. I think I mentioned that the predicted effect is subtle. We’re no longer talking about the effect of a big host on a tiny dwarf up close to it. We’re talking about the background of everything on giant galaxies. Space is incomprehensibly vast, so every galaxy is far, far away, and the expected effect is small. So my first reaction was “Sure. Great idea. No way can we do this with current data.” I am pleased to report that I was wrong: with lots of hard work, perseverance, and the power of Bayesian statistics, we have obtained a positive detection of the EFE.

One reason for my initial skepticism was the importance of data quality. The rotation curves in SPARC are a heterogeneous lot, being the accumulated work of an entire community of radio astronomers over the course of several decades. Some galaxies are bright and their data stupendous, others… not so much. Having started myself working on low surface brightness galaxies – the least stupendous of them all – and having spent much of the past quarter century working long and hard to improve the data, I tend to be rather skeptical of what can be accomplished.

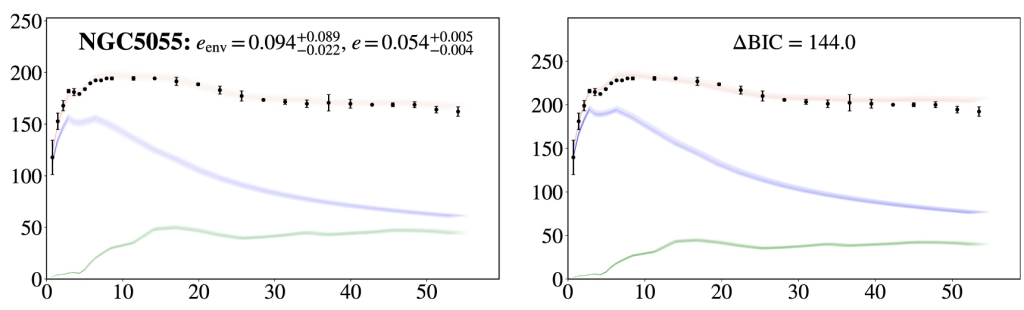

An example of a galaxy with good data is NGC 5055 (aka M63, aka the Sunflower galaxy, pictured atop as viewed by the Hubble Space Telescope). NGC 5055 happens to reside in a relatively high acceleration environment for a spiral, with e_env ~ 9% of a0. For comparison, the acceleration at the last measured point of its rotation curve is about 15% of a0. So they’re within a factor of two, which is pretty much the strongest effect in the whole sample. This additional bit of acceleration means NGC 5055 is not quite as deep in the MOND regime as it would be all by its lonesome, with the net effect that the rotation curve is predicted to decline a little bit faster than it would in the isolated case, as you can see in the figure below. See that? Or is it too subtle? I think I mentioned the effect was pretty subtle.

That this case works well is encouraging. I like to start with a good case: if you can’t see what you’re looking for in the best of the data, stop. But I still didn’t hold out much hope for the rest of the sample. Then Kyu showed that the most isolated galaxies – those subject to the lowest environmental accelerations – showed no effect. That sounds boring, but null results are important. It could happen that the environmental acceleration was a promiscuous free parameter that appears to improve a fit without really adding any value. That it declined to do that in cases where it shouldn’t was intriguing. The galaxies in the most extreme environments show an effect when they should, but don’t when they shouldn’t.

Statistical detection of the EFE

Statistics become useful for interpreting the entirety of the large sample of galaxies. Because of the variability in data quality, we knew some cases would go astray. But we only need to know if the fit for any galaxy is improved relative to the case where the EFE is neglected, so each case sets its own standard. This relative measure is more robust than analyses that require an assessment of the absolute fit quality. All we’re really asking the data is whether the presence of an EFE helps. To my initial and ongoing amazement, it does.

The figure above shows the amplitude of the EFE that best fits each rotation curve along the x-axis. The median is 5% of a0. This is non-zero at 4.7σ, and our detection of the EFE is comparable in quality to that of the Baryon Acoustic Oscillation or the accelerated expansion of the universe when these were first accepted. Of course, these were widely anticipated effects, while the EFE is expected only in MOND. Personally, I think it is a mistake to obsess over the number of σ, which is not as robust as people like to think. I am more impressed that the peak of the color map (the darkest color in the data density map above) is positive definite and clearly non-zero.

Taken together, the data prefer a small but clearly non-zero EFE. That’s a statistical statement for the whole sample. Of course, the amplitude (e) of the EFE inferred for individual galaxies is uncertain, and is occasionally negative. This is unphysical: it shouldn’t happen. Nevertheless, it is statistically expected given the amount of uncertainty in the data: for error bars this size, some of the data should spill over to e < 0.

I didn’t initially think we could detect the EFE in this way because I expected that the error bars would wash out the effect. That is, I expected the colored blob above would be smeared out enough that the peak would encompass zero. That’s not what happened, me of little faith. I am also encouraged that the distribution skews positive: the error bars scatter points in both directions, and wind up positive more often than negative. That’s an indication that they started from an underlying distribution centered on e > 0, not e = 0.
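The logic of the last few paragraphs can be illustrated with a toy Monte Carlo. This is only a sketch under loud assumptions: every galaxy shares one true e, the errors are Gaussian with a single hypothetical width, and the sample size is made up. The real analysis is Bayesian with per-galaxy posteriors; none of the numbers below other than the 5% median come from it.

```python
import random
import statistics


def simulate_fits(true_e, sigma, n_galaxies, seed=0):
    """Draw one noisy 'best-fit' e per galaxy around a common true value."""
    rng = random.Random(seed)
    return [rng.gauss(true_e, sigma) for _ in range(n_galaxies)]


def summarize(fits):
    """Return the median and the fraction of fits that landed below zero."""
    negative = sum(1 for e in fits if e < 0.0)
    return statistics.median(fits), negative / len(fits)


if __name__ == "__main__":
    # true_e = 0.05 echoes the median quoted above; sigma and n are hypothetical.
    for label, true_e in (("e > 0", 0.05), ("e = 0", 0.0)):
        median, frac_neg = summarize(simulate_fits(true_e, sigma=0.05, n_galaxies=150))
        print(f"{label}: median = {median:+.3f}, fraction negative = {frac_neg:.2f}")
```

With an underlying e > 0, some individual fits still scatter to unphysical negative values, but well under half of them do; with an underlying e = 0, about half would. That asymmetry is what the skew in the real data is hinting at.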

The y-axis in the figure above is the estimate of the environmental acceleration based on the 2M++ galaxy catalog. This is entirely independent of the best fit e from rotation curves. It is the expected EFE from the distribution of mass that we know about. The median environmental EFE found in this way is 3% of a0. This is pretty close to the 2% I estimated over 20 years ago. Given the uncertainties, it is quite compatible with the median of 5% found from the rotation curve fits.

In an ideal world where all quantities are perfectly known, there would be a correlation between the external field inferred from the best fit to the rotation curves and that of the environment predicted by large scale structure. We are nowhere near to that ideal. I can conceive of improving both measurements, but I find it hard to imagine getting to the point where we can see a correlation between e and e_env. The data quality required on both fronts would be stunning.

Then again, I never thought we could get this far, so I am game to give it a go.

Very Cool! One suggestion from a communication standpoint: I initially missed that in figure 5 (e-env vs. e) the horizontal scale is 10X larger. Perhaps an additional chart with the same scaling on both axes might emphasize the impressive match.

Question: what is the magnitude of the error bars on the NGC 5055 data points? 1 sigma? I share your feeling that the sigma levels are often misleading (people assume everything is normally distributed, which is likely not the case here), but the sigma level is inherently distribution agnostic, so it is still useful.

Final question, Obviously your e-env estimates would be based on baryonic evidence, assuming DM did not exist. This suggests to me, given the giant DM halos that are usually assumed to exist that in the Lambda CDM models that the intergalactic e-env would be a lot higher. Is that correct?

Thanks!

How to best calculate the environmental acceleration is an important and still-open question. One has to have MOND or DM halos, and both give a boost over Newton with stars only. At a first cut, it is not obvious which is higher. My intuition says that MOND will have a smaller local effect (less mass) but also have a longer reach thanks to the 1/r dependence. Regardless, this is one place I think we need to improve, and should be able to make fairly quick progress.

For NGC 5055, the formal measurement & 1 sigma uncertainty is e = 0.054 ± 0.005 and e_env = 0.102 +0.086/-0.021. That’s 1.7 sigma different, but I really don’t trust the formal uncertainty in the environmental estimate for the reasons you raise.

Hi. Stripping away the interpretation somewhat, would it be fair to say that what you’ve shown is that rotation curves fall off a little more in the outermost parts than no-efe mond would predict?

No, I don’t think it would be fair to say that. I’m not usually a stickler for this sort of thing, but words matter, and the phrasing you use sets a false standard. There is no such thing as MOND without an EFE. Nor is it always that rotation curves fall off a little faster, since some of them are still rising at the last measured point, nor do all galaxies show the same effect – the rotation curves of isolated galaxies do not fall off faster, just those where there is enough of an EFE to detect.

I’m happy to separate what we know empirically from what is predicted theoretically. Hence empirical relations like the radial acceleration relation (RAR) and the baryonic Tully-Fisher relation (BTFR). It is a logical possibility that these relations might exist without necessarily being identical to what is predicted by MOND. So the first task is to establish what the empirical relations are; the second is to apply them as a test of theory. So far, they look exactly like what MOND predicts, so in practice it is challenging to “strip away” the obvious interpretation. For example, I bent over backwards to do this and be completely empirical in the 2016 RAR paper, but one person kept complaining that the result looked like MOND but MOND couldn’t possibly be right, so, by extension and logical fallacy, the data couldn’t possibly be right. He seemed incapable of separating theory from data. Using MOND as you do here aggravates that attitude.

So you might rephrase what you say to be that *some* rotation curves show a bit less of a mass discrepancy than the empirical RAR predicts. Even that is a bit fraught, as the low acceleration slope of the RAR could in principle be anything, and not the 1/2 of perfectly flat rotation curves. But yes, what we detect is a skew of some of the data to the low g_obs side of the RAR with slope fixed to 1/2.

Interesting work. Many people miss one of the main points of MOND, which is not that modified gravity is an alternative explanation to dark matter, or vice versa, but rather that there are things natural in MOND but very unnatural in dark matter. The interpretation of observations involving the EFE is one of them. Another is the ability to predict dynamics from visible mass, which is a much stronger statement than explaining a flat rotation curve.

Do you think that wide binaries in the outskirts of the Milky Way (where the EFE should be low enough that MOND effects are observable) will provide the definitive test for MOND?

One would hope. But there have already been so many definitive tests, that I am too jaded to believe there is any one that will earn recognition as such.

Assuming that one finds that wide binaries have non-Keplerian orbits, I would be surprised if anyone could come up with a non-MOND explanation which is even just a tiny bit credible.

How far off is this? Some claim to have seen evidence already, but more sceptical people (even within the MOND camp) think that it is still too early. Will Gaia be enough?

On the other hand, it should be possible to already identify systems for which one would expect MOND effects to be visible, and if none are observed, then I would be surprised if anyone could come up with a MOND explanation which is even just a tiny bit credible.

The solar system is around 1.8 a0, so one has to look pretty far out to be comfortably in the MOND regime. I don’t know if one will be able to do that with Gaia data or not. I suspect in principle yes, in practice, not clearly enough for a clean test.

The deeper issue is what we consider to be credible. There are some galaxy formation simulations that claim to explain the observed MOND phenomenology. I do not find these to be satisfactory. You will not be challenged to find people with lower standards who assert that these models work just fine. Some have gone as far as to call such behavior “natural.” That’s patently absurd: the very definition of incredible.

What I have seen, over and over again, is that any explanation that gives the “right” (comfortable) answer is accepted uncritically. I see no reason why it would be different for binaries. We’re already well past the regime of accepting incredible explanations and on into the regime of science as social construct.

> I would be surprised if anyone could come up with a non-MOND explanation which is even just a tiny bit credible.

To chime in on this bit. I’m afraid there already are non-MOND explanations for observations like this. I remember reading a response paper to Pittordis and Sutherland by Clarke called “The distribution of relative proper motions of wide binaries in GAIA DR2: MOND or multiplicity?”. Their argument is essentially that the projected on-sky velocity (which is what is almost always measured) can also be caused by unbound pairs or “hidden triples”.

The first is a form of denial and is (very) hard to disprove. It isn’t *really* a wide binary, it just looks that way because the two stars were born in the same region of space and therefore just happen to have similar on-sky velocities. Or they might be chance projections of nearby stars that just happen to be making a close pass by one another. The only way to eliminate this possibility would be to get the complete 3D velocity and full determination of the orbits. Given that the orbital periods involved in wide binaries could be on the order of tens of thousands of years this probably won’t be possible in practice.

The second argues that the binary system is actually a trinary system. One of the “stars” would really be an unresolved close binary being orbited by the other widely separated star. This is very similar to dark matter logic. This is also practically unfalsifiable because we can always specify that the supposed hidden companion is a star of the same type (which wouldn’t show up spectroscopically) on a ridiculously tight orbit. Or for that matter that it is a non-accreting black hole on a wide enough (but still tight) orbit that it couldn’t be detected using the radial velocity method.

Throw in some ridiculously high orbital eccentricities and matching even clear MOND signals in the outer galaxy becomes possible within Newtonian dynamics.

Excellent examples! Perhaps we should name those unseen components Vulcans.

Oh, the irony. That’s a really apt name!

For those not familiar with the history of astronomy, about 150 years ago, a hypothetical planet—dubbed Vulcan because of its proximity to the Sun—was postulated to orbit closer to the Sun than Mercury in order to explain an unexplained feature in Mercury’s orbit (precession of perihelion to an extent larger than could be understood by the effects of the other planets, possible oblateness of the Sun, and so on). The first success (a “postdiction”) of general relativity was explaining that effect. Thus, in this case, “modified gravity” won over “dark matter”. (In the case of Neptune, “dark matter” was the answer, though I don’t think that anyone had proposed a “modified gravity” explanation for the anomalous motion of Uranus.)

A while back, I wrote a review of an enjoyable account of the search for Vulcan.

I was pleased to see this in my news feed a couple of days after reading your blog. I had hoped that it would help me get a better understanding of EFE. Instead –

From sci-news.com

“I was skeptical by the results at first because the external field effect on rotation curves is expected to be very tiny,” Dr. McGaugh said.

“We spent months checking various systematics. In the end, it became clear we had a real, solid detection.”

“Skepticism is part of the scientific process and understands the reluctance of many scientists to consider MOND as a possibility,” she added.

I totally empathise ;(

Lindsay, Stacy, Tracy, Lynn, Shannon, Lee, Ashley, …

Apologies for leaving out Morgan, Kelly, and Reese.

Why are you posting (what seems like) a random list of first names?

The list of names is not really random. Phillip is making, let’s say, fun of the sci-news site, which treated the name Stacy as a woman’s name. All the names that Phillip posted are men’s names.

All are commonly used for both men and women.

How could I have forgotten Leslie? I was reminded of the name because musician Leslie West just died.

Of course, if we add short forms like Chris, there are many more possibilities.

Note also that the German name Kirsten and the English name Shirley both used to be exclusively male names; now they are (almost) exclusively female.

And names like Madison were originally surnames (and even have “son” in them, though used for women). Then there are even more obvious surnames such as McKenzie used as given names.

Madison became a first name due to the movie Splash, in which Daryl Hannah takes the name “Madison” from Madison Ave.

Hmm. Madison Bumgarner and Madison Cawthorn are both males from North Carolina, born post-1984, which suggests that either their parents named their boys after a fictional mermaid or there is another derivation (perhaps regional) for the usage. In fact, the male given name pre-dates the movie. Splash! did apparently give rise to the widespread female usage. See:

https://www.babynameshub.com/boy-names/madison.html

And as I can see, that article has, in the comments, exactly the attitude that I expected. Just to quote – “When TeVeS went, MOND went with it. So I’m not sure why you bring it up as if it would work – this was yesterday’s ideas and it didn’t work.”

To be fair, one can find practically anything in online comments. In any case, implying that ruling out TeVeS rules out MOND belies a huge ignorance.

Well yes, you can find anything in the online comments.

But I find it important also how vocal they are. Currently, the commenter that expressed that opinion is the most vocal on the page (the most comments, the longest comments) and judging by his tone, I’m not sure you can engage in a constructive discussion with him. He made his decision and he deliberately dismisses any new information. I mean, he doesn’t even acknowledge the results from the paper – he just dismisses them as nonsensical because MoND was ruled out years ago and because in “the old cottage industry of trying to predict spiral galaxy disk rotation […] LCDM does better than competitors since 2015”.

How can you approach a civil discussion with commenters like this, and how can you ensure that your points remain visible amid all the Gish gallop?

I recognize the commentator from other blogs. 😦

I doubt that one can convince him, but one might convince some spectators that he doesn’t have all the answers.

This attitude is unfortunately common, and I’m getting impatient with it. I stipulated in https://arxiv.org/abs/1404.7525 the criteria by which I would change my mind, and challenged others to do the same. If this person cannot or will not elaborate criteria that would lead him to abandon dark matter – to decide it is wrong, never mind what else might be right – then s/he is engaging in an act of faith, not science.

Of course, before we can agree on an interpretation, we have to agree on the facts. The assertion that LCDM has done better at predicting rotation curves “since 2015” is the opposite of true, as I explicitly showed, again, in https://arxiv.org/abs/2004.14402. But I can’t help being morbidly curious – what paper do they think has this success?

Regarding the paper he uses – difficult to say as he posted only a contracted link to, I believe, astrobites site.

And I assume the page address must include dark matter-halo as the contracted link ends in “er-halo”.

However, searching on astrobites I could find several articles with dark-matter-halo in the title from 2015 and none of them claimed superiority over competitors. In fact, some of them were attempts to save LCDM…

Thank you for this post. In the figure for NGC 5055, the vertical axes showing the rotational velocity seem to be wrong. The data points in the two panels appear to be at the same heights but the left panel runs from 0 to 250 km/s whereas the right panel runs from 0 to 300 km/s. I assume that the observed velocities have not changed – or am I missing something?

Sharp eyes! They do not deceive you. One of the details of Bayesian analysis is the treatment of nuisance parameters – in this case, the inclination of the galaxy. The inclination is measured independently, but only to some accuracy. So the fit is permitted to adjust the inclination within the bounds of its uncertainty, which leads to corresponding changes in velocity [which varies as 1/sin(i)]. One of the reasons the fit with the EFE is so much better in this galaxy is that there is basically no need to adjust the inclination. Without the EFE, the fit is happier pushing it pretty much as far as it will go. The mathematical “price” for exercising this flexibility is one of the things that drives the difference in the assessment of fit quality.
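For readers who want to see how a change in inclination moves every point on the velocity axis, here is a small sketch of the 1/sin(i) scaling. The function names and the example angles are hypothetical; they are not the measured or fitted inclinations of NGC 5055.

```python
import math


def deprojected_velocity(v_los, inclination_deg):
    """Rotation speed implied by a line-of-sight speed at a given inclination."""
    return v_los / math.sin(math.radians(inclination_deg))


def rescale_factor(i_old_deg, i_new_deg):
    """Factor applied to every rotation velocity when the inclination changes."""
    return math.sin(math.radians(i_old_deg)) / math.sin(math.radians(i_new_deg))


if __name__ == "__main__":
    # Hypothetical example: nudging the inclination from 55 to 50 degrees.
    factor = rescale_factor(55.0, 50.0)
    print(f"all velocities scale by {factor:.3f}")
```

Because the same factor applies to every data point, a fit that shifts the inclination stretches the whole rotation curve vertically, which is why two panels can show the same data at different heights.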

It seems to me that if EFE holds for stars as a whole, then it should hold for all the components of a star (e.g. Hydrogen atoms) individually. Stars are gravitationally bound systems of hot gasses (mostly plasma), just as a galaxy is a gravitationally bound system of “hot” stars, stars with center of mass kinetic energy. If that is the case, then the outer layers of a star will be in the fully Newtonian regime due to the gravitational acceleration from the inner layers. As one progresses further inside the star, more and more of the matter will be in spherical shells outside that do not cause gravitational acceleration due to the Shell Theorem. Thus, MOND deviations from Newtonian mechanics will really only be happening to the matter at the center of the star. This would seem to indicate that the current value of a0 might be wrong due to the assumption that the entire mass of stars in the outskirts of galaxies is affected by MOND corrections.

It also seems that only gravitational acceleration can be considered in MOND calculations, as all particles in a star are undergoing very high accelerations due to the hot, plasma nature of stars. Basically charged particles in very strong electromagnetic fields. This would seem to rule out the inertial interpretation of MOND and allow only a purely gravitational MOND effect.

Perhaps all of this has already been discussed in published papers or previous posts on this blog, but I am not that familiar with the literature on this subject. Any pointers would be welcome.

OK, I found an explanation at the bottom of this page:

http://astroweb.case.edu/ssm/mond/EFE.html

Under the title “Not the Internal Field Effect.” Essentially, the external field effect goes from large systems to smaller sub-systems, but not the reverse. This is justified by quoting the weak equivalence principle, “the motion of a particle – be it a billiard ball or a solar system – is independent of its internal structure or composition.” I would feel better about this if Einstein didn’t use the weak equivalence principle to come up with GR, which disagrees with MOND. However, there is a consistency to using this kind of rule, and MOND is an empirical theory not a fundamental one, so question answered for now.

And, of course, observationally, GR is wrong on galactic scales unless you add invisible matter all over the place.

“And, of course, observationally, GR is wrong on galactic scales unless you add invisible matter all over the place.”

As one can tell from my comments here and elsewhere, I am not unsympathetic to MOND. Not at all. But I think that statements like that above really turn people away from MOND. GR says nothing about sources. GR knows nothing about whether matter is dark or not. It knows nothing about baryons. Yes, GR would be wrong without dark matter on galactic scales, but statements like that above make it sound like dark matter is some sort of deus ex machina. It might be abused as such in some cases, but dark matter per se is not some sort of “epicycle”. The alternative is to assume that at the time GR was formulated, astronomers must have learned about all sorts of matter via non-gravitational means.

Some people go further and claim that GR has already been falsified, and dark matter is some sort of ad-hoc fix. Anyone familiar with the history of astronomy knows that on many occasions various types of matter was first discovered via gravitational interactions, i.e. as dark matter. Most were later detected in some other way. True, most of those examples involved baryonic matter, but, again, GR knows nothing about baryons.

MOND has so many strengths that I am puzzled why some MOND supporters stoop to strawman arguments, which hurt rather than help their case.


> Anyone familiar with the history of astronomy knows that on many occasions various types of matter were first discovered via gravitational interactions, i.e. as dark matter. Most were later detected in some other way.

Neptune falls into that category. So do exoplanets discovered using microlensing, radial velocity or timing. I wouldn’t necessarily call that “many”. What other examples did you have in mind?


Unseen companions in multiple-star systems, for example. Or the classic: dark matter in galaxy clusters. Much of this has now been detected (hot gas, etc.). (Interestingly, it could just about all be baryonic.)


I don’t know if anyone is interested, but I posted on the arXiv (arXiv:2009.14613) the rudiments of a quantum theory of gravity that predicts MOND and the EFE from a simple model of the quantum physics of neutrinos. I cannot predict the value of the critical acceleration in this theory, but that the critical parameter is an acceleration is completely clear.


Apass – I have encountered this behavior often enough I wrote about it a long time ago: http://astroweb.case.edu/ssm/mond/cosmicblackshirts.html

Ted: Thanks for finding the internal field effect on the website you note. I wrote it to answer exactly that question, though I sometimes forget it is there. It is a challenging concept to wrap one’s head around. But it basically comes down to the over-under point where an object must be treated as an ensemble of quantum mechanical atoms or can be treated as a macroscopic billiard ball. In MOND, that over-under point is a0. For the solar system, that’s about 7000 AU out – everything inside that is just part of the billiard ball so far as the orbit of the center of mass of the solar system in the Galaxy is concerned.
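As a rough check on the 7000 AU figure, here is a minimal sketch that finds the radius at which the Sun's Newtonian acceleration GM/r^2 falls to a0. The constants are standard reference values supplied for illustration (a0 is the value quoted in the post), and the function name is hypothetical; this is a back-of-the-envelope estimate, not a MOND calculation.

```python
import math

# Standard reference values (SI units); assumptions supplied for this sketch.
GM_SUN = 1.32712440018e20   # gravitational parameter of the Sun, m^3/s^2
A0 = 1.2e-10                # MOND acceleration scale a0, m/s^2
AU = 1.495978707e11         # astronomical unit, m

def mond_transition_radius(gm, a0=A0):
    """Radius (m) at which the Newtonian acceleration gm / r^2 equals a0."""
    return math.sqrt(gm / a0)

r_au = mond_transition_radius(GM_SUN) / AU
print(f"Newtonian acceleration drops to a0 at about {r_au:.0f} AU")
```

The result comes out close to 7000 AU, consistent with the number quoted above.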

Phillip: I’m not aware of anyone saying that dark matter in itself is epicyclic. It is the explanations for MOND-like behavior in terms of GR + dark matter + adiabatic compression + feedback (+feedback + feedback +feedback etc. because there are multiple kinds of feedback each with a multiplicity of implementations) that becomes epicyclic. We just keep adding more and different variations of feedback until we get the answer we want.


In his article, Merritt claims that the standard model of cosmology “has been falsified many times since the 1960s”. Back then there was no adiabatic compression, feedback, or even any detailed idea about structure formation. Rotation curves are flat, ergo GR is wrong. That’s the tenor.

Again, those who have looked at it in detail know that the narrative isn’t that simple, but it is often presented that way.

Yes, Torbjörn Larsson and other internet commentators might be similarly dismissive of MOND, but he is not championed by mainstream cosmologists.


Phillip: Actually, I’m agnostic on MOND vs GR. Really we’re talking MOND vs Dark Matter. Nobody has solved GR exactly in order to get the motion of stars at the outskirts of galaxies. It’s really Newtonian Mechanics and Newtonian gravity that have failed, which is not a novel finding. SR, GR and Quantum Mechanics showed Newtonian limitations long ago. Who knows if some clever soul might be able to tease GR corrections out of the pure theory that would explain rotation curves, lensing and such without either DM or MOND. Perhaps something to do with the origin of inertia in a Mach’s principle sense?

I feel about the same about dark matter and the lack of an Internal Field Effect (now that I know about it). They are both ad-hoc additions to the theory to overcome somewhat obvious problems that exist without them. They can both be utilized in a fairly rigorous mathematical context, and they both do solve the problems they are intended to solve. As both you and our host point out they are both based in a tradition of previous science that has resulted in positive advancements in knowledge.

The whole epicycle analogy comes from DM being basically a fitting function with infinite degrees of freedom. The existence of the LCDM model, that tries to show how large structures evolve in the universe, is basically an acknowledgment of that and an attempt to add constraints. Which many times works quite well and sometimes seems to get stretched quite a bit via “feedback” to solve outlier cases, as our host mentions.

Of course, MOND is also a fitting function, but it has only one degree of freedom in its simplest form. This is appealing in an Occam’s Razor sort of way, but, unfortunately, it has already been shown to disagree with certain large-scale phenomena. So various relativistic forms of MOND (shouldn’t they call them MOGRD, or maybe MORD?) are being worked on that also fit the CMB and anomalous lensing data.

So, it’s somewhat natural, emotionally, to feel that MOND’s relative simplicity is superior to the unobservable complexities of DM where the entire galaxy of stars and planets is really just some tiny component of a much larger structure we cannot see. Of course I say that as a part of a biosphere that is a tiny film on top of a huge planet, most of which we can only infer indirectly via seismic waves and gravity, with a few odd features like volcanoes and magnetic fields that require us to posit complex structures beneath our feet that we cannot see.


I’ve skimmed the paper a bit more deeply and there is something that bothers me a bit. The paper says: “We perform this calculation within the standard ΛCDM context”. My problem is: how can you be sure that what you see is the true signature of the EFE and not some artifact resulting from, maybe, too large an assumed mass in the environment (i.e. the dark matter halos)?

I’m tempted to think that at large separation, MoND and DM should yield similar effects, as DM is added as needed to account for the difference between seen and expected behavior (let’s say so as to mimic MoND)?

And one additional question not directly related to this current paper but triggered by my question. When you said that you were able to predict the first-to-second peak ratio without DM – the measured value is based on the Planck data? But is the Planck data processed to eliminate the foreground sources assuming a CDM model? If yes – isn’t it the same problem with the estimation of the effect of the environment that bothers me in this paper? Does a MoNDian assumption change something in the map (i.e. since the gravitational wells extend further, their effect on the CMB will be larger and considering them would result in, maybe, smaller temperature variations)?

Again – if yes, isn’t it a bit of circular reasoning to construct the CMB map using a LCDM model and then use this map to derive the free parameters for the LCDM model? Am I wrong and the CMB map is agnostic to the distribution of the mass in the universe?


“And one additional question not directly related to this current paper but triggered by my question. When you said that you were able to predict the first-to-second peak ratio without DM – the measured value is based on the Planck data?”

This prediction was long before Planck and is of historical interest. A no-DM prediction (nothing explicitly MOND about it) got the ratio of the first two peaks right. The CDM flavour of the month didn’t. But now we have detected 7 peaks and standard ΛCDM fits them all well. Yes, one fits the parameters to the data. That’s a normal part of science. But the whole point of the CMB missions is to determine the parameters. Many people made predictions of what the power spectrum would look like for various combinations of parameters, even before the first peak was detected. And, no, one cannot fit an arbitrary power spectrum with the given parameters. The interesting thing is that no additional parameters were necessary in order to fit the CMB data (though the baryonic density is higher than what was expected).

Even some MOND supporters note that standard cosmology fits the CMB really well.

“But is the Planck data processed to eliminate the foreground sources assuming a CDM model?”

I am not a CMB guy, but I’m pretty sure that the answer is “no”.

“Again – if yes, isn’t it a bit of circular reasoning to construct the CMB map using a LCDM model and then use this map to derive the free parameters for the LCDM model?”

How else would you do it? Much science works like that: assume a model, fit some parameters from the data. What would be circular would be assuming parameters of the model then fitting them from the data. The map is essentially observed, and one interprets it in the context of a theory. ΛCDM isn’t the only game in town; there are lots of papers trying to understand the CMB as produced by topological defects, but that model has been ruled out since it can’t fit the data.

“Am I wrong and the CMB map is agnostic to the distribution of the mass in the universe?”

False dichotomy. The elimination of foreground sources doesn’t depend on a model of structure formation.


I would like to improve our estimation of the EFE from the environment. I have some ideas as to how to do this, but this is what we can do now.

The prediction of the CMB peak ratio is a theoretical entity that is independent of any data set. Planck is merely the latest to corroborate it.

The chief issue in data processing is the removal of non-cosmological foregrounds (e.g., dust in the Milky Way, synchrotron radiation, etc.). This can in principle be done right empirically without leaning on the model in a circular way, and I think and hope the situation is OK where the first few peaks occur. You do need to worry about the circular issue you raise at higher L (finer resolutions) where there is a contribution to the fluctuations from gravitational lensing. That depends on the rate of structure formation. One of the ancillary predictions of MOND in this context is that structure will form faster so there will be more of this than is built into LCDM. I think that is part of what is driving the tension with H0: as we go to higher L, we get more power from lensing than we expect in LCDM, so we have to turn up the amount of power (mostly through sigma8 & Omega_m) to produce that. Omega_m and H0 covary, so H0 gets driven smaller (it also helps to make the universe a tad older).

So yes – you are right to worry about the underlying assumptions informing the answer in a circular way. That’s why we continue to need independent measurements of these quantities. But I don’t think this is a particular concern for the first two peaks.

Put another way: my simple ansatz got 1:2 right and the excess power at L > 1000 right but 2:3 wrong.


I think Phillip and I just said the same thing.

I do take one exception: it wasn’t merely the LCDM flavor du jour. It was LCDM. What we call LCDM now is not really the same as LCDM then – it has been tilted and spiked with extra baryons. Fitting is fine, but only within the range constrained by other parameters – we wouldn’t accept H0=967 as a consistent answer just because that is the best fit.

We did know the parameters of LCDM well enough in 1999 to predict the range of possibilities. The observed second peak fell significantly outside that range. It came as a direct shock to everyone who was paying attention at the time, and it isn’t just a matter of parameter fitting. It is a matter of allowing ourselves to turn the knob just as far as we need, and other constraints be damned. In this case, we fit our way out of the hole by allowing the baryon density to exceed what had been the hard bounds on it from BBN.

I could accept that we were wrong about BBN by a little, but *at the time* this was nearly a factor of two on something that had tiny error bars. Omega_b*h^2 = 0.0125 was Known until suddenly it was 0.0225. Things change by more than their error bars in cosmology all the time, so maybe that is all that happened. On the other hand, this looks like a classic case of confirmation bias, as D/H only got the “right” answer after the CMB fits said what it was. Saying it is all fine in retrospect is just gaslighting ourselves.

The test I proposed hinges on believing BBN as the constraint it was thought to be at the time. If that was wrong, it ain’t my fault. I reviewed some aspect of this in https://arxiv.org/abs/0707.3795. I note also that in the no-CDM model, the 1:2 peak ratio runs to basically the same value for any plausible baryon density, while it varies greatly in LCDM. So even now it is a remarkable coincidence that LCDM fits have to be tuned to match the one unique value that falls out of no-CDM when they could have done a much larger variety of things.


Two ideas: no dark matter and higher baryon density. Both fit the CMB data. More baryons was unexpected. On the other hand, one could argue that there is evidence for dark matter independent of things which can be explained by MOND, so no dark matter at all is also a leap of faith to some extent. But the situation was unclear. Two theories, both fit the data. The interesting thing is that they both make a prediction: the height of the third peak. The no-dark-matter scenario can’t fit that, while ΛCDM does without any additional tweaking.

To get back to my criticism of Merritt, he devotes several pages to this in his recent book. His solution so that MOND works for the third peak: dark matter! After going on and on about why dark matter is bad to save other appearances, it is OK if it is to save MOND appearances. His suggestion: a sterile neutrino. Has never been detected in the lab, is an idea from particle physics, was invented to fit the data, and so on, things which he uses to argue against dark matter in other contexts.

As those interested in MOND know, there are many things on galaxy scales which are much more natural in MOND, predictions have been confirmed, there is only one additional free parameter and various estimates agree. It is really impressive. On the other hand, there are areas where ΛCDM does very well and MOND doesn’t. Don’t take it from me, take it from Bob Sanders, who is one of the main MOND players. The first part of his book about MOND (he has written a couple of others) sings the praises of ΛCDM in cosmology. Credit where it is due. Of course, he also points out the areas where MOND does better. Fair and balanced.

Another of his books is about the black hole at the centre of the Milky Way. Naturally, the black hole itself cannot be seen. Until very recently, the only evidence for it was that the motions of stars could be explained only by assuming dark matter. Of course, practically everyone agrees with the dark-matter explanation here, but the point is that dark matter per se is not always an absurd ad-hoc explanation.


When you say “assuming dark matter” you mean “assuming dark matter at the right unobservable “temperature” and in the right unobservable “density” spread about in just the right “unobservable” locations to work for the purposes of curve fitting and saving General Relativity from refutation”. You can’t claim General Relativity as proof while at the same time holding the theory up using “dark matter” crutches.

The hypothesis of sterile neutrinos and the hypothesis of dark matter have exactly equivalent philosophical status: They both work for the purpose for which they were introduced but there is absolutely no independent evidence for their existence, despite a monumental effort from the experimentalists to look for them (particularly CDM).

What is so annoying about avid dark matter proponents and supposedly “independent” particle physicists who claim never to have heard of MOND (and I’m not including you in this, because at least you’ve heard of MOND and are actively engaging with the theory’s pluses and minuses) is that they think the cold dark matter hypothesis has already won all battles to become the one accepted scientific theory, allowing school children everywhere to be uncritically institutionally indoctrinated in it. It’s rather like the celebrated Phlogiston theory was for many eighteenth-century chemists and natural philosophers, and that didn’t end well.

Just because you represent science in some sense and you can claim (rightly in my opinion) to be fair minded, that in itself won’t stop the huge consequences for science funding and science education if the dark matter hypothesis is eventually proved wrong, just like the Phlogiston theory was after 100 years.


“When you say “assuming dark matter” you mean “assuming dark matter at the right unobservable “temperature” and in the right unobservable “density” spread about in just the right “unobservable” locations to work for the purposes of curve fitting and saving General Relativity from refutation”. You can’t claim General Relativity as proof while at the same time holding the theory up using “dark matter” crutches.”

A good example of over-the-top rhetoric. Read the context. Are you claiming that MOND explains the dynamics at the centre of the Milky Way?

Note that cosmological dark matter, apart from the idea that it is cold, needs essentially no additional assumptions.

Read up on the difference between the cosmologists’ dark matter, the astronomers’ dark matter, the particle physicists’ dark matter, and the structure-formation dark matter and come back.


“The hypothesis of sterile neutrinos and the hypothesis of dark matter have exactly equivalent philosophical status: They both work for the purpose for which they were introduced but there is absolutely no independent evidence for their existence, despite a monumental effort from the experimentalists to look for them (particularly CDM).”

I agree completely. Of course, sterile neutrinos are a form of dark matter. That is why it is so strange that Merritt trots them out for saving MOND, with none of the criticism of similar dark-matter candidates to save ΛCDM.

“What is so annoying about avid dark matter proponents and supposedly “independent” particle physicists who claim to never have heard of MOND (and I’m not including you in this because at least you’ve heard of MOND and are actively engaging with the theory’s pluses and minuses), is they think the cold dark matter hypothesis has already won all battles to make it the one accepted scientific theory to allow school children everywhere to be uncritically institutionally indoctrinated in it;”

I agree, but it is easy to find MOND proponents claiming that MOND has won all the battles and that GR has been disproven.

No school children are being indoctrinated with ΛCDM. Anyone who knows the history of the current standard model knows that it was something that the proponents had to fight strongly for, based mainly on observations, as before (usually not for good reasons) most believed in some sort of CDM without Λ. By the same token, many cosmologists know exactly how it feels trying to convince the community of a minority opinion: been there, done that.

That is not to say that the consensus might change again, but people have to be convinced. Telling them that they are indoctrinated mindless hidebound defenders of the orthodoxy trapped in a Kuhnian paradigm does not help. It makes the discussion more difficult.

“it’s rather like the celebrated Phlogiston theory was for many eighteenth-century chemists and natural philosophers, and that didn’t end well.”

Historical analogies are not always accurate. At the moment, the situation is unclear. One could just as easily (and wrongly) compare MOND to phlogiston.

“Just because you represent science in some sense and you can claim (rightly in my opinion) to be fair minded, that in itself won’t stop the huge consequences for science funding and science education if the dark matter hypothesis is eventually proved wrong just like the Phlogiston theory was after 100 years”

Most of the results of such funding would still be very useful. All the same, I would like to see more support for MOND. But that means convincing more people that it is right, or at least worth looking into. One can argue that the mainstream folks have it easier because they get funded even if they unfairly criticize MOND, but that’s the way it is.

There are rumours that the 2023 Texas Symposium on Relativistic Astrophysics might be in the States. That would be a good chance to try to organize some sort of constructive event. Yes, have some review talks by the major players on both sides, but maybe also some talks by converts. Have a talk on the problems of MOND and the advantages of ΛCDM by a MOND proponent, and vice versa. Have some discussion meetings with 10–20 people (my experience is that such meetings are very fruitful). Of course, even if it is not in the States one could try to come up with such a programme. (The 2021 Texas Symposium is to be in Prague, assuming that it is not cancelled due to the corona pandemic.)


During the night, someone sent me an email saying that ΛCDM is dead. 🙂 The evidence: MOND predictions have been confirmed. True. But some haven’t (see the CMB discussion here). Some ΛCDM predictions have been confirmed. Some haven’t. It’s not that simple.

By coincidence, yesterday I participated in a video conference with Max Tegmark, who used to do cosmology but now does research into machine learning and so on. His latest project: using machine learning to classify biases in the news. Perhaps something similar could be done elsewhere. 🙂


The email was a submission to the sci.astro.research newsgroup, of which I am one of the moderators. I posted it, then replied with my own post (which, since the group is moderated and I don’t moderate my own posts, might not show up until a day or two). Pretty much the equivalent of Torbjörn Larsson (the commentator at the other website) mentioned above, but from the other side. 🙂



I come from a background in geophysics on the scientific side, but philosophy of science is now my main interest. One has to recognise when there is an “inverse problem” to solve in science: here, choosing from an infinite number of possible distributions of invisible “dark matter” in and amongst galaxies, assuming any given proposed theory is true (as yet MOND is not immune to this, as some dark matter is still needed at present).

Take, for example, geophysicists looking for oil-bearing strata using seismic or gravity surveys. The maths will deliver a large number of possible solutions, and then one has to physically drill holes to constrain the vast number of allowable solutions to a handful of possibles. Statistical computer models of the likely physical history of strata formation won’t wash, even though in cosmology the statistical equivalent is considered good evidence for the cold dark matter distribution in any given galaxy!
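To make the non-uniqueness concrete, here is a minimal sketch of the textbook case: the gravity signal of a buried uniform sphere depends only on its total excess mass, so a small dense body and a large light one at the same depth give the same surface profile. All numbers (depth, radii, density contrasts) and function names are hypothetical, chosen purely for illustration.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2

def sphere_mass(radius_m, density_contrast):
    """Excess mass (kg) of a uniform sphere with the given density contrast (kg/m^3)."""
    return 4.0 / 3.0 * math.pi * radius_m**3 * density_contrast

def vertical_gravity(x_m, depth_m, mass_kg):
    """Vertical gravity anomaly (m/s^2) at horizontal offset x from a buried sphere.

    Outside the sphere, the field equals that of a point mass at its centre.
    """
    r2 = x_m**2 + depth_m**2
    return G * mass_kg * depth_m / r2**1.5

depth = 1000.0                            # hypothetical depth of the centre, m
small_dense = sphere_mass(200.0, 800.0)   # radius 200 m, contrast 800 kg/m^3
large_light = sphere_mass(400.0, 100.0)   # radius 400 m, contrast 100 kg/m^3

offsets = [0.0, 250.0, 500.0, 1000.0, 2000.0]  # survey points along the surface, m
profile_a = [vertical_gravity(x, depth, small_dense) for x in offsets]
profile_b = [vertical_gravity(x, depth, large_light) for x in offsets]

# The two profiles agree to rounding error: surface gravity alone cannot
# tell these two bodies apart.
for x, ga, gb in zip(offsets, profile_a, profile_b):
    print(f"x = {x:6.0f} m   g_a = {ga:.3e}   g_b = {gb:.3e}")
```

Only extra information, such as a drill hole or a seismic reflection from the boundary, would break the tie.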

Admittedly, light bending by mass density distributions can help with constraining the density and location of dark matter, assuming GR or some alternative theory is correct; so despite what I said in my rhetoric above, there is an observation-theory self-consistency test possible for each theory, which is now being followed up on. The latest results, assuming GR is true, are that the dark matter that exists in and amongst galaxy clusters is more clumpy than expected, perhaps more like dark baryonic matter than cold dark matter.

All I am trying to point out is that these are early days in the science of cosmology and the very highest degree of scepticism must be maintained in regard to all proposed theories in this realm. Trying to create sociological bandwagons too early can do enormous long-term damage to science.


Agreed. 🙂


With respect to the friendly type of physicists and cosmologists and sympathy for astronomers, your fields are incredibly far off track. It is time to unify GR and QM and turn LCDM inside out. How can you even do science when you don’t know any of the following:

1. Universe background = flat space (nothing but dimensions) and linear time (unidirectional)

2. Universe ingredients = equal and opposite, Lp immutable, energy carrying point charges. |e/6|

3. Two free parameters = average volumetric density of point charges and energy carried by them.

4. The first structure to form is the tau dipole which is a positive point charge and a negative point charge orbiting each other according to a modified Coulomb’s Law that describes these point charges.

Now, get paper and pencil and draw that dipole.

Imagine them orbiting each other.

What happens when you do work W on one point charge and apply h-bar j-s of energy in the direction of orbital velocity?

Does each point charge perceive the electric field from its partner to be emanating from a point on a line that goes through the center of the orbit? No.

The speed of the electric field, c, is important.

The tau dipole is a system that:

a. stores energy in h-bar increments (1 to Planck frequency increments)

b. reduces its radius for every h-bar increment

c. increases point charge fraction of c speed for every h-bar increment

d. reduces wavelength for every h-bar increment

in other words the tau dipole is

i. a 143 bit energy accumulator

ii. a stretchy ruler

iii. a variable clock

iv. the basis of spacetime

The fields of physics, cosmology, and astronomy are lost and getting more lost due to a series of faulty conclusions over the past 133 years. Here is where this model leads:

5. QM’s quantum is emergent. Energy is continuous and structure quantizes it with the dipole.

6. QM’s uncertainty is the pole in the tau dipole circuit at h-bar/2 which is the tipping point in energy transactions. Hey Schrodinger’s cat just escaped the box!

7. The outer orbital shell in all fermions is a dipole. It can accept one h-bar every 1/2 revolution. This is QM spin.

8. Einstein’s spacetime geometry is implemented by tau dipoles or structure they form

9. Einstein’s observer needs to move from low energy spacetime to the Euclidean frame.

10. From the Euclidean frame it’s a lot easier now to see what is happening.

11. Standard matter particles are constructs of these point charges.

12. Constructs generally have a lot more apparent energy (i.e., mass) than spacetime aether.

13. The dipole shell(s) of standard matter constructs are effectively an alternating current circuit.

14. Gravity = standard matter climbing the gradient of spacetime aether energy.

15. EFE does well at large scales, but the EFE fail at the physical level of point charges.

16. The ONLY fundamental quanta are the point charges

and some huge implications

17. Bang, inflation, expansion, shrink, bounce are all galaxy local via the SMBH.

18. Koide’s formula describes the field interactions of three orthogonal nested tau dipoles at different radii.

19. Hence the explanations for fermion generations.

20. An electron CONTAINS a muon. A muon CONTAINS a tau. Abundant energy.

21. Each dipole shell layer can shield some or all of the energy it encapsulates.

22. An electron neutrino is three tau dipoles but only partial energy screening. Hence oscillation.

23. Photons are made of point charges : 6/6

24. Anti-matter cannot survive long in our universe unless it is in containment.

25. A proton CONTAINS a positron. A neutron CONTAINS an anti-neutrino. Nothing is missing.

26. I have a preliminary hunch that what we call anti-matter is a construct where the outer shell is at an integer + 1/2 frequency. That is what happens when you apply an h-bar to a dipole – a 1/2 revolution increase to spin 1/2 constructs, e.g., fermions. My guess is that 0.5 frequency shells react with integer frequency shells.

27. The strong force is between each containment shell and the next shell, or the payload if the innermost shell.

28. It seems that W and Z are like a can opener for dipole shells, so perhaps those bosons have the ‘anti-matter’ energies with integer + 0.5 frequency.

29. No singularities. No wormholes. No many worlds.

30. Multiverse = pocket universe = a SMBH eruption of point charge plasma and recycling.

31. A free tau dipole is the tau neutrino. 1/1

I could go on and on. Check out my Breakthrough Days posts on my blog at jmarkmorris.com

I’m a creative problem solver, not a scientist.

I have not found a sponsor that would enable me to publish on arXiv.

Cheers!

J Mark Morris


Show me a CMB power spectrum calculated from your theory.

Haven’t gotten there yet. Still working out the puzzle in easier areas for me to make progress. It is five steps forward one back at this point. Example: #26 above may be wrong. Perhaps it is clockwise vs counterclockwise execution of the same wave equation.


Let’s try something simpler. How about predicting from your model the value of g-2 for the electron, the muon and the tau and explaining why g-2 is different for the three identically charged leptons. If you cannot make predictions that can be tested against experiment, no-one will take you seriously. Richard Feynman explains the essence of the scientific method here:

https://fs.blog/2009/12/mental-model-scientific-method/

There are many other places you can find this, it’s from his lectures “The Character of Physical Law” given at Cornell in 1964. You can find all of them on YouTube.


Well, understanding how the quantum numbers emerge from the structure is on my list and I am making progress on decoding the point charge geometry for the standard model particles. See the two tables in today’s blog post. https://johnmarkmorris.com/2020/12/26/npqg-december-26-2020/. The second table is new today and I’m really pleased with how the structure is revealing itself. It is looking like shell after containment shell. And that’s actually what Koide’s formula is describing, the field interactions of three orthogonal rotating dipoles. So maybe a month or three by myself to make progress on the quantum numbers. I already deduced spin. Spin tells you how much of a dipole revolution is required to transfer an h-bar j-s. So with two point charges in the dipole it takes 1/2 revolution to boost frequency by 0.5. Each particle gets h-bar/2 j-s and the dipole locks that in by dropping radius. Then I will be able to look at g-2. Now, most of you here could probably go far faster than I if you would choose to engage with the NPQG model. By the way the model is indicating that these nested dipole shells can act like a Faraday cage and shield the energy of the next layer which is more energetic. The model is predicting that an electron contains a muon, and a muon contains a tau. That is not known to science yet.

I liked Feynman’s description of the scientific method, especially starting with the educated guess or intuition. Now we could have a discussion on Popper and Kuhn, but the problem is that I anticipate that the people here hold the scientific method in high regard. Well, there is going to need to be a lot of root cause analysis of all the wrong turns of the last 133 years because the scientific method and scientists are going to take a huge hit to credibility based on this point charge architecture of the universe. I made a video this week that goes through the top ten errors https://youtu.be/6N3Iaw8GYVM.

The article you linked says “The scientific method refers to a process of thought based on integrating previous knowledge, observing, measuring, and logical reasoning.” For me, integrating priors means figuring out where the scientists got the narrative wrong, and sadly it is a colossal set of mistakes, with no disrespect to the scientists. Where the scientific method goes wrong is that it assumes that a set of priors is correct. Even the many tensions in GR/QM/LCDM did not cause the fields to go back and find these major incorrect conclusions. So there is a lot of danger in taking narrative conclusions too far. Who told Lemaître to work backwards to a single event? The theories don’t specify a single event. However, I do see how it was easy to miss after Einstein erred and said spacetime was a geometry. That makes folks think some abstract geometry is expanding. Now, perhaps if Michelson-Morley had not concluded there was no aether, then Einstein might have gone with an aether. Then when we saw evidence of expansion, scientists would have said, where is all that new aether coming from? Then, hmm, we’re looking for something huge and powerful that goes bang. Then by the time Natarajan and Rees came along and started realizing that nearly every galaxy has an SMBH, they wouldn’t have carelessly said, oh sure, that fits with LCDM. If they had made the proper conclusion, then galaxy local inflation and expansion just fall out as obvious. Oh my, what a mess y’all are going to have on your hands to clean up. Yet it will be fun because everything will make so much more sense.

I want to be clear that I am not a scientist. I am a creative problem solver. I am not bound by the scientific method, nor by peer-reviewed publications. That’s good, because had I gone into these fields there is no way I would have found the solution to nature if I had to conform to the current set of incorrect priors.

Did you think about the most basic point charge dipole and how it implements so much of what we are missing?

For 2.5 years I have been ignored or bullied by scientists who think I am a nut. Well, I am certain that 20,000 physicists and cosmologists are nuts for believing the nutty priors. I give astronomers a pass because they are a lot kinder and I think it is really going to upset them when they figure out how badly the physicists and cosmologists failed.

Best, Mark

I just realized it is Planck’s constant h and not h-bar as I wrote above. It turns out that while using h-bar was convenient for physicists’ math, it obfuscates the true nature of what is happening. Nature doesn’t click off in h-bar units of energy in 1/(2pi) radians. It exchanges an h every QM spin x revolutions of the tau dipole.

I have no idea if anyone is actually grokking what I have been saying, or if they ignore it or dismiss it. However, you might want to look at this post, where I have decoded the standard model in terms of point charges and, even better, am deciphering the geometrical structures of fermions, photons and the other bosons. It is truly amazing. As far as I am concerned, GR and QM are now unified. I have no idea how long it will take physicists to recognize this, but it is as plain as day if someone will just seriously take a look. So, as I have said before, this is going to hit LCDM the hardest, believe it or not, because it is the most inside out. That is sort of a mixed blessing. On the one hand, it is going to be a ton of work to recast all the theories. Seriously, you might want to start over from ground zero. Lots of things in LCDM will plug back in, but some won’t. On the positive side, it will be nice for all of the cosmologists and astronomers to move into a tension-free future (let’s say reduce tensions 100x?): https://johnmarkmorris.com/2021/01/19/npqg-january-19-2021-planar-bosons-on-the-photon-train/

p.s. Malus’s Law and polarization are fully explained by the point charge model of photons as six electrinos and six positrinos, possibly geometrically as an electron neutrino coupled to an electron anti-neutrino, or as a set of planar dipoles orthogonal to the direction of motion.

p.p.s. I have no sponsor, which is required to publish on arXiv, and the journals balk, so I self-publish on my blog.

Hi Stacy, in my search for a simple explanation for gravity, would you say it could be explained simply as “the energy in matter causing a disturbance in the cohesive forces of space itself”?

Instead of saying the earth pulls an apple downward, could we say that the energy within the apple is inclined to bounce into areas of space that have been loosened to a greater extent by the energy of the earth?

This could also provide an explanation for inertia and momentum, in that the energy within the apple is attracted to the apple’s own location in space, which it has loosened by its own energy. Space reclaiming its hold after something has passed through would equalise the forces required to disturb space in the forward direction.

That a particle has an energy density enough to have loosened space where it is easier to bounce in on itself rather than travel outwards in straight lines?

What are your thoughts?

Such descriptions aren’t very helpful unless you can do something quantitative with them.

Hi Philip, a simple description that gave understanding of the mechanism behind gravity would provide direction and inspiration to those searching. Having a picture on the box makes it easier to know where the puzzle pieces go and which ones are upside down.

I’m not knocking it per se. Many people think more visually than mathematically (Einstein is a good example). However, it is easy to come up with pictures, but not easy to show that they are relevant to reality.

I’m not the first to claim this:

I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind.

—William Thomson, Lord Kelvin

There are many discussions of this in the popular literature. I don’t think I can add to them here.