There is a rule of thumb in scientific publication that if a title is posed as a question, the answer is no.

It sucks being so far ahead of the field that I get to watch people repeat the mistakes I made (or almost made) and warned against long ago. There have been persistent claims of deviations of one sort or another from the Baryonic Tully-Fisher relation (BTFR). So far, these have all been obviously wrong, for reasons we’ve discussed before. It all boils down to data quality. The credibility of data is important, especially in astronomy.

Here is a plot of the BTFR for all the data I have ready at hand, both for gas rich galaxies and the SPARC sample:

A relation is clear in the plot above, but it’s a mess. There’s lots of scatter, especially at low mass. There is also a systematic tendency for low mass galaxies to fall to the left of the main relation, appearing to rotate too slowly for their mass.

There is no quality control in the plot above. I have thrown all the mud at the wall. Let’s now do some quality control. The plotted quantities are the baryonic mass and the flat rotation speed. We haven’t actually measured the flat rotation speed in all these cases. For some, we’ve simply taken the last measured point. This was an issue we explicitly pointed out in Stark et al (2009):

If we include a galaxy like UGC 4173, we expect it will be offset to the low velocity side because we haven’t measured the flat rotation speed. We’ve merely taken that last point and hoped it is close enough. Sometimes it is, depending on your tolerance for systematic errors. But the plain fact is that we haven’t measured the flat rotation speed in this case. We don’t even know if it has one; it is only empirical experience with other examples that leads us to expect it to flatten if we manage to observe further out.

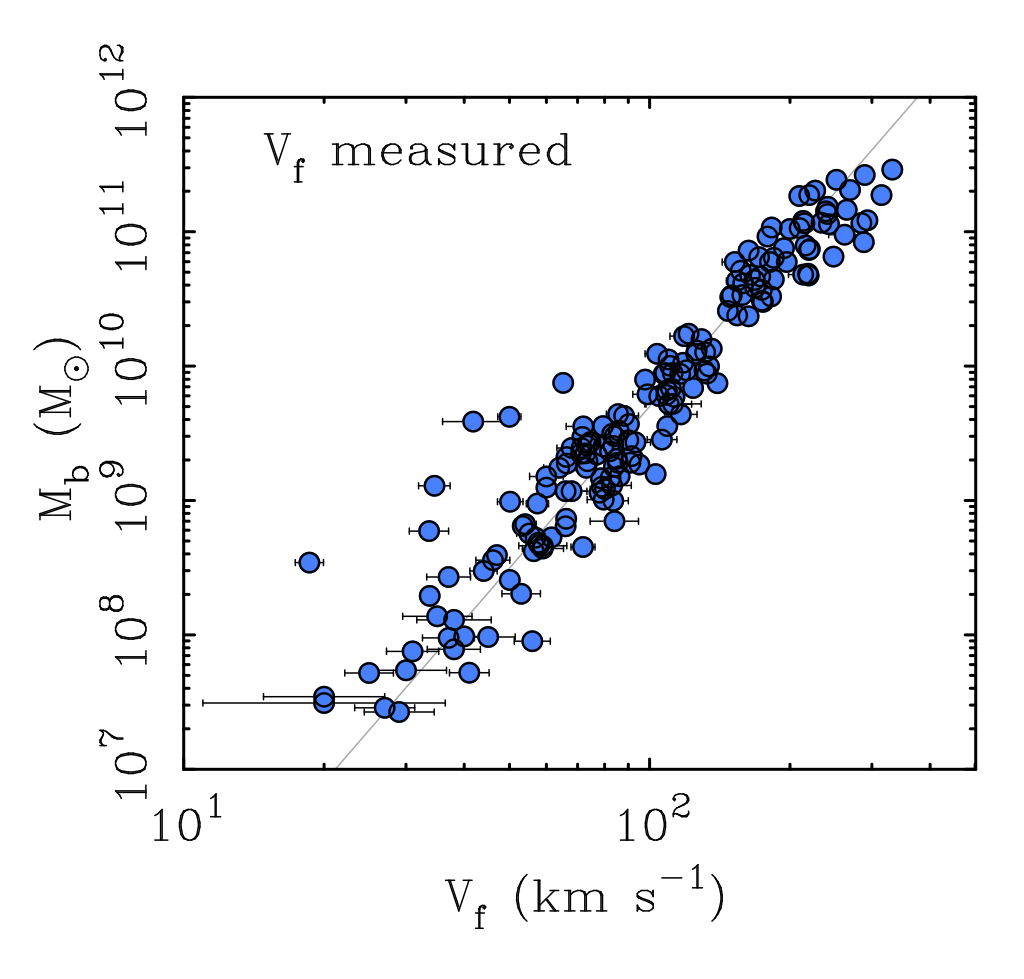

For our purpose here, it is as if we hadn’t measured this galaxy at all. So let’s not pretend like we have, and restrict the plot to galaxies for which the flat velocity is measured:

The scatter in the BTFR decreases dramatically when we exclude the galaxies for which we haven’t measured the relevant quantities. This is a simple matter of data quality. We’re no longer pretending to have measured a quantity that we haven’t measured.

There are still some outliers as there are still things that can go wrong. Inclinations are a challenge for some galaxies, as are distance determinations. Remember that Tully-Fisher was first employed as a distance indicator. If we look at the plot above from that perspective, the outliers have obviously been assigned the wrong distance, and we would assign a new one by putting them on the relation. That, in a nutshell, is how astronomical distance indicators work.
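To make the distance-indicator logic concrete, here is a minimal sketch. It assumes a relation of the form M_b = A V_f^4 with A = 50 M☉ km⁻⁴ s⁴; both the slope and the normalization are illustrative assumptions, not values quoted in this post. It also assumes that masses derived from fluxes scale as the square of the assumed distance, while the rotation speed does not depend on distance. The function names are hypothetical.

```python
import math

# Assumed normalization of M_b = A * V_f**4, in Msun km^-4 s^4 (illustrative only).
A = 50.0

def btfr_mass(v_flat_kms):
    """Baryonic mass (Msun) implied by the assumed relation for a flat speed in km/s."""
    return A * v_flat_kms**4

def distance_on_relation(d_assumed_mpc, m_baryon_msun, v_flat_kms):
    """Distance (Mpc) at which the galaxy would sit exactly on the assumed relation.

    Masses inferred from fluxes scale as D**2, so the correction factor is the
    square root of the ratio of the expected mass to the mass at the assumed distance.
    """
    return d_assumed_mpc * math.sqrt(btfr_mass(v_flat_kms) / m_baryon_msun)

# Hypothetical galaxy: V_f = 100 km/s, with M_b = 2.5e9 Msun at an assumed 10 Mpc.
print(distance_on_relation(10.0, 2.5e9, 100.0))
```

A galaxy that falls below the relation in mass at its assumed distance gets pushed to a larger distance, and vice versa. This only makes sense if the flat velocity itself is well measured.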

If we restrict the data to those with accurate measurements, we get

Now the outliers are gone. They were outliers because they had crappy data. This is completely unsurprising. Some astronomical data are always crappy. You plot crap against crap, you get crap. If, on the other hand, you look at the high quality data, you get a high quality correlation. Even then, you can never be sure that you’ve excluded all the crap, as there are often unknown unknowns – systematic errors you don’t know about and can’t control for.

We have done the exercise of varying the tolerance limits on data quality many times. We have shown that the scatter varies as expected with data quality. If we consider high quality data, we find a small scatter in the BTFR. If we consider low quality data, we get to plot more points, but the scatter goes up. You can see this by eye above. We can quantify this, and have. The amount of scatter varies as expected with the size of the uncertainties. Bigger errors, bigger scatter. Smaller errors, smaller scatter. This shouldn’t be hard to understand.
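The "bigger errors, bigger scatter" point can be illustrated with a toy simulation. This is a sketch under stated assumptions, not the analysis from our papers: mock galaxies are placed exactly on an assumed relation M_b = A V_f^4 (A = 50 M☉ km⁻⁴ s⁴, an illustrative value) with zero intrinsic scatter, then Gaussian errors in log mass and log velocity are added. The error sizes and the function name are hypothetical.

```python
import numpy as np

# Illustrative assumptions: M_b = A * V_f**SLOPE with no intrinsic scatter.
A = 50.0      # Msun km^-4 s^4, assumed normalization
SLOPE = 4.0   # assumed slope

def observed_scatter(sigma_logm, sigma_logv, n=5000, seed=0):
    """RMS residual in log10(M_b), in dex, about the input relation."""
    rng = np.random.default_rng(seed)
    log_v_true = rng.uniform(1.3, 2.5, n)              # roughly 20 to 300 km/s
    log_m_true = np.log10(A) + SLOPE * log_v_true      # exactly on the relation
    log_v_obs = log_v_true + rng.normal(0.0, sigma_logv, n)
    log_m_obs = log_m_true + rng.normal(0.0, sigma_logm, n)
    resid = log_m_obs - (np.log10(A) + SLOPE * log_v_obs)
    return float(np.sqrt(np.mean(resid**2)))

# Hypothetical error budgets (dex) for a "high quality" and a "low quality" sample.
for label, s_m, s_v in [("high quality", 0.10, 0.02), ("low quality", 0.30, 0.08)]:
    expected = np.hypot(s_m, SLOPE * s_v)  # errors add in quadrature
    print(f"{label}: measured {observed_scatter(s_m, s_v):.3f} dex, "
          f"expected {expected:.3f} dex")
```

Because the velocity error is multiplied by the slope, a modest uncertainty in velocity contributes as much scatter in mass as a much larger uncertainty in the mass itself. In this toy model all of the scatter comes from the errors, which is why it tracks the error budget.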

So why do people – many of them good scientists – keep screwing this up?

There are several answers. One is that measuring the flat rotation speed is hard. We have only done it for a couple hundred galaxies. This seems like a tiny number in the era of the Sloan Digital Sky Survey, which enables any newbie to assemble a sample of tens of thousands of galaxies… with photometric data. It doesn’t provide any kinematic data. Measuring the stellar mass with the photometric data doesn’t do one bit of good for this problem if you don’t have the kinematic axis to plot against. Consequently, it doesn’t matter how big such a sample is.

Other measurements often provide a proxy measurement that seems like it ought to be close enough to use. If not the flat rotation speed, maybe you have a line width or a maximum speed or V2.2 or the hybrid S0.5 or some other metric. That’s fine, so long as you recognize you’re plotting something different so should expect to get something different – not the BTFR. Again, we’ve shown that the flat rotation speed is the measure that minimizes the scatter; if you utilize some other measure you’re gonna get more scatter. That may be useful for some purposes, but it only tells you about what you measured. It doesn’t tell you anything about the scatter in the BTFR constructed with the flat rotation speed if you didn’t measure the flat rotation speed.

Another possibility is that there exist galaxies that fall off the BTFR that we haven’t observed yet. It is a big universe, after all. This is a known unknown: we know that we don’t know if there are non-conforming galaxies. If the relation is indeed absolute, then we never can find any, but never can we know that they don’t exist, only that we haven’t yet found any credible examples.

I’ve addressed the possibility of nonconforming galaxies elsewhere, so all I’ll say here is that I have spent my entire career seeking out the extremes in galaxy properties. Many times I have specifically sought out galaxies that should deviate from the BTFR for some clear reason, only to be surprised when they fall bang on the BTFR. Over and over and over again. It makes me wonder how Vera Rubin felt when her observations kept turning up flat rotation curves. Shouldn’t happen, but it does – over and over and over again. So far, I haven’t found any credible deviations from the BTFR, nor have I seen credible cases provided by others – just repeated failures of quality control.

Finally, an underlying issue is often – not always, but often – an obsession with salvaging the dark matter paradigm. That’s hard to do if you acknowledge that the observed BTFR – its slope, normalization, lack of scale length residuals, and negligible intrinsic scatter; indeed, the very quantities that define it – was anticipated and explicitly predicted by MOND and only MOND. It is easy to confirm the dark matter paradigm if you never acknowledge this to be a problem. Often, people redefine the terms of the issue in some manner that is more tractable from the perspective of dark matter. From that perspective, neither the “cold” baryonic mass nor the flat rotation speed have any special meaning, so why even consider them? That is the road to MONDness.

“Another possibility is that there exist galaxies that fall off the BTFR that we haven’t observed yet. It is a big universe, after all.” — “Astronomy Fact: There are too many galaxies” https://xkcd.com/2596/ (Have a silly day!)


One of Karl Popper’s great achievements was to recognise falsifiability as the key tenet of scientific theories. If I was looking for counter-examples to the BTFR, I would be looking at galaxies that fall below the BTFR line (even just a little below it). In your third plot there are a few galaxies with quite short error bars in the velocity axis, where more accurate measurements could be a good test. If better measurements bring the points back towards the line, then all well and good; if not then you have your ‘black swan’.


The unknowable unknown that causes most of the residual scatter is the stellar mass-to-light ratio. I’ve assumed a constant here; real galaxies will vary by 0.1 – 0.15 dex. That by itself pretty much explains all the scatter, which is already vastly smaller than it should be.


Has anyone noticed Milgrom’s newest detailed analysis of a specific AM (Angular Momentum) relation? It’s apparently calculable from MOND and gives similar plots as the BTFR, but with gas percentage giving a constant factor difference (as predicted). Interesting stuff!


The root problem here is not the dark matter paradigm but the intellectual sclerosis that is at the heart of the theoretical physics community. Leaving aside the baroque mess in particle physics, the fundamental problematic cosmological paradigm is that of the “Expanding Universe”. The EU is the foundational assumption of LCDM and that is all it is – an assumption. That this assumption has been raised to the level of unassailable dogma within the cosmological community is a scientific travesty.

That no research into alternative, non-expanding cosmologies can be published let alone receive funding within the “open-minded” scientific academy means that contemporary cosmology is not a science but a belief system, one that is impervious to scientific criticism. No amount of contradictory evidence is going to overcome the dark matter paradigm. LCDM is a cult and its true believers don’t care about your factual evidence.

Dark matter is only one of four structural elements propping up the EU paradigm, the others being inflation, causally-interacting spacetime, and dark energy. All four elements are essential to the EU paradigm and none of them are part of the Cosmos we actually observe. LCDM, with its many free parameters which are freely augmentable as needed, can of course be massaged into agreement with actual observations. That’s a mathematical trick as old as Ptolemy. Such manipulated agreements have no scientific merit in light of the overwhelmingly negative empirical evidence for the model’s structural elements.

The claim that LCDM is a great success because everybody says so is fatuous nonsense; science isn’t a popularity contest. The model does not make substantive predictions only post-dictions. This dates back to the alleged successful CMB predictions which ranged over an order of magnitude and did not encompass the observed value.

Again, the problem here is not that the EU paradigm is almost certainly wrong. Being wrong is a necessary part of the scientific endeavor. It is the rigid inability of the cosmological community to acknowledge the shortcomings of their models based on that paradigm, and at the very least to engage in an open-minded investigation of non-EU models that is scientifically inexcusable. The grip of the EU cult on publishing and research-funding needs to be broken before cosmology can return to being a science. Until that happens, Dr. McGaugh, you will rail in vain; your good work will not move the closed minds of the EU cult.


Contrary to what Lawrence Cox said above, the key figures in the philosophy of science in relation to the current status of physical cosmology and the Lambda CDM model are Kuhn and Lakatos, rather than Popper as naively argued. Thomas Kuhn was the philosopher of science who came up with the concept of scientific paradigms and paradigm shifts in science, and Imre Lakatos was the philosopher who came up with the concepts of progressive and degenerative research programs. Both Kuhn and Lakatos showed that no theory in science could be 100% falsified in the naive Popperian sense, nor could any theory be 100% verified. Instead, according to Kuhn, at any time in the history of science, there is a dominant theory which is usually considered by the scientific establishment to be the best model of existing observations of this universe, which is the scientific paradigm at that time. However, new observations are made that contradict the existing scientific paradigm, and eventually the contradictions accumulate to the point where an existing paradigm is overturned and the scientific establishment shifts to a new paradigm.

Kuhn’s paradigm roughly corresponds to the dominant research program in Lakatos’s terminology. According to Lakatos, a research program consists of the theoretical core that must be maintained or else the research program would simply collapse, as well as a number of auxiliary hypotheses that could be modified or abandoned if the hypothesis contradicts reality. A research program is progressive if the auxiliary hypotheses in the research program provide greater explanatory or predictive power to the research program, and it is degenerative if the auxiliary hypotheses are only added post hoc merely to explain discrepancies between the research program and observations. Furthermore, Lakatos emphasizes that every research program/paradigm/theory has conflicts with observations, but nevertheless, a research program is able to continue unabated if the research program continues to be progressive. Within each research program, there are also many subprograms which are each individually at some level of progressive vs degenerative.

There are two things in physical cosmology to consider here: the state of Lambda CDM as a model of physical cosmology and the state of general relativity as a theory of gravity.

Lambda CDM is very clearly a degenerative research program at this point in time, and plenty of intractable problems and conflicts with experimental observation in Lambda CDM are already voiced in the scientific media (who call this entire situation a “cosmology crisis”), both on the dark energy side (conflicts with the Hubble rate and large scale structures) and the CDM side (as detailed in this blog). Furthermore, we see alternatives to CDM such as superfluid dark matter, self-interacting dark matter, and so forth, and alternatives to the cosmological constant such as quintessence or even the lack of dark energy completely explained by unaccounted factors in general relativity itself, all being explored by theorists as well. These indicate that the status of Lambda CDM is already weakened considerably from the past few decades, and I wouldn’t be surprised if within the next 10 years Lambda CDM would be abandoned entirely and replaced by a new standard model of cosmology.

Whether general relativity as the dominant theory of gravity in fundamental physics would also be a casualty of this paradigm shift is dependent on whether any replacements for CDM are simple enough. If alternative dark matter theories become too complex, then purely by Occam’s razor, directly modifying gravity would become simpler than adding more and more particles and fields and interactions between such particles and fields like epicycles in orbits. However, the effects of replacing general relativity with something like a relativistic MOND would be much more profound in theoretical physics than merely replacing the Lambda CDM model in astrophysics and cosmology; in particular, decades of research in quantum gravity such as asymptotic safety, string theory, loop quantum gravity, and Peter Woit’s Euclidean twistor unification would suddenly become outdated as they all assume general relativity to be true.


Reading the comments on the posts on this blog led me to this article by Alexander Deur: [Effect of the field self-interaction of General Relativity on the Cosmic Microwave Background Anisotropies](https://arxiv.org/abs/2203.02350), which is a sign that the simplest alternative to Lambda CDM according to Occam’s razor might simply be taking into account gravitational self-interaction and other non-linear gravitational effects in general relativity (such as backreaction), rather than any new fields or particles or modified gravity.


Hello Madeleine,

I certainly agree with your point that LCDM is well described by Lakatos’ degenerate research program. However your subsequent discussion focuses on the Lambda and CDM which are, in terms of Lakatos’ model, auxiliary hypotheses; they are not the theoretical core “that must be maintained or else the research program would simply collapse.” This is clear from your discussion of possible alternatives to both dark matter and dark energy. Any such change would leave the theoretical core intact and its status as a degenerate research program unaltered.

As you point out, what makes a research program degenerate is a theoretical core that requires auxiliary hypotheses “… only added post hoc merely to explain discrepancies between the research program and observations.” Any replacements for Lambda and CDM would fit that description and leave modern cosmology saddled with its current degenerate program. What needs to be reevaluated is the theoretical core.

I would suggest that this theoretical core consists of two foundational assumptions adopted a century ago:

1. The Cosmos is a unified, coherent, simultaneous (non-relativistic) entity that can be successfully modeled as such using a relativistic gravitational model derived in the context of the solar system.

2. The cause of the cosmological redshift is some form of recessional velocity.

Combined, those two assumptions can be characterized as the Expanding Universe paradigm. The EU paradigm is the reason the program is degenerate; dark matter or dark energy are symptoms of the problem (auxiliary hypotheses), not the cause. Unfortunately, the socio-economic incentives of the modern scientific academy are not conducive to a reconsideration of the theoretical core.

As to the matter of General Relativity, I think a clear distinction has to be drawn between Einstein’s GR and that of the mid-20th century revisionists, Dicke, Wheeler, et al. The latter version, replete with a reified spacetime and conjectures of mathematical convenience passed off as “principles” (the Einsteinian and Strong equivalence principles) needs to be discarded and replaced by Einstein’s original understanding of his theory. That version of GR still has some shelf-life remaining I think, but like all gravitational models to date it only describes the gravitational effect, not the gravitational mechanism. Regards,

Bud


Certainly, one can hardly argue with the principle of general relativity as a fundamental physical principle. The various forms of the equivalence principle, on the other hand, presume that we already know what mass is – which we clearly don’t.


Hi Robert,

Interesting, but I think we’d be imposing on Dr. McGaugh’s patience and hospitality to pursue that discussion here so I’m going to copy your comment and post it on my blog for further elaboration: https://thisislanduniverse.com/blog/

If you object of course, I’ll remove it. Best,

Bud


Sure. You can also visit my blog where I have elaborated my point of view, for example in a post entitled “The definition of mass” a month ago.


“As you point out, what makes a research program degenerate is a theoretical core that requires auxiliary hypotheses “… only added post hoc merely to explain discrepancies between the research program and observations.” Any replacements for Lambda and CDM would fit that description and leave modern cosmology saddled with its current degenerate program. What needs to be reevaluated is the theoretical core.”

You might be right that Lambda and CDM might just be auxiliary hypotheses. But the Friedmann–Lemaître–Robertson–Walker metric and the Friedmann equations are certainly part of the theoretical core of cosmology developed in the 1920s and 1930s, and the assumptions that the FLRW metric rests upon, such as isotropy and homogeneity, are also being called into question by recent observations, such as that of the CMB dipole.

“As to the matter of General Relativity, I think a clear distinction has to be drawn between Einstein’s GR and that of the mid-20th century revisionists, Dicke, Wheeler, et al. The latter version, replete with a reified spacetime and conjectures of mathematical convenience passed off as “principles” (the Einsteinian and Strong equivalence principles) needs to be discarded and replaced by Einstein’s original understanding of his theory. That version of GR still has some shelf-life remaining I think, but like all gravitational models to date it only describes the gravitational effect, not the gravitational mechanism. Regards,”

This is somewhat orthogonal to the point I was trying to make, which is that replacing Einstein’s general relativity with a MOND theory as advocated on this blog is more radical than replacing stuff like Lambda or the FLRW metric in the LCDM model.


“… the assumptions that the FLRW metric rests upon, such as isotropy and homogeneity…”

In FLRW, the metric is assumed to apply to a unified, coherent, simultaneous entity, a Universe, to which are then ascribed the properties of isotropy and homogeneity. The axiomatic (true by definition) nature of that Universe assumption is clearly in evidence in the very first sentence of the Conclusions section of the Snowmass paper you cite (https://arxiv.org/pdf/2203.06142v1.pdf):

“The present tensions and discrepancies among different measurements, in particular the H0 tension as the most significant one, offer crucial insights in our understanding of the Universe.”

What is clear in that sentence is that the extensive list of “tensions” between the LCDM model and observations documented in the paper has not evoked, on the part of its numerous authors, any willingness (or perhaps ability) to reconsider the model’s core foundational assumption – the existence of a vast unitary entity – the Universe.

This unwillingness or inability persists despite the fact that the assumed Universe’s existence is contradicted by a central tenet of relativity theory (the absence of a universal frame) and that well-established physics (the finite maximum speed of light and the redshift-distance relationship) argues against the existence of a physically meaningful “Universe” on a scale commensurate with our cosmological observations.

The situation with respect to LCDM is exactly analogous to the situation with Ptolemaic cosmology prior to Copernicus. There was no pathway to derive heliocentrism by mathematically tinkering with the geocentric model. Progress wasn’t possible until the geocentric assumption was discarded and replaced with heliocentrism.

The Cosmos is almost certainly not a unified, coherent, simultaneous entity (the Universe assumption) and until that assumption is, at very minimum, subject to reconsideration, modern cosmology will remain an incoherent, unscientific mess, a degenerate research program engaged in a never ending quest for auxiliary hypotheses and parameters to prop up its untenable core – see section D. “The Road Ahead: Beyond ΛCDM” in the Snowmass paper.

If cosmology abandons FLRW but maintains the Universe assumption the resulting model will differ superficially from the current one but describe just another imaginary mathematical construct bearing in its particulars, as with ΛCDM, no relationship to the observed Cosmos.


The recent Snowmass paper on cosmology https://arxiv.org/abs/2203.06142v1 has the following statements:

“Obviously, variations of cosmological H0 across the sky with the flat ΛCDM model make any discrepancy between Planck’s determination of H0 with the same model and local H0 determinations a moot point. Given the rich variety of the above observations, and the differences in underlying physics, it is hard to imagine that the CMB dipole direction is not a special direction in the Universe. The status quo of simply assuming that it is kinematic in origin, especially since it is based on little or no observational evidence, may be untenable. If this claim is substantiated, not only do the existing cosmological tensions in H0 and S8 need revision, but so too does virtually all of cosmology. In short, great progress has been made through the cosmological principle, but it is possible that data has reached a requisite precision that the cosmological principle has already become obsolete.”

and

“Given that the magnitude of the matter dipoles are currently discrepant with the magnitude of the CMB dipole, systematics aside, this implies that the Universe is not FLRW. Note that this discrepancy between the matter and radiation dipole, if true, is once again an example of an early Universe and late Universe discrepancy, reminiscent of H0 and S8 tension. In effect, a breakdown in FLRW could explain both H0 and S8 tension, as recently highlighted [160].”

Since Snowmass is fairly mainstream, these statements seem to indicate that the most likely breakdown of LCDM is likely to come from abandoning the FLRW metric and the cosmological principle rather than abandoning dark energy or dark matter.


In case you haven’t come across it already, you would likely enjoy _A Philosophical Approach To MOND_ by David Merritt.


Madeleine, I think you have completely missed the point of my comment. Popper’s achievement was replacing the idea of verificationism (as in the Vienna Circle approach) with falsificationism, and even though naive Popperism has been rejected, the ideas of both Lakatos and Kuhn still depend on falsificationism. Indeed, when discussing Lakatos and his ‘research programme’ you need to look to Feyerabend, not Kuhn, for a different approach to what constitutes science. Kuhn’s idea of ‘revolutions’ with particular reference to Copernicanism is based on an inaccurate reading of events which would now largely be rejected by historians of science.

And please note that I spell my first name with a U not a W, as ought to be clear.


Wikipedia’s article on LCDM appears to have a longer section on challenges to LCDM than successes of LCDM: https://en.wikipedia.org/wiki/Lambda-CDM_model


I have a naive question. How exactly do you calculate the rotation curve of each galaxy?

Starting from the spectrum, studying the HI line and taking into account the M/L, you find the mass, right? I have seen that you have plotted so many RCs, but I cannot find a simple recipe to do it myself (assuming I have a spectrum!)


That is what graduate tuition is for.

How one goes about this depends on the kind of data you have. There are many papers written about this. One example is https://arxiv.org/abs/astro-ph/0107326


Contrary to what is written above, LCDM is a great success because everybody says so. Science is a popularity contest.

The problem is when mainstream scientists “think that _everybody_ says so”, reject that there is a “cosmology crisis” and stop listening to dissident voices like that of Stacy McGaugh and Pavel Kroupa.

Lambda and CDM are auxiliary hypotheses because they were just invented to fix problems with the Big Bang model. Modifying general relativity will not resolve the problem. Many more assumptions should be examined first.


I’m not sure what your point is here.

You say that the problem is that mainstream scientists “think that everybody says so” that [LCDM is a great success] and reject that there is a “cosmology crisis”, when all the evidence indicates that mainstream scientists do recognise that there is a crisis in the field of cosmology. There are the Hubble tension, the S_8 tension, and the CMB dipole, amongst other discrepancies showing that the concordance model has issues that it is unable to resolve; numerous conferences in the past 2 years dedicated to finding alternatives to the concordance model to resolve said issues, such as the Snowmass 21 conference mentioned above; and mainstream media such as Forbes and Space.com publishing articles stating that there is a “cosmology crisis” with the concordance model.

There is a separate crisis involving the tensions between observation and the CDM part of the model, but that is largely ignored by the mainstream at the moment, and for that I would agree with you. However, you go on to say that mainstream scientists should listen to the likes of Stacy McGaugh and Pavel Kroupa, and then two sentences after say that Stacy McGaugh and Pavel Kroupa are wrong because modifying general relativity will not solve problems with the Big Bang model. Modifying general relativity is exactly what the likes of Stacy McGaugh and Pavel Kroupa are proposing when they advocate for MOND or RelMOND on this blog, as they propose the modified gravity theories MOND or RelMOND as a replacement for CDM. CDM, you said, was an auxiliary hypothesis invented to fix problems with the Big Bang model.

Mainstream cosmologists by and large are examining other assumptions of the concordance model first, which is why there has been much more attention directed to the cosmological principle, FLRW, and dark energy than towards CDM, because the issues currently discussed in the cosmology crisis by and large don’t really have much to do with CDM. With current trends, it is quite conceivable that the concordance model will be replaced with a completely different model, yet CDM will be one of the only parts of the concordance model that remains intact in the new model. The issue, according to the likes of Stacy McGaugh and Pavel Kroupa, is that not enough mainstream scientists are willing to entertain the notion that modifying general relativity might be needed to resolve the problems, and your last two sentences count you in as one of them.


Concordance cosmology is failing miserably, everybody can see that. And that’s not new, cosmology has been in a state of crisis for decades, always on the brink of falling apart. “Big Bang not yet dead but in decline” they were reporting in 1995 (http://www.nature.com/articles/377099a0)

There are so many crises in cosmology the situation is completely hopeless. With a name like ‘concordance’, it is a shame that nothing fits: EDGES reports too much signal, the optical background radiation is too strong, large scale structures that shouldn’t exist are observed, early galaxies have fantastic star forming rates, galaxies like El Gordo can’t be simulated, etc. There are dozens of articles published each year in astronomy journals that contain the word “surprising”! Despite all that, the Big Bang model is artificially kept alive with a trio of hypotheses: dark matter, inflation and dark energy, three things for which there is no direct observational support.

Since these hypotheses have only produced more headaches, it is clear that they should all be discarded. Cosmologists should go back a few decades and resume the development of cosmological models based on today’s large scale observational surveys, but not on old misguided hypotheses.

Cosmologists suffer from an acute “plan continuation bias”. Alternatives to the concordance model are either add-ons (new particles, new fields, etc.) or tweaks (varying neutrino masses, MOND, etc.) that rely on the correctness of three bad hypotheses. Non-mainstream media refers to those alternatives as “epicycles”, that says it all. arxiv.org/abs/2103.01183 gives a large sample of failed alternatives, and that’s just for the Hubble tension.

Astronomers like Kroupa and McGaugh (and a few others) are doing two very important jobs:

1) they look at the data and show that the dark matter hypothesis does not work;

2) they show that nobody understands why MOND fits some observations but not others.

Personally, I think MOND is a phenomenological manifestation of a more fundamental phenomenon that is not related to gravity. From this point of view, McGaugh and Kroupa are not wrong, they are just using available tools to make computations and comparisons with data. But don’t count me in with the MONDians, I’m still with Newton’s a=GM/r^2.

My short comment was meant to point out that even if dark matter doesn’t exist, cosmologists are not rejecting that hypothesis despite the efforts of competent astronomers. I can’t imagine what it will take for them to abandon dark energy and inflation.

That is the real crisis in cosmology.

Hi Louis,

“Since these hypotheses have only produced more headaches, it is clear that they should all be discarded. Cosmologists should go back a few decades and resume the development of cosmological models based on todays large scale observational surveys, but not on old misguided hypotheses.”

I agree to a point, but if you only discard the auxiliary hypotheses (inflation, DM, DE) but leave the “expanding universe” paradigm intact you’re going to be stuck with the same model dependent problems those hypotheses try unsuccessfully to address. OTOH, if you drop the “expanding universe” assumption at the heart of the standard model, the need for the auxiliary hypotheses evaporates. A big bang for the buck you might say.

So cosmologists need to go further back than a few decades, they need to go back a century and consider alternatives – to the foundational assumptions at the heart of FLRW and to the redshift equals recessional velocity assumption. The real crisis in cosmology is that the socio-economic structure of the scientific academy seems designed to prohibit such a scientifically reasonable and fully justifiable effort.

The insistence by the standard model’s proponents that their miserable failure of a model is in fact a great success is unscientific nonsense. Somehow, some way, the scientific academy has to clear a space for the investigation, both theoretical and observational, of non-expanding cosmological models. Until that becomes possible, the current crisis will continue unabated.

At root the crisis in cosmology is an institutional problem. Failure is a necessary part of the scientific endeavor, but when failure goes unacknowledged, stasis ensues and scientific progress becomes impossible.

“So cosmologists need to go further back than a few decades, they need to go back a century and consider alternatives – to the foundational assumptions at the heart of FLRW and to the redshift equals recessional velocity assumption.”

They have — dozens of alternatives. Every one has failed.

Dozens??? Name two formal, published studies of non-expanding-universe cosmologies that have been done in the last 50 years – make it 100 years. Just two – documented.

Zwicky, F. (1929). “On the Redshift of Spectral Lines Through Interstellar Space”. Proceedings of the National Academy of Sciences. 15 (10): 773–779.

Finlay-Freundlich, E. (1954). “Red-Shifts in the Spectra of Celestial Bodies”. Proc. Phys. Soc. A. 67 (2): 192–193.

D.F. Crawford, “Photon Decay in Curved Space-time”, Nature, 277(5698), 633–635 (1979).

LaViolette P. A. (April 1986). “Is the universe really expanding?”. Astrophysical Journal. 301: 544–553.

Interesting. I’ll comment after I’ve read the two papers that aren’t behind a paywall. Only one seems to be an actual cosmology.

The failure of dozens of alternatives does not imply that there is no alternative. Just that we have not yet considered the right one.

Great advice, Gena!!

I believe a crucial acid test of any attempt to solve current cosmological puzzles, without invoking Dark Matter (DM), is to explain the apparently irrefutable offset of the gravitational potential wells in the Bullet Cluster that are associated with the galactic clusters, and cleanly separated from the huge gas clouds. This is, for sure, a thorn in the side of any model that dispenses with DM. The Bullet Cluster has long been touted as definitive proof of the existence of DM within the LCDM paradigm, and for good reason. LCDM has a simple dynamical interpretation of how this came about that, taken alone, is rather straightforward, and meets the criteria of Occam’s Razor.

According to a physics.stackexchange posting: https://physics.stackexchange.com/questions/210401/bullet-cluster-and-mond in the standard DM picture the gravity lensing profile of the Bullet Cluster indicates that 9% of the baryonic mass is in hot gas, 11% in galaxies, within the two clusters, and the remaining 80% in DM that is centered on each cluster. Assuming this is correct, this is at variance with what I’ve read for years, namely that “most” of the baryonic mass is in the hot gas clouds, usually cited to be about 90%. This certainly improves the picture and viability of non-DM alternatives like MOND. I’m not exactly sure how MOND addresses the very large gravity wells associated with the clusters in the Bullet Cluster system, but Milgrom seems to be saying that there is a baryonic component, aside from the galaxies, that passes unhampered through the collision zone, remains with the galaxy clusters, and thus enhances their gravitational potential. I gather this from his statement in the “MOND Pages” (Milgrom’s perspective on the Bullet Cluster): “When two clusters collide head on the gas components of the two just stick together and stay in the middle, while the rest (galaxies plus this extra component I spoke of) just go through and stay together.”

Deur’s quantum gravity approach also has a non-DM mechanism to explain the lensing data for the Bullet Cluster. It is nicely explained at Andrew Ohwilleke’s site: http://dispatchesfromturtleisland.blogspot.com/p/deurs-work-on-gravity-and-related.html under “Why does the Bullet Cluster behave as it does?” Here Andrew explains that the approximately spherically symmetric gas clouds revert to a 1/r^2 gravitational force law in Deur’s self-interacting graviton approach, and thus evince little apparent DM. In contrast, the galaxies in the clusters, behaving almost like point masses, cause the self-interacting gravitons to more or less form “flux tubes” enhancing the gravitational pull between them, so they mutually orbit faster, thereby mimicking a greater amount of mass than is actually there.

A problem I see with this is that, while this mechanism would indeed mimic a greater perceived gravity field, it would not equate to actual increased gravity outside of the cluster, which is indicated by the lensing. But I’m a bit groggy this AM, so maybe I’m overlooking something here that negates this argument.

As an afterthought to the last paragraph in my previous comment, it occurred to me that any lensing contour lines interior to the perimeter of either galaxy cluster (in the Bullet Cluster) could be affected by the greatly concentrated gravitational force along the “flux tubes” interconnecting the individual galaxies. That is, if a significant number of the distorted background light sources, used to map the contours, happen to intercept line segments connecting individual galaxies in the clusters, that could serve as evidence for the existence of these flux tubes. In fact, if such a case could be convincingly demonstrated it would be quite compelling evidence for Deur’s quantum gravity model.

I wonder how the DM paradigm can be abandoned when most galaxy formation models begin with a Dark Matter halo drawing in normal matter. It seems to me that redefining the nature of that halo has to be one of the first steps.

Pavel Kroupa suggests a reasonable way to systematically start moving away from this mess without panic:

Just listen for three minutes if you’re short on time.

Five of the visible attendees smile at the question, including Stacy… very telling!

Although it only peripherally impinges on the topic of this post (the BTFR) I am finding the thread over at Physicsforums titled: “Do Gravitons Interact with Gravitons”, which discusses Deur’s work, highly informative.

https://www.physicsforums.com/threads/do-gravitons-interact-with-gravitons.1002590/page-2

Has anyone from the MOND community ever publicly reviewed or commented on the theory of A. Deur?

It would be interesting to know what the MOND-experts say about it.

I just wanted to say that comments about other theories being completely thrown out if GR is modified/overthrown are quite overblown. Any theory is going to need to at least approximate GR the same way GR approximates Newton, and there’s a decent chance it will be more relativistic and not less if we consider historical trends (Newton took some inspiration from Galileo even if he didn’t quite have the mathematical apparatus without tensors to take it all the way, Einstein went even further and considered it in the context of Maxwell and light as an invariant which is why Newton formulated in modern relativistic form is still not the same as Einstein’s relativistic equations).

Further, programs such as asymptotic safety have absolutely nothing to do with GR: asymptotic safety is the idea that the running of the gravitational couplings becomes ‘safe’ at high energies (rather than, say, blowing up to infinity). Pretty much _any_ quantum gravity field theory is going to have gravitational couplings (even if it ends up being some sort of trivial coupling) unless you do something really weird like saying there is no gravity and thus nothing to couple (I’m sure flat earthers are fans of this -_-).

Ergo, modified gravity could indeed be asymptotically safe without GR being involved at all; the two are completely independent.

I see a lot of attacks on the idea of the big bang, but I see absolutely no reason or evidence to think the universe is steady state, and plenty of evidence to show that at far out distances (far out times!) the universe was a very different place. Inflation being true or false would have absolutely no impact on this, as that’s more a theory about the rate of expansion at a certain period to ensure greater flatness and not the concept of expansion itself (something we naturally expect, or its inverse of contraction, in a dynamic spacetime where matter is present and influences spacetime, even without the introduction of a cosmological constant), and I find it bizarre when people try to conflate the big bang and inflation – it shows they don’t actually understand what these terms mean.

Could anyone comment on the paper which claims cold dark matter could reproduce MOND?

[Submitted on 10 Mar 2022]

The origin of MOND acceleration and deep-MOND behavior from mass and energy cascade in dark matter flow

Zhijie Xu

The MOND paradigm is an empirical theory with modified gravity to reproduce many astronomical observations without invoking the dark matter hypothesis. Instead of falsifying the existence of dark matter, we propose that MOND is an effective theory naturally emerging from the long-range and collisionless nature of dark matter flow. It essentially describes the dynamics of baryonic mass suspended in fluctuating dark matter fluid. We first review the unique properties of self-gravitating collisionless dark matter flow (SG-CFD), followed by their implications in the origin of MOND theory. To maximize system entropy, the long-range interaction requires a broad size of halos to be formed. These halos facilitate an inverse mass and energy cascade from small to large mass scales that involves a constant rate of energy transfer $\epsilon_u = -4.6\times10^{-7}\,\mathrm{m^2/s^3}$. In addition to the velocity fluctuation with a typical scale $u$, the long-range interaction leads to a fluctuation in acceleration with a typical scale $a_0$ that matches the value of critical MOND acceleration. The velocity and acceleration fluctuations in dark matter flow satisfy the equality $\epsilon_u = -a_0 u/(3\pi)^2$ such that $a_0$ can be determined. A notable (unexplained) coincidence of cosmological constant $\Lambda \propto (a_0/c)^2$ might point to a dark energy density proportional to acceleration fluctuation, i.e. $\rho_{vac} \propto a_0^2/G$. At $z=0$ with $u = 354.61\,\mathrm{km/s}$, $a_0 = 1.2\times10^{-10}\,\mathrm{m/s^2}$ can be obtained. For given particle velocity $v_p$, maximum entropy distributions developed from mass/energy cascade lead to a particle kinetic energy $\epsilon_k \propto v_p$ at small acceleration $< a_0$. Combining this with the constant rate of energy transfer $\epsilon_u$, both regular Newtonian dynamics and deep-MOND behavior can be fully recovered.

Comments: 20 pages, 8 figures

Subjects: Cosmology and Nongalactic Astrophysics (astro-ph.CO); Astrophysics of Galaxies (astro-ph.GA); Fluid Dynamics (physics.flu-dyn)

Cite as: arXiv:2203.05606 [astro-ph.CO]
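
As a quick sanity check on the numbers quoted in the abstract, the stated equality can be inverted for a0. This is only a sketch of that arithmetic using the two values given in the abstract; the function name and constants below are my own labels, not anything taken from the paper.

```python
import math

# Values as quoted in the abstract (at z = 0); the names are illustrative only.
EPSILON_U = -4.6e-7   # rate of energy transfer, m^2/s^3
U = 354.61e3          # velocity fluctuation scale, m/s (354.61 km/s)


def a0_from_cascade(epsilon_u: float, u: float) -> float:
    """Invert epsilon_u = -a0 * u / (3*pi)**2 to solve for a0 (m/s^2)."""
    return -epsilon_u * (3 * math.pi) ** 2 / u


a0 = a0_from_cascade(EPSILON_U, U)
print(f"a0 = {a0:.2e} m/s^2")
```

With these inputs the result comes out at roughly 1.15e-10 m/s^2, which rounds to the 1.2e-10 m/s^2 quoted. So the abstract's numbers are at least internally consistent, which of course says nothing about whether the underlying physics is right.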

It’s a success for the MOND theorists because people aren’t outright dismissing the theory anymore, but are now trying to explain MOND at a deeper level.

True. I am wondering if that paper is sound or deeply flawed.

The idea of treating it like turbulence, but with zero viscosity seems sound. One obvious reservation is that in 3D turbulence the energy transfer is primarily from large scales to small scales and he needs to invoke 2D turbulence to find an example of energy transfer from small scales to large scales, which he needs for his model. As the universe has three spatial dimensions, this seems like a serious weakness. It would be much more convincing if he could make this work with a normal rather than inverse energy cascade, or else demonstrate that an inverse energy cascade will always occur in collision-less dark matter, rather than just assuming it.

Also in the conclusions he says: “In N-body simulation, the root-mean-square particle acceleration decreases with time and matches the critical MOND acceleration (Fig. 5).” That is obviously the case at z=0, but his model means that for z>0 the root-mean-square particle acceleration, and therefore a0 should be higher and so the Tully-Fisher relation would be shifted to higher velocities as we look back further in time. As far as I know from Stacy’s previous blog posts, this is not the case.

This is a classic example of the rampant model-fitting exercises that have turned cosmology into an unscientific wasteland. Dark matter never predicts; it can only postdict. As a scientific concept, DM has been falsified by the relentless negative empirical evidence – it is not part of empirical reality.

No matter. For its cult of believers, DM must exist because it provides a kind of magic fairy dust that can be sprinkled as needed wherever their extensive modeling inadequacies (on the scale of galaxies, galaxy clusters and the CMB) become apparent in some glaring discrepancy with observations or, as in this case, a rival model that actually makes successful predictions. When that happens, their math is just massaged, as in this example, to fit the new requirements. That is not science – it is mathematicism and it has made an unscientific mess of modern cosmology.

If that paper is sound, the deeper level is dark matter.

The concept of dark matter being the underlying explanation for MOND is also nothing new. Sabine Hossenfelder wrote a few papers about a superfluid dark matter model she claimed was able to reproduce MOND effects:

https://arxiv.org/abs/1809.00840

https://arxiv.org/abs/2003.07324

https://arxiv.org/abs/2201.07282

I have spent a lot of time trying to understand the observed MOND phenomenology in terms of dark matter. Very long story very short, none of it is satisfactory.
