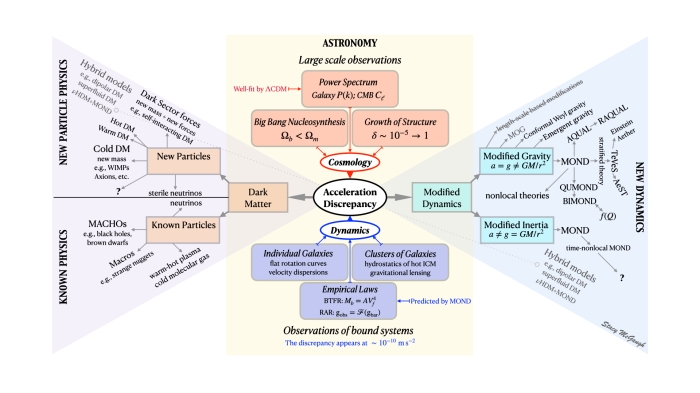

Kuhn noted that as paradigms reach their breaking point, there is a divergence of opinions between scientists about what the important evidence is, or what even counts as evidence. This has come to pass in the debate over whether dark matter or modified gravity is a better interpretation of the acceleration discrepancy problem. It sometimes feels like we’re speaking about different topics in a different language. That’s why I split the diagram version of the dark matter tree as I did:

Astroparticle physicists seem to be well-informed about the cosmological evidence (top) and favor solutions in the particle sector (left). As more of these people entered the field in the ’00s and began attending conferences where we overlapped, I recognized gaping holes in their knowledge about the dynamical evidence (bottom) and related hypotheses (right). This was part of my motivation to develop an evidence-based course¹ on dark matter, to try to fill in the gaps in essential knowledge that were obviously being missed in the typical graduate physics curriculum. Though the course is popular on my campus, not everyone in the field has the opportunity to take it. It seems that the chasm has continued to grow, though not for lack of attempts at communication.

Part of the problem is a phase difference: many of the questions that concern astroparticle physicists (structure formation is a big one) were addressed 20 years ago in MOND. There is also a difference in texture: dark matter rarely predicts things but always explains them, even if it doesn’t. MOND often nails some predictions but leaves other things unexplained – just a complete blank. So they’re asking questions that are either way behind the curve or as-yet unanswerable. Progress rarely follows a smooth progression in linear time.

I have become aware of a common construction among many advocates of dark matter to criticize “MOND people.” First, I don’t know what a “MOND person” is. I am a scientist who works on a number of topics, among them both dark matter and MOND. I imagine the latter makes me a “MOND person,” though I still don’t really know what that means. It seems to be a generic straw man. Users of this term consistently paint such a luridly ridiculous picture of what MOND people do or do not do that I don’t recognize it as a legitimate depiction of myself or of any of the people I’ve met who work on MOND. I am left to wonder, who are these “MOND people”? They sound very bad. Are there any here in the room with us?

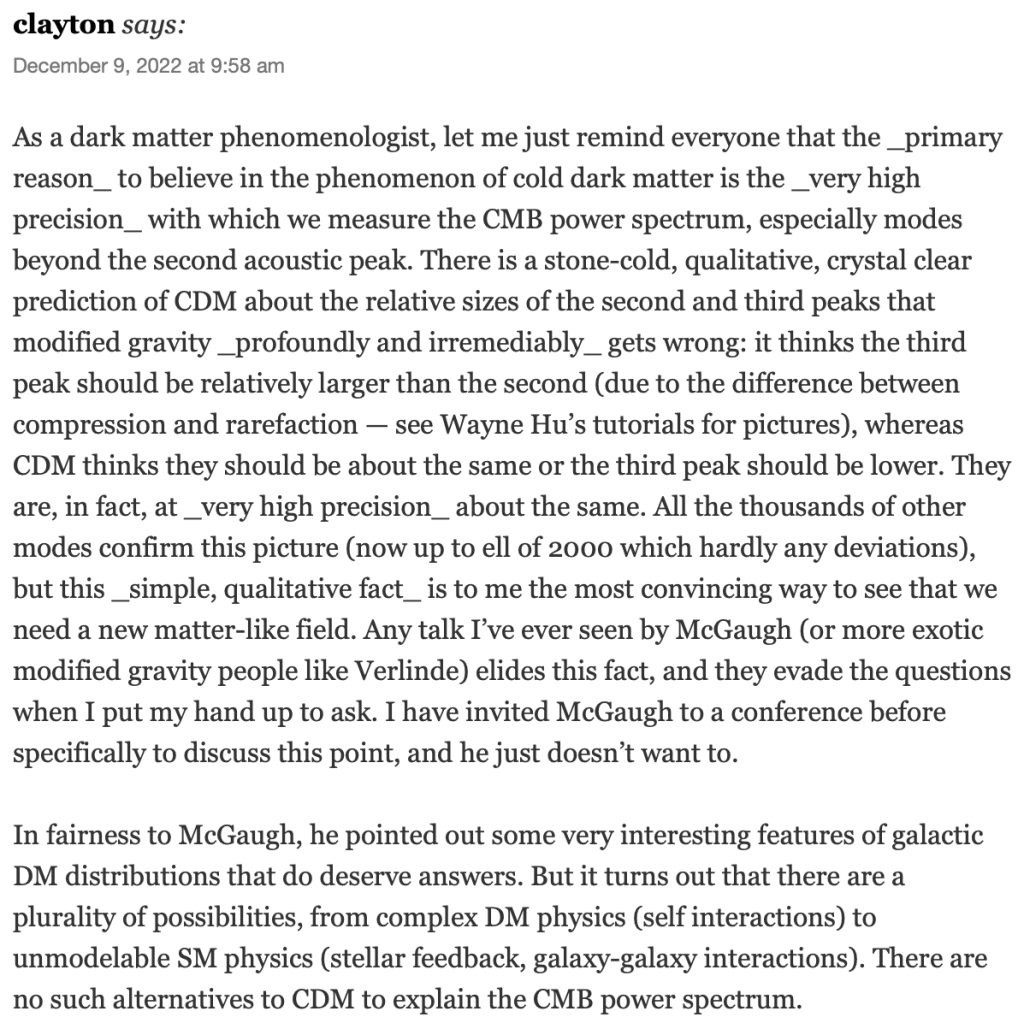

I am under no illusions as to what these people likely say when I am out of ear shot. Someone recently pointed me to a comment on Peter Woit’s blog that I would not have come across on my own. I am specifically named. Here is a screen shot:

This concisely pinpoints where the field² is at, both right and wrong. Let’s break it down.

let me just remind everyone that the primary reason to believe in the phenomenon of cold dark matter is the very high precision with which we measure the CMB power spectrum, especially modes beyond the second acoustic peak

This is correct, but it is not the original reason to believe in CDM. The history of the subject matters, as we already believed in CDM quite firmly before any modes of the acoustic power spectrum of the CMB were measured. The original reasons to believe in cold dark matter were (1) that the measured, gravitating mass density exceeds the mass density of baryons as indicated by BBN, so there is stuff out there with mass that is not normal matter, and (2) large scale structure has grown by a factor of $10^5$ from the very smooth initial condition indicated initially by the nondetection of fluctuations in the CMB, while normal matter (with normal gravity) can only get us a factor of $10^3$ (there were upper limits excluding this before there was a detection). Structure formation additionally imposes the requirement that whatever the dark matter is moves slowly (hence “cold”) and does not interact via electromagnetism in order to evade making too big an impact on the fluctuations in the CMB (hence the need, again, for something non-baryonic).
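As a rough illustration of argument (2), here is a minimal sketch of where a factor of order $10^3$ comes from. It assumes the textbook matter-dominated (Einstein-de Sitter) approximation in which linear density perturbations grow in proportion to the scale factor, and a round recombination redshift of 1100. Both are assumptions made for this sketch, not numbers taken from the discussion above, and the function and variable names are hypothetical.

```python
# Illustrative only: linear growth of density perturbations in a
# matter-dominated (Einstein-de Sitter) universe, where the perturbation
# amplitude grows in proportion to the scale factor a = 1 / (1 + z).

Z_RECOMBINATION = 1100.0  # assumed round value for the redshift of the CMB


def linear_growth_since(z_start: float, z_end: float = 0.0) -> float:
    """Growth factor of a linear perturbation between two redshifts."""
    return (1.0 + z_start) / (1.0 + z_end)


# Baryons are coupled to the photons until recombination, so in this
# simplified picture they can only start growing at Z_RECOMBINATION.
baryon_only_growth = linear_growth_since(Z_RECOMBINATION)

required_growth = 1e5  # growth factor quoted in the text
shortfall = required_growth / baryon_only_growth

print(f"growth available since recombination: ~{baryon_only_growth:.0f}")
print(f"shortfall relative to the required 1e5: ~{shortfall:.0f}x")
```

The point of the sketch is only the order of magnitude: with normal gravity and normal matter, there is a gap of roughly two orders of magnitude to make up.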

When cold dark matter became accepted as the dominant paradigm, fluctuations in the CMB had not yet been measured. The absence of observable fluctuations at a larger level sufficed to indicate the need for CDM. This, together with $\Omega_m > \Omega_b$ from BBN (which seemed the better of the two arguments at the time), sufficed to convince me, along with most everyone else who was interested in the problem, that the answer had³ to be CDM.

This all happened before the first fluctuations were observed by COBE in 1992. By that time, we already believed firmly in CDM. The COBE observations caused initial confusion and great consternation – it was too much! We actually had a prediction from then-standard SCDM, and it had predicted an even lower level of fluctuations than what COBE observed. This did not cause us (including me) to doubt CDM (though there was one suggestion that it might be due to self-interacting dark matter); it seemed a mere puzzle to accommodate, not an anomaly. And accommodate it we did: the power in the large scale fluctuations observed by COBE is part of how we got LCDM, albeit only a modest part. A lot of younger scientists seem to have been taught that the power spectrum is some incredibly successful prediction of CDM when in fact it has surprised us at nearly every turn.

As I’ve related here before, it wasn’t until the end of the century that CMB observations became precise enough to provide a test that might distinguish between CDM and MOND. That test initially came out in favor of MOND – or at least in favor of the absence of dark matter: No-CDM, which I had suggested as a proxy for MOND. Cosmologists and dark matter advocates consistently omit this part of the history of the subject.

I had hoped that when MOND cropped up in their data, cosmologists would experience the same surprise and doubt and reevaluation that I had experienced when it cropped up in my own. Instead, they went into denial, ignoring the successful prediction of the first-to-second peak amplitude ratio, or, worse, making up stories that it hadn’t happened. Indeed, the amplitude of the second peak was so surprising that the first paper to measure it omitted mention of it entirely. Just didn’t talk about it, let alone admit that “Gee, this crazy prediction came true!” as I had with MOND in LSB galaxies. Consequently, I decided that it was better to spend my time working on topics where progress could be made. This is why most of my work on the CMB predates “modes beyond the second peak” just as our strong belief in CDM also predated that evidence. Indeed, communal belief in CDM was undimmed when the modes defining the second peak were observed, despite the No-CDM proxy for MOND being the only hypothesis to correctly predict it quantitatively a priori.

That said, I agree with clayton’s assessment that

CDM thinks [the second and third peak] should be about the same

That this is the best evidence now is both correct and a much weaker argument than it is made out to be. It sounds really strong, because a formal fit to the CMB data requires a dark matter component at extremely high confidence – something approaching 100 sigma. This analysis assumes that dark matter exists. It does not contemplate that something else might cause the same effect, so all it really does, yet again, is demonstrate that General Relativity cannot explain cosmology when restricted to the material entities we concretely know to exist.

Given the timing, the third peak was not a strong element of my original prediction, as we did not yet have either a first or second peak. We hadn’t yet clearly observed peaks at all, so what I was doing was pretty far-sighted, but I wasn’t thinking that far ahead. However, the natural prediction for the No-CDM picture I was considering was indeed that the third peak should be lower than the second, as I’ve discussed before.

In contrast, in CDM, the acoustic power spectrum of the CMB can do a wide variety of things:

Given the diversity of possibilities illustrated here, there was never any doubt that a model could be fit to the data, provided that oscillations were observed as expected in any of the theories under consideration here. Consequently, I do not find fits to the data, though excellent, to be anywhere near as impressive as commonly portrayed. What does impress me is consistency with independent data.

What impresses me even more are a priori predictions. These are the gold standard of the scientific method. That’s why I worked my younger self’s tail off to make a prediction for the second peak before the data came out. In order to make a clean test, you need to know what both theories predict, so I did this for both LCDM and No-CDM. Here are the peak ratios predicted before there were data to constrain them, together with the data that came after:

The left hand panel shows the predicted amplitude ratio of the first-to-second peak, A1:2. This is the primary quantity that I predicted for both paradigms. There is a clear distinction between the predicted bands. I was not unique in my prediction for LCDM; the same thing can be seen in other contemporaneous models. All contemporaneous models. I was the only one who was not surprised by the data when they came in, as I was the only one who had considered the model that got the prediction right: No-CDM.

The same No-CDM model fails to correctly predict the second-to-third peak ratio, A2:3. It is, in fact, way off, while LCDM is consistent with A2:3, just as Clayton says. This is a strong argument against No-CDM, because No-CDM makes a clear and unequivocal prediction that it gets wrong. Clayton calls this

a stone-cold, qualitative, crystal clear prediction of CDM

which is true. It is also qualitative, so I call it weak sauce. LCDM could be made to fit a very large range of A2:3, but it had already got A1:2 wrong. We had to adjust the baryon density outside the allowed range in order to make it consistent with the CMB data. The generous upper limit that LCDM might conceivably have predicted in advance of the CMB data was A1:2 < 2.06, which is still clearly less than observed. For the first years of the century, the attitude was that BBN had been close, but not quite right – preference being given to the value needed to fit the CMB. Nowadays, BBN and the CMB are said to be in great concordance, but this is only true if one restricts oneself to deuterium measurements obtained after the “right” answer was known from the CMB. Prior to that, practically all of the measurements for all of the important isotopes of the light elements, deuterium, helium, and lithium, all concurred that the baryon density $\Omega_b h^2 < 0.02$, with the consensus value being $\Omega_b h^2 = 0.0125 \pm 0.0005$. This is barely half the value subsequently required to fit the CMB ($\Omega_b h^2 = 0.0224 \pm 0.0001$). But what’s a factor of two among cosmologists? (In this case, 4 sigma.)

Taking the data at face value, the original prediction of LCDM was falsified by the second peak. But, no problem, we can move the goal posts, in this case by increasing the baryon density. The successful prediction of the third peak only comes after the goal posts have been moved to accommodate the second peak. Citing only the comparable size of third peak to the second while not acknowledging that the second was too small elides the critical fact that No-CDM got something right, a priori, that LCDM did not. No-CDM failed only after LCDM had already failed. The difference is that I acknowledge its failure while cosmologists elide this inconvenient detail. Perhaps the second peak amplitude is a fluke, but it was a unique prediction that was exactly nailed and remains true in all subsequent data. That’s a pretty remarkable fluke⁴.

LCDM wins ugly here by virtue of its flexibility. It has greater freedom to fit the data – any of the models in the figure of Dodelson & Hu will do. In contrast, No-CDM is the single blue line in my figure above, and nothing else. Plausible variations in the baryon density make hardly any difference: A1:2 has to have the value that was subsequently observed, and no other. It passed that test with flying colors. It flunked the subsequent test posed by A2:3. For LCDM this isn’t even a test, it is an exercise in fitting the data with a model that has enough parameters⁵ to do so.

There were a number of years at the beginning of the century during which the No-CDM prediction for the A1:2 was repeatedly confirmed by multiple independent experiments, but before the third peak was convincingly detected. During this time, cosmologists exhibited the same attitude that Clayton displays here: the answer has to be CDM! This warrants mention because the evidence Clayton cites did not yet exist. Clearly the as-yet unobserved third peak was not the deciding factor.

In those days, when No-CDM was the only correct a priori prediction, I would point out to cosmologists that it had got A1:2 right when I got the chance (which was rarely: I was invited to plenty of conferences in those days, but none on the CMB). The typical reaction was outright denial⁶, though sometimes it warranted a dismissive “That’s not a MOND prediction.” The latter is a fair criticism. No-CDM is just General Relativity without CDM. It represented MOND as a proxy under the ansatz that MOND effects had not yet manifested in a way that affected the CMB. I expected that this ansatz would fail at some point, and discussed some of the ways that this should happen. One that’s relevant today is that galaxies form early in MOND, so reionization happens early, and the amplitude of gravitational lensing effects is amplified. There is evidence for both of these now. What I did not anticipate was a departure from a damping spectrum around L=600 (between the second and third peaks). That’s a clear deviation from the prediction, which falsifies the ansatz but not MOND itself. After all, they were correct in noting that this wasn’t a MOND prediction per se, just a proxy. MOND, like Newtonian dynamics before it, is relativity adjacent, but not itself a relativistic theory. Neither can explain the CMB on their own. If you find that an unsatisfactory answer, imagine how I feel.

The same people who complained then that No-CDM wasn’t a real MOND prediction now want to hold MOND to the No-CDM predicted power spectrum and nothing else. First it was the second peak isn’t a real MOND prediction! then when the third peak was observed it became no way MOND can do this! This isn’t just hypocritical, it is bad science. The obvious way to proceed would be to build on the theory that had the greater, if incomplete, predictive success. Instead, the reaction has consistently been to cherry-pick the subset of facts that precludes the need for serious rethinking.

This brings us to sociology, so let’s examine some more of what Clayton has to say:

Any talk I’ve ever seen by McGaugh (or more exotic modified gravity people like Verlinde) elides this fact, and they evade the questions when I put my hand up to ask. I have invited McGaugh to a conference before specifically to discuss this point, and he just doesn’t want to.

There is so much to unpack here, I hardly know where to start. By saying I “elide this fact” about the qualitative equality of the second and third peak, Clayton is basically accusing me of lying by omission. This is pretty rich coming from a community that consistently elides the history I relate above, and never addresses the question raised by MOND’s predictive power.

Intellectual honesty is very important to me – being honest that MOND predicted what I saw in low surface brightness galaxies where my own prediction was wrong is what got me into this mess in the first place. It would have been vastly more convenient to pretend that I never heard of MOND (at first I hadn’t⁷) and act like that never happened. That would be a lie of omission. It would be a large lie, a lie that denies an important aspect of how the world works (what we’re supposed to uncover through science), the sort of lie that cleric Paul Gerhardt may have had in mind when he said

When a man lies, he murders some part of the world.

Paul Gerhardt

Clayton is, in essence, accusing me of exactly that by failing to mention the CMB in talks he has seen. That might be true – I give a lot of talks. He hasn’t been to most of them, and I usually talk about things I’ve done more recently than 2004. I’ve commented explicitly on this complaint before –

There’s only so much you can address in a half hour talk. [This is a recurring problem. No matter what I say, there always seems to be someone who asks “why didn’t you address X?” where X is usually that person’s pet topic. Usually I could do so, but not in the time allotted.]

– so you may appreciate my exasperation at being accused of dishonesty by someone whose complaint is so predictable that I’ve complained before about people who make this complaint. I’m only human – I can’t cover all subjects for all audiences every time all the time. Moreover, I do tend to choose to discuss subjects that may be news to an audience, not simply reprise the greatest hits they want to hear. Clayton obviously knows about the third peak; he doesn’t need to hear about it from me. This is the scientific equivalent of shouting Freebird! at a concert.

It isn’t like I haven’t talked about it. I have been rigorously honest about the CMB, and certainly have not omitted mention of the third peak. Here is a comment from February 2003 when the third peak was only tentatively detected:

Page et al. (2003) do not offer a WMAP measurement of the third peak. They do quote a compilation of other experiments by Wang et al. (2003). Taking this number at face value, the second to third peak amplitude ratio is A2:3 = 1.03 +/- 0.20. The LCDM expectation value for this quantity was 1.1, while the No-CDM expectation was 1.9. By this measure, LCDM is clearly preferable, in contradiction to the better measured first-to-second peak ratio.

Or here, in March 2006:

the Boomerang data and the last credible point in the 3-year WMAP data both have power that is clearly in excess of the no-CDM prediction. The most natural interpretation of this observation is forcing by a mass component that does not interact with photons, such as non-baryonic cold dark matter.
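To put the February 2003 numbers quoted above in perspective, the following minimal sketch converts them into a tension in units of the quoted uncertainty. It assumes a symmetric Gaussian error on the measured ratio and ignores any uncertainty in the model expectation values, which is a simplification introduced for this sketch rather than something stated in the quoted comment. The function and variable names are hypothetical.

```python
# Illustrative only: how far each expectation value for the
# second-to-third peak ratio sits from the quoted measurement,
# in units of the quoted 1-sigma uncertainty.

A23_MEASURED = 1.03  # compilation value quoted in the 2003 comment
A23_ERROR = 0.20     # quoted uncertainty, treated here as Gaussian

EXPECTATIONS = {"LCDM": 1.1, "No-CDM": 1.9}  # expectation values quoted above


def tension_in_sigma(measured: float, error: float, predicted: float) -> float:
    """Signed difference (prediction minus measurement) in units of the error."""
    return (predicted - measured) / error


tensions = {
    name: tension_in_sigma(A23_MEASURED, A23_ERROR, value)
    for name, value in EXPECTATIONS.items()
}

for name, sigma in tensions.items():
    print(f"{name}: {sigma:+.2f} sigma from the measured A2:3")
```

Under these assumptions the LCDM expectation lies well within the error bar while the No-CDM expectation is off by more than four times the quoted uncertainty, consistent with the conclusion in the quoted comment that LCDM is clearly preferable by this measure.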

There are lots like this, including my review for CJP and this talk given at KITP where I had been asked to explicitly take the side of MOND in a debate format for an audience of largely particle physicists. The CMB, including the third peak, appears on the fourth slide, which is right up front, not being elided at all. In the first slide, I tried to encapsulate the attitudes of both sides:

I did the same at a meeting in Stony Brook where I got a weird vibe from the audience; they seemed to think I was lying about the history of the second peak that I recount above. It will be hard to agree on an interpretation if we can’t agree on documented historical facts.

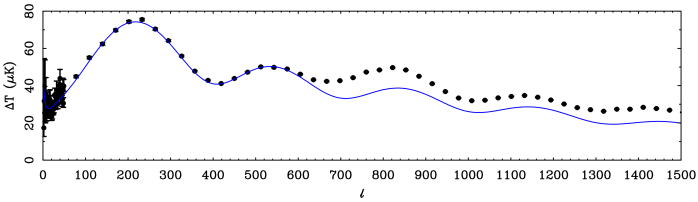

More recently, this image appears on slide 9 of this lecture from the cosmology course I just taught (Fall 2022):

I recognize this slide from talks I’ve given over the past five plus years; this class is the most recent place I’ve used it, not the first. On some occasions I wrote “The 3rd peak is the best evidence for CDM.” I do not recall which all talks I used this in; many of them were likely colloquia for physics departments where one has more time to cover things than in a typical conference talk. Regardless, these apparently were not the talks that Clayton attended. Rather than it being the case that I never address this subject, the more conservative interpretation of the experience he relates would be that I happened not to address it in the small subset of talks that he happened to attend.

But do go off, dude: tell everyone how I never address this issue and evade questions about it.

I have been extraordinarily patient with this sort of thing, but I confess to a great deal of exasperation at the perpetual whataboutism that many scientists engage in. It is used reflexively to shut down discussion of alternatives: dark matter has to be right for this reason (here the CMB); nothing else matters (galaxy dynamics), so we should forbid discussion of MOND. Even if dark matter proves to be correct, the CMB is being used as an excuse to not address the question of the century: why does MOND get so many predictions right? Any scientist with a decent physical intuition who takes the time to rub two brain cells together in contemplation of this question will realize that there is something important going on that simply invoking dark matter does not address.

In fairness to McGaugh, he pointed out some very interesting features of galactic DM distributions that do deserve answers. But it turns out that there are a plurality of possibilities, from complex DM physics (self interactions) to unmodelable SM physics (stellar feedback, galaxy-galaxy interactions). There are no such alternatives to CDM to explain the CMB power spectrum.

Thanks. This is nice, and why I say it would be easier to just pretend to never have heard of MOND. Indeed, this succinctly describes the trajectory I was on before I became aware of MOND. I would prefer to be recognized for my own work – of which there is plenty – than an association with a theory that is not my own – an association that is born of honestly reporting a surprising observation. I find my reception to be more favorable if I just talk about the data, but what is the point of taking data if we don’t test the hypotheses?

I have gone to great extremes to consider all the possibilities. There is not a plurality of viable possibilities; most of these things do not work. The specific ideas that are cited here are known not to work. SIDM appears to work because it has more free parameters than are required to describe the data. This is a common failing of dark matter models that simply fit some functional form to observed rotation curves. They can be made to fit the data, but they cannot be used to predict the way MOND can.

Feedback is even worse. Never mind the details of specific feedback models, and think about what is being said here: the observations are to be explained by “unmodelable [standard model] physics.” This is a way of saying that dark matter claims to explain the phenomena while declining to make a prediction. Don’t worry – it’ll work out! How can that be considered better than or even equivalent to MOND when many of the problems we invoke feedback to solve are caused by the predictions of MOND coming true? We’re just invoking unmodelable physics as a deus ex machina to make dark matter models look like something they are not. Are physicists straight-up asserting that it is better to have a theory that is unmodelable than one that makes predictions that come true?

Returning to the CMB, are there no “alternatives to CDM to explain the CMB power spectrum”? I certainly do not know how to explain the third peak with the No-CDM ansatz. For that we need a relativistic theory, like Bekenstein’s TeVeS. This initially seemed promising, as it solved the long-standing problem of gravitational lensing in MOND. However, it quickly became clear that it did not work for the CMB. Nevertheless, I learned from this that there could be more to the CMB oscillations than allowed by the simple No-CDM ansatz. The scalar field (an entity theorists love to introduce) in TeVeS-like theories could play a role analogous to cold dark matter in the oscillation equations. That means that what I thought was a killer argument against MOND – the exact same argument Clayton is making – is not as absolute as I had thought.

Writing down a new relativistic theory is not trivial. It is not what I do. I am an observational astronomer. I only play at theory when I can’t get telescope time.

So in the mid-00’s, I decided to let theorists do theory and started the first steps in what would ultimately become the SPARC database (it took a decade and a lot of effort by Jim Schombert and Federico Lelli in addition to myself). On the theoretical side, it also took a long time to make progress because it is a hard problem. Thanks to work by Skordis & Zlosnik on a theory they [now] call AeST⁸, it is possible to fit the acoustic power spectrum of the CMB:

This fit is indistinguishable from that of LCDM.

I consider this to be a demonstration, not necessarily the last word on the correct theory, but hopefully an iteration towards one. The point here is that it is possible to fit the CMB. That’s all that matters for our current discussion: contrary to the steady insistence of cosmologists over the past 15 years, CDM is not the only way to fit the CMB. There may be other possibilities that we have yet to figure out. Perhaps even a plurality of possibilities. This is hard work and to make progress we need a critical mass of people contributing to the effort, not shouting rubbish from the peanut gallery.

As I’ve done before, I like to take the language used in favor of dark matter, and see if it also fits when I put on a MOND hat:

As a galaxy dynamicist, let me just remind everyone that the primary reason to believe in MOND as a physical theory and not some curious dark matter phenomenology is the very high precision with which MOND predicts, a priori, the dynamics of low-acceleration systems, especially low surface brightness galaxies whose kinematics were practically unknown at the time of its inception. There is a stone-cold, quantitative, crystal clear prediction of MOND that the kinematics of galaxies follows uniquely from their observed baryon distributions. This is something CDM profoundly and irremediably gets wrong: it predicts that the dark matter halo should have a central cusp⁹ that is not observed, and makes no prediction at all for the baryon distribution, let alone does it account for the detailed correspondence between bumps and wiggles in the baryon distribution and those in rotation curves. This is observed over and over again in hundreds upon hundreds of galaxies, each of which has its own unique mass distribution so that each and every individual case provides a distinct, independent test of the hypothesized force law. In contrast, CDM does not even attempt a comparable prediction: rather than enabling the real-world application to predict that this specific galaxy will have this particular rotation curve, it can only refer to the statistical properties of galaxy-like objects formed in numerical simulations that resemble real galaxies only in the abstract, and can never be used to directly predict the kinematics of a real galaxy in advance of the observation – an ability that has been demonstrated repeatedly by MOND. The simple fact that the simple formula of MOND is so repeatably correct in mapping what we see to what we get is to me the most convincing way to see that we need a grander theory that contains MOND and exactly MOND in the low acceleration limit, irrespective of the physical mechanism by which this is achieved.

That is stronger language than I would ordinarily permit myself. I do so entirely to show the danger of being so darn sure. I actually agree with clayton’s perspective in his quote; I’m just showing what it looks like if we adopt the same attitude with a different perspective. The problems pointed out for each theory are genuine, and the supposed solutions are not obviously viable (in either case). Sometimes I feel like we’re up the proverbial creek without a paddle. I do not know what the right answer is, and you should be skeptical of anyone who is sure that he does. Being sure is the sure road to stagnation.

¹ It may surprise some advocates of dark matter that I barely touch on MOND in this course, only getting to it at the end of the semester, if at all. It really is evidence-based, with a focus on the dynamical evidence as there is a lot more to this than seems to be appreciated by most physicists*. We also teach a course on cosmology, where students get the material that physicists seem to be more familiar with.

*I once had a colleague who was in a physics department ask how to deal with opposition to developing a course on galaxy dynamics. Apparently, some of the physicists there thought it was not a rigorous subject worthy of an entire semester course – an attitude that is all too common. I suggested that she pointedly drop the textbook of Binney & Tremaine on their desks. She reported back that this technique proved effective.

² I do not know who clayton is; that screen name does not suffice as an identifier. He claims to have been in contact with me at some point, which is certainly possible: I talk to a lot of people about these issues. He is welcome to contact me again, though he may wish to consider opening with an apology.

³ One of the hardest realizations I ever had as a scientist was that both of the reasons (1) and (2) that I believed to absolutely require CDM assumed that gravity was normal. If one drops that assumption, as one must to contemplate MOND, then these reasons don’t require CDM so much as they highlight that something is very wrong with the universe. That something could be MOND instead of CDM, both of which are in the category of who ordered that?

⁴ In the early days (late ’90s) when I first started asking why MOND gets any predictions right, one of the people I asked was Joe Silk. He dismissed the rotation curve fits of MOND as a fluke. There were 80 galaxies that had been fit at the time, which seemed like a lot of flukes. I mention this because one of the persistent myths of the subject is that MOND is somehow guaranteed to magically fit rotation curves. Erwin de Blok and I explicitly showed that this was not true in a 1998 paper.

⁵ I sometimes hear cosmologists speak in awe of the thousands of observed CMB modes that are fit by half a dozen LCDM parameters. This is impressive, but we’re fitting a damped and driven oscillation – those thousands of modes are not all physically independent. Moreover, as can be seen in the figure from Dodelson & Hu, some free parameters provide more flexibility than others: there is plenty of flexibility in a model with dark matter to fit the CMB data. Only with the Planck data do minor tensions arise, the reaction to which is generally to add more free parameters, like decoupling the primordial helium abundance from that of deuterium, which is anathema to standard BBN so is sometimes portrayed as exciting, potentially new physics.

For some reason, I never hear the same people speak in equal awe of the hundreds of galaxy rotation curves that can be fit by MOND with a universal acceleration scale and a single physical free parameter, the mass-to-light ratio. Such fits are over-constrained, and every single galaxy is an independent test. Indeed, MOND can predict rotation curves parameter-free in cases where gas dominates so that the stellar mass-to-light ratio is irrelevant.

How should we weigh the relative merit of these very different lines of evidence?
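To make concrete what "a universal acceleration scale and a single physical free parameter" can look like in practice, here is a minimal sketch of a MOND-style rotation curve. It makes several loud assumptions that are not taken from the text above: the baryons are treated as a point mass (real fits use the full observed mass distribution), the acceleration scale is set to the commonly quoted value of about 1.2e-10 m/s², and one commonly used interpolation function is adopted. All function and variable names are hypothetical.

```python
import math

# Illustrative only. Physical constants in SI units.
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10      # assumed MOND acceleration scale, m s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # kiloparsec, m


def baryonic_mass_msun(luminosity_lsun, mass_to_light, gas_mass_msun=0.0):
    """Stars (luminosity times mass-to-light ratio) plus gas, in solar masses."""
    return mass_to_light * luminosity_lsun + gas_mass_msun


def mond_acceleration(g_bar):
    """Map the Newtonian baryonic acceleration to the total acceleration.

    Uses one commonly adopted interpolation function (an assumption here):
    g = g_bar / (1 - exp(-sqrt(g_bar / a0))).
    """
    return g_bar / (1.0 - math.exp(-math.sqrt(g_bar / A0)))


def circular_speed_kms(mass_msun, radius_kpc):
    """Circular speed around a point mass (a crude stand-in for a galaxy)."""
    r = radius_kpc * KPC
    g_bar = G * mass_msun * M_SUN / r**2
    return math.sqrt(mond_acceleration(g_bar) * r) / 1e3


def asymptotic_speed_kms(mass_msun):
    """Low-acceleration limit, where V^4 = G * M * a0."""
    return (G * mass_msun * M_SUN * A0) ** 0.25 / 1e3


# Hypothetical galaxy: 5e9 solar luminosities of stars, 5e9 solar masses of gas.
mass = baryonic_mass_msun(5e9, mass_to_light=1.0, gas_mass_msun=5e9)
for radius in (5, 20, 50, 100, 200):
    print(f"r = {radius:>3} kpc: V = {circular_speed_kms(mass, radius):.1f} km/s")
print(f"asymptotic V = {asymptotic_speed_kms(mass):.1f} km/s")
```

In this sketch the only adjustable quantity is `mass_to_light`. When the gas term dominates the mass budget, that parameter hardly matters and the predicted curve is effectively fixed by the observed baryons.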

⁶ On a number of memorable occasions, people shouted “No you didn’t!” On a smaller number of those occasions (exactly two), they bothered to look up the prediction in the literature and then wrote to apologize and agree that I had indeed predicted that.

⁷ If you read this paper, part of what you will see is me being confused about how low surface brightness galaxies could adhere so tightly to the Tully-Fisher relation. They should not. In retrospect, one can see that this was a MOND prediction coming true, but at the time I didn’t know about that; all I could see was that the result made no sense in the conventional dark matter picture.

Some while after we published that paper, Bob Sanders, who was at the same institute as my collaborators, related to me that Milgrom had written to him and asked “Do you know these guys?”

8 Initially they had called it RelMOND, or just RMOND. AeST stands for Aether-Scalar-Tensor, and is clearly a step along the lines that Bekenstein made with TeVeS.

In addition to fitting the CMB, AeST retains the virtues of TeVeS in terms of providing a lensing signal consistent with the kinematics. However, it is not obvious that it works in detail – Tobias Mistele has a brand new paper testing it, and it doesn’t look good at extremely low accelerations. With that caveat, it significantly outperforms extant dark matter models.

There is an oft-repeated fallacy that comes up any time a MOND-related theory has a problem: “MOND doesn’t work therefore it has to be dark matter.” This only ever seems to hold when you don’t bother to check what dark matter predicts. In this case, we should but don’t detect the edge of dark matter halos at higher accelerations than where AeST runs into trouble.

9 Another question I’ve posed for over a quarter century now is what would falsify CDM? The first person to give a straight answer to this question was Simon White, who said that cusps in dark matter halos were an ironclad prediction; they had to be there. Many years later, it is clear that they are not, but does anyone still believe this is an ironclad prediction? If it is, then CDM is already falsified. If it is not, then what would be? It seems like the paradigm can fit any surprising result, no matter how unlikely a priori. This is not a strength, it is a weakness. We can, and do, add epicycle upon epicycle to save the phenomenon. This has been my concern for CDM for a long time now: not that it gets some predictions wrong, but that it can apparently never get a prediction so wrong that we can’t patch it up, so we can never come to doubt it if it happens to be wrong.

“A lot of younger scientists seem to have been taught that the power spectrum is some incredibly successful prediction of CDM when in fact it has surprised us at nearly every turn.” – great to have that cleared up, as a 30-year-old! No physics news site I know ever mentioned that. Good to know the scale of the dishonesty we’re dealing with…

There has been a lot of self-gaslighting in the field. First a thing is surprising, then it is doubted, then in time it becomes familiar and everybody knows it: it was known all along!

Or, as Feynman observed, the easiest person to fool is yourself.

I mean, we spent the entire 1990s trying to figure out ways in which the luminosity function could have a faint end as steep as the halo mass function. In the ’00s we gave up. In the teens, we accepted that there had to be a nonlinear stellar mass-halo mass relation; i.e., a rolling fudge factor that maps from one to the other. Now this is widely accepted as natural.

There are a lot of funding reasons to keep milking the dark matter cow.

But the fact remains “that the kinematics of galaxies follows uniquely from their observed baryon distributions”, so dark matter is superfluous at galaxy complexity level, hence dark matter is superfluous at higher complexity levels.

Isn’t that a basic feature of reality, that enormous amounts of information are lost to the passage of time? The memories and physical evidence are a faint, distorted shadow of the events.

Yet it seems one of the assumptions physics takes for granted is that information is never lost, except what falls into black holes. It’s out there on the time dimension. With the right application of mathematical faerie dust we can time travel through wormholes in the fabric of spacetime and find out everything.

I realize the social issues are far greater than just funding. That generations of scientists have burrowed ever deeper into the concepts they were schooled in and that is the totality of their understanding of reality, but what if some of those foundational axioms, that have been handed down over the generations, are simply wrong?

For example, what if there is no tiny thing, no atom, no particle, no string at the very core? What if their properties, weight, density, location, energy, etc, are themselves effects? Nodes in networks, of attraction/repulsion, expansion/consolidation, etc.

Yet it seems by the very nature of the process, anyone looking even a fraction outside the box, even adjusting the math a little, as with MOND, is to be demonized.

Epicycles lasted about two thousand years. One can only wonder how long the current set of assumptions will stand.

I wouldn’t doubt that deeply; the established scientific results from before 1950 are clearly true in their field of application. I think a big problem is that philosophy is nowadays not taught to students of the exact sciences.

With philosophy, on the one hand scientists have advanced means to differentiate between solid philosophical foundation and less solid grounds upon which to ‘advance’ science. On the other hand, science that has solid ground is doubted less once you can validate the grounds yourself.

Yet what is time? Is there some metaphysical dimension in which the events exist and the present is subjective, or is it a dynamic process of change turning future to past, as in tomorrow becomes yesterday, because the earth turns?

If it is the second, then there is no dimension of time, because the past is consumed by the present, to inform and drive it. Causality and conservation of energy. Cause becomes effect.

Is our current understanding because, as mobile organisms, this sentient interface our bodies have with their situation functions as a sequence of perceptions, in order to navigate, that our experience of time is as the point of the present seems to move past to future, similar to how our experience of the sky is the sun and stars move east to west?

If time is this measure and effect of activity causing change, then it is more like temperature, pressure, color and sound, than space. Time is frequency, events are amplitude.

It does solve various philosophical issues as well, such as determinism, given that the act of determination can only occur as the present, no matter how predictable the outcome.

Or why time is asymmetric, given action is inertial. The earth only turns one direction.

Or that different clocks can run at different rates simply because they are separate actions. Everything doesn’t march to the beat of the same drummer. It is more a function of culture, using the same languages, rules and measures, that we would assume otherwise.

As for presentism, it’s the energy conserved, not the information. That different events will be observed in different order from different locations is no more consequential than seeing the moon as it was a moment ago, simultaneous with seeing stars as they were years ago. That the information changes is time.

I could go on, but that’s enough to elicit either a logical response, or denunciation.

We can judge scientific ideas very well by experimentation, philosophy and sound reasoning. The claim that time wouldn’t pass if “no event at all is happening” is akin to the idea that something perhaps doesn’t exist if nobody is observing it (or interacting with it). This philosophical idea is called antirealism; applied to time, you may translate it as you did: if no event occurs during an interval, who can tell whether the time interval really exists? It cannot be deduced from events, after all.

An interesting turnaround of this idea is “well, since in everyday life we can depend on the independently constant behavior of time, apparently something happens everywhere all the time in a constant universal frequency”. This something then is what defines time. You might imagine it as God rolling out His scroll describing all events in the universe at every moment.

But for scientific talk, General Relativity shows that whatever time is at the smallest details, its emergent behaviour is that of the unique different dimension of 4D Minkowski space.

It can certainly be mapped as a dimension. Humanity has been doing it since the dawn of civilization. It’s called history.

The question is as to what is the physical basis for this dynamic?

Epicycles really were brilliant math for their time and the earth is the center of our frame of reference, so it can be modeled as the center of the frame, but that didn’t make those crystalline spheres physically real.

So is there really this “fabric of spacetime,” that is the physical basis of gravity, like crystalline spheres were supposedly the physical basis of epicycles?

No universal frequency. Just the spectrum of frequencies.

As for antirealism, just because you don’t put your hand on a stove, doesn’t mean it’s not hot. As I said, time is an effect and measure of activity, like temperature, pressure, color and sound. Time is rate, temperature is degree.

“Prior to that, practically all of the measurements for all of the important isotopes of the light elements, deuterium, helium, and lithium, all concurred that the baryon density Ωbh2 < 0.02, with the consensus value being Ωbh2 = 0.0125 ± 0.0005. This is barely half the value subsequently required to fit the CMB (Ωbh2 = 0.0224 ± 0.0001). But what’s a factor of two among cosmologists? (In this case, 4 sigma.)”

Could you elaborate on this? Are the BBN estimates of baryon density only accurate to factors of 2? Is baryon density one of the adjustable parameters in the LCDM that you refer to? Is it possible to improve these BBN estimates to eliminate this adjustable variable or is there a fundamental uncertainty in the BBN theory?

Yes, the baryon density is one of the adjustable parameters. Prior to the CMB observations, it was already well constrained, to better than a factor of 2. More like 20% according to Walker et al (1992). That number (0.0125) was taken as gospel; many cosmological codes had it hard-wired into them because it was thought to be so well known. It was suddenly abandoned when the CMB data emerged. There have been more accurate measurements since then, but I am concerned that they might suffer from the confirmation bias that frequently afflicts the field.

I’ve written extensively on this here – follow the links.

Re: “the MOND people”….weren’t they depicted in Flash Gordon in the ’30’s? =P

Stacy, I read your entire opening without eliding anything, and it just confirms what I knew already… that you are by faaaaarrrr the best one to speak about MOND (and maybe even CDM). No one comes close to understanding & articulating all the nitty-gritty details of the data, warts & all. I especially appreciate your personal & scientific integrity. Would that others had the same.

I think it would be good if Peter Woit & Sean Carroll, et al, took (or at least audited) your course on CDM as a prerequisite to your course on MOND, as it is not their area of expertise.

My apologies to Dr. Woit… he did not (as far as I know) say anything disparaging about you or MOND people. It was “Clayton”, one of his bloggers… I was “not even” wrong!

Right. Woit seems entirely reasonable. It was just this reply by clayton to his blog in which it came up. Woit does find me too acerbic apparently, but that too is a phase difference: I’ve bent over backwards to be patient in explaining this since 1995; even my patience is wearing thin, and I guess it shows. I am not remotely remorseful about this: I am fed up for good reason. It seems like I have to start at ground zero with each and every scientist who has it stuck in their head that it Has to be dark matter. I’ve addressed that, repeatedly, in many publications – are they incapable of doing a literature search?

A strange thing I note in these discussions, that again marks how far behind the curve most particle phenomenologists are, is that they seem to think that the reason to consider MONDian theories is the lack of dark matter detections, or that dark matter is ad hoc. Those are not the motivations at all. The motivation is that MOND has had many a priori predictions come true – predictions that dark matter did not make, does not satisfactorily explain, nor can replicate. See, for example, https://tritonstation.com/2018/09/14/dwarf-satellite-galaxies-iii-the-dwarfs-of-andromeda/ in which I relate the story of how MOND predicts correctly the velocity dispersions of newly discovered dwarf galaxies, including some for which the LCDM interpretation was that these systems must be out of equilibrium because they didn’t make sense in terms of LCDM. This is typical: dark matter can’t make the equivalent prediction, gets it wrong when I try, then we cook up an excuse without confronting the difficult fact that MOND got it right a priori yet again.

MOND is consistently the more successful predictive theory. That dark matter remains undetected follows as an inference from this, not the other way around.

I would imagine that Woit is sympathetic to your position precisely because he has been in exactly the same position by expressing doubt in the widespread unconditional belief in string theory.

If only to illustrate how widespread is the scientific interest in the LCDM v MOND debate, you may be interested in the opinion expressed of “dark matter phenomena” by the “4 gravitons” blogger on 30 Dec-22 – “Some solve this by introducing dark matter, others by modifying gravity, but this is more of a technical difference than it sounds: in order to modify gravity, one must introduce new quantum fields, much the same way one does when introducing dark matter. The only debate is how ‘matter-like’ those fields need to be, but either approach goes beyond the Standard Model.”

Yes and no. We all agree there has to be new physics beyond what we currently know. Dark matter and MOND, as historically defined, are very different hypotheses. Maybe they are two sides of the same coin that will converge on something similar, but that has yet to happen, and it is a false equivalency to depict it as being so right now. MOND could, for example, be a modification of inertia instead of gravity; we don’t know if this is the case, let alone whether it involves introducing new quantum fields. We would like there to be a quantum theory of gravity, and it would be nifty if that included MOND, but we don’t know if it would, nor even if MOND enters at the classical rather than quantum level.

To some extent, this is just a rephrasing of the need for “matter-like” fields to explain the CMB, but that can be a scalar field or some other gawdawful thing we don’t even imagine yet. At any rate, if they are two sides of the same coin, why have I consistently witnessed so much hostility to even talking about MOND?

Yes, “4 gravitons” aka Matthew von Hippel, is making certain assumptions, for example he describes quantum gravity as an “almost-sure thing”. But he is interesting in that he is an assistant professor, particle theorist, more specifically an amplitudeologist, well respected, who seems to represent mainstream thinking in his field.

I really don’t see any reason for that.

Do you need any new fields and/or particles to explain the rigidity of solids?

Why, then, would you need any new fields and/or particles to explain galaxies’ rotational “rigidity” (flatness)?

Since “the kinematics of galaxies follows uniquely from their observed baryon distributions”.

Once again, I think, a naive reductionist mindset is the real problem here.

Bigger problems for the standard model of cosmology? https://arxiv.org/abs/2212.07733

Yes, this is interesting. There are a growing number of indications that the universe is less isotropic and homogeneous than we assume, or than it should be in the standard cosmology. I myself gave little regard to these as recently as a few years ago, but maybe there is something to it? We have to map a lot more of the universe to sort this out.

The most recent Snowmass White Paper said in section VII.H. that one of the solutions to the Hubble tension and the S8 tension is that the universe might not be isotropic or homogeneous, which would require revising virtually every facet of modern physical cosmology today:

https://arxiv.org/abs/2203.06142

I noticed this recent philosophy of science argument against MOND:

https://link.springer.com/article/10.1007/s11229-022-03830-8

The most serious problem with this is the excessive focus on rotation curve successes of MOND. I explain about the Local Group satellite planes 14 minutes into this video:

There is also a detailed section about them in my MOND review (arXiv:2110.06936). The satellite planes provide a clear falsification of LCDM and appear to be understandable in MOND in some detail – see this open access paper (I am giving a talk on it at IAUS379):

https://doi.org/10.1093/mnras/stac722

The satellite planes have been a major issue for LCDM for almost twenty years. The lack of any explanation in this time despite all the vested interests in rescuing LCDM strongly suggests that there is no such explanation. Minor tweaks also do not work. The issue is really that the satellite planes must have formed dissipatively and that makes no sense with collisionless dark matter. The dissipation realistically occurred in the baryons. But since the satellite planes are at a high inclination to the disc, they should consist of tidal dwarf galaxies (TDGs). These are known to form into thin planes (Antennae, Seashell, etc.) But there are two main problems with the TDG idea in Newtonian mechanics, both related to the expected lack of dark matter in them:

1) They would be very fragile to tides from their parent galaxy as that would still have a lot of dark matter, leading to an extreme mass ratio with a purely baryonic dwarf galaxy – this is why TDGs do exist in LCDM cosmological simulations but are very rare and do not make the observed satellite planes more likely (chance alignments are more plausible than TDGs surviving this long)

2) They would have low internal velocity dispersions, contradicting the observations.

Both problems strongly suggest an enhancement to the self-gravity, which is just what MOND offers. Is this the unique explanation? I do not know. But I have never seen any other kind of explanation that makes sense, let alone works in detail.

I have also written about MOND and the CMB in my review. The CMB can be nicely explained in MOND. The idea that it requires cold dark matter is completely wrong and just demonstrates ignorance of the literature.

It is good to see a broader consideration of MOND beyond the rotation curve tests. MOND predicts the formation of galaxies at high redshift:

https://doi.org/10.1046/j.1365-8711.1998.01459.x

We are currently doing cosmological MOND simulations to test these earlier analytic results. The situation appears promising. One of the first figures in the article will be the fit to the CMB in the adopted cosmological model. So much for MOND not fitting the CMB.

Philosophers seem akin to astronomers insofar as there seems to be no result so obvious that someone won’t interpret it to mean the exact opposite. To address a single thing that is wrong with this particular paper, I note the assertion that “the argument from Unexpected Explanatory Success fails since there is a known alternative to MOND.”

There is no satisfactory alternative to MOND, because dark matter can only be used to explain post facto what MOND predicts a priori. If it weren’t for that tiny inconvenience, I would never have written papers about MOND – or the many papers critical of dark matter. If we adopt the attitude of these authors, then we have no reason to believe in heliocentrism, since there is a known alternative in geocentrism.

The article is also filled with statements along the lines that the majority can’t have got it wrong, that it will work out eventually despite no clear evidence, that more complicated ad hoc explanations are just as good as simple explanations, etc. They admit LCDM cannot be falsified as it stands but hope it will eventually become defined well enough that it would become falsifiable. The importance of making clear prior predictions is totally lost on them – they are blissfully unaware of the severe moral hazards associated with a theorist only matching the data once they have it. The idea that MOND is somehow portrayed as the only game in town is ridiculous – MOND researchers compare their model with LCDM all the time. But many of the observations can really only work in MOND as far as we know. In general, the article has a massive amount of disdain for MOND, which in my opinion is due to sociological pressure on the authors to conform to the LCDM myth. Similar philosophical arguments against LCDM do not have such disdain for LCDM but respectfully point out its shortcomings when confronted with real data, without which presumably it might not be such a bad model. The focus on some of the in my view less convincing reasons to favour MOND is also very unhelpful. There are significant falsifications of LCDM like the Hubble tension, which works nicely in MOND (MNRAS, 499, 2845). So the whole article was quite bad. It shows the sort of dodgy logic one would need to conclude that the data favours LCDM.

“the majority can’t have got it wrong”

Actually, scientific progress is often synonymous with showing that the majority is dead wrong, and people showing that the majority is wrong should feel a well-deserved “perverse” pleasure.

Of course – this is one obvious problem with the philosophy article I mentioned. As for what Stacy said about the importance of prior predictions, I tried to be honest in the review about what is an unavoidable prediction of LCDM/MOND and what is something that it can fit due to a lot of flexibility in that area. Such things are not in favour of a model. I usually just mentioned them either to stop people using them that way, or to stop them using it to oppose the theory since they might not have been aware it can fit those data.

There is an interesting quote from the article:

The mere fact that a theory’s empirical implications are insufficiently or not at all understood at a given point therefore does not disqualify it as a scientific alternative in the context of meta-empirical assessment.

Applying this to MOND, that would mean its presently uncertain application to cluster and cosmological scales should not be treated as a serious problem. If only the authors had understood this rather than used the above logic to argue LCDM is all right in galaxies despite being falsified at overwhelming significance.

It really should not be surprising that MOND is good at galaxy level complexity but not at higher complexity levels.

Almost exactly the same happens with quantum mechanics, which is useless for very complex chemical compounds, and totally useless at the biological level of complexity (specimens and higher complexity hierarchies).

Expecting any theory to be good at multiple complexity levels (naive reductionism) is the underlying problem here.

Complexity is a boundary for the predictive/explanatory power of any theory, including Quantum mechanics, general relativity, MOND or anything else.

I find it confusing that LCDM is loosely used both as the name of a theory and of a large variety of loosely related models. Adding more parameters is necessarily changing the associated theory, is it not? Tweaking the IMF to fit JWST observations is not a minor detail.

I appreciate your article; it helps to clarify and understand CMB-related questions.

It is not that easy to keep track of all the questions related to the LCDM vs. MOND dispute and the respective merits in light of available data. Your articles and your blog have contributed much to this – and this in a scientifically honest and as far as possible fair way.

I really can understand that you are frustrated by a (let’s say) less honest and constructive response from many CDM proponents. I hope you don’t become too bitter due to that, especially as I see increasingly more objective discussions and work on the matter.

As I see it, CDM with simple interaction-free matter is by now ruled out, and MOND at least provides strict rules to be met by any (better) model.

Just yesterday I found a sentence in an article* which may help us to proceed here, too:

“We looked at the existing model […], knowing that it does not reproduce what we see, and asked, ‘What assertion are we taking for granted?'” […] “The trick is to look at something that everybody takes to be true but for no good reason.”

* https://www.spacedaily.com/reports/How_do_rocky_planets_really_form_999.html

“The trick is to look at something that everybody takes to be true but for no good reason.”

The Big Something that everybody takes to be true without any scientific justification is the Expanding Universe model. The EU is 100 years old and it has not aged well. It constitutes nevertheless an inviolable axiom in academic cosmology, one that is almost certainly wrong.

The EU assumption is wrong in both its aspects, that the Cosmos we observe is a unified, coherent and simultaneous entity (a Universe), and the cause of the cosmological redshift is some form of recessional velocity. Neither assumption has any empirical basis; they are held true by consensual belief not scientific evidence.

As an intellectual exercise, discarding the EU axiom means that you no longer need to believe in and defend the Inflation and Big Bang events, expanding spacetime, cosmological scale Dark Matter, and Dark Energy. Given that those entities have no empirical basis anyway, that’s a pretty good ROI. Needless to say though, there is no research funding available for non-EU models.

I have to say I’m glad I’m on the outside, looking in, rather than on the inside, thinking the model is god, when even someone like me can see several of the gaping holes in it.

This will go down as another case of epicycles.

Unfortunately, you’d be left with explaining all those redshifts, which is a bigger problem than all of those you mention put together.

The last paragraph of your article also contains an interesting observation concerning progress in science,

“We get a regular dribble of little-but-still-important contributions. But every five years or so, someone comes out with something that creates a seismic shift in the field.”

The number of years may be variable, but, the remark is honest about how the giants upon whose shoulders we stand depend upon paths made from pebbles.

Could dark energy behave like a fluid acting as a drag on visible matter, creating MOND via inertia?

Hongsheng Zhao has considered some dark superfluid models sort of along these lines. There are a lot of ideas to consider (and mostly reject), including those that we haven’t yet thought up.

I couldn’t agree more that much of the problem with scientists dismissing MOND, almost out-of-hand, is their evident lack of knowledge of its clear and unambiguous successes. Stacy wonderfully encapsulates these successes, in the inset section, from the perspective of a “galaxy dynamicist”.

It is not a lack of knowledge that is the problem since that knowledge is readily available. Upton Sinclair’s aphorism applies here: It is hard to make someone understand something when their job depends on not understanding it.

I omit the part about right-handed neutrinos as irrelevant to the discussion here.

if right-handed neutrinos existed could they explains why

Dr. McGaugh,

It breaks my heart to read these kinds of posts. I am painfully aware of analogous situations in mathematics when people engage in “foundational claims.” The first time I posted a comment here, it included a link to Alexander Grothendieck’s criticism of scientism (scientific realism) as being comparable to a religion.

Because I am not qualified to say much, I have refrained from posting here. The recent mentions of Dr. Woit and his blog led me to do otherwise. Please accept my apologies for the off-topic comments.

In this blog, you emphasize the importance of understanding the historical narrative. Your commenter, jeremyjr01, probably thinks I am a jerk for insisting that the narrative surrounding the developments of first-order logic, Goedel’s incompleteness theorem, and computability theory be respected when the term “metamathematics” is involved. I have seen internet gadflies declare that all mathematics before 1933 (Goedel) had been made irrelevant.

My criticism of Dr. Woit (as a mathematician) lies with an analogous declaration that the foundations of mathematics lie with group representations. If you examine the Wikipedia image,

https://en.m.wikipedia.org/wiki/File:Magma_to_group4.svg

you will see that there are numerous algebraic structures which have “less structure” than a group. What makes Dr. Woit’s statement even more objectionable is the interest physicists have also had with respect to Lie algebras in which associativity fails.

Once associativity failure is recognized, the two paths “out of a mere product (magma)” involve either a “distinguished element” with respect to the product or divisibility. In so far as “higher energies” permit particle accelerators to investigate “smaller parts of space,” divisibility may be more relevant to what physicists do. And, I have found papers on “quantum quasigroups.” These are constructed in analogy with a finite geometric structure called a Mendelsohn triple system.

Your story most directly relates to “truth” in the foundations of mathematics through debates over a construct called a “definite description” in so far as these constructs may be thought to introduce names into a corpus.

The classic example involves the planet Neptune. The word ‘Neptune’ had been used in scientific discourse prior to an actual astronomical observation of the planet Neptune. The “logico-philosophical” question then becomes one of how to understand the truth valuation of statements using the word ‘Neptune’ as a planet’s name before it had been known to exist.

When I speak of “math belief” I think of how logicians insist upon logics having a semantic theory because mathematicians communicate with one another through proofs. They do not even care if it is a “pretend” semantics that ultimately diminishes the applications of mathematics. But, that is a criticism from hindsight, and, one must recognize that the historical narrative is such that this research needed to be done.

It does not help matters when people indoctrinated into scientism defend their beliefs with naive rhetorical devices learned from participation in debating clubs. The expression, “Prove me wrong,” undoubtedly belongs to such a person.

It also does not help matters when people declare that one needs to understand the mathematics to understand the physics while conveniently declaring mathematics to be merely a language form. If the semantic theories seemingly required for mathematics are inadequate to applications of mathematics, then invoking mathematics to justify some application is a fallacious shifting of the burden of truth.

With regard to use of the word, ‘Neptune’, there is a logic called positive free logic which would admit the use of names referring to non-existents. David Louis suggested that scientific discourse might be best understood relative to this logic. But, as you might expect, demands for a semantic theory impinge on understanding “truth” as it might apply to a term awaiting valuation.

This need for speculative reasoning within the sciences leaves the sciences open to “map and territory” postmodernism. I am sure your commenter, brodix, does not understand my objections. They lie with the fact that Galileo, apparently, acknowledged that one could “reduce” knowledge to the spellings of words. He chose to value measurement and calculation as the guide to “more meaningful” stories. I have to speak in terms of “meaningfulness” because “truth” is so problematic.

The prior prediction of a phenomenon is a “gold standard” for disqualifying stories. But, as anyone who finds scientism to be objectionable because of an agnostic temperament must conclude, it is not a verification that one’s beliefs are true. Truth is problematic. And, unless it can be decisively distinguished from belief, it is this problematic nature that gives postmodernist arguments their force.

I had been glad to see a mention of inertia in this post. I hope that you and others can say more about inertia in MOND in the future.

My reason for this lies with the reliance of real analysis and related subjects upon the real numbers as an ontology of “points.” William Lawvere has already shown that fragments of infinitesimal calculus can be accomplished without the real numbers as a complete ordered field (admittedly, the logic is not classical). However, there are also mathematicians trying to implement systems that do not rely on an ontology of points.

My questions about inertia relate to this in the sense of how rotational inertia is defined in terms of a lever acting at a point. Yes, this is Newtonian pedagogy. But, while I am uncertain about my knowledge, I do not see how the transformation to Lagrangian principles of action or Hamiltonian energy economics would affect this understanding of a lever at a point.

If there are no points in the sense of an ontology of points, what are the facts of rotational inertia?

Anyway, once again, please accept my apology for the long, off-topic comments. I will try to refrain in the future.


I appreciate the distinction you make between “meaningfulness” and truth. Most scientists don’t seem to have patience for that distinction, nor in many cases, to have contemplated it much at all.

The example of Neptune is obviously relevant, as is the contemporaneous invention of Vulcan. Both were spoken of as a reality before their discovery. Neptune was and Vulcan was not – the need for it of course turning out to be a sign of GR corrections to Newtonian gravity. That we now speak of material dark matter as a real “truth” elides this obvious historical parallel, if only as a reminder that those of us who are ignorant of history are guaranteed to repeat it.


“meaningfulness”

I wish it were my distinction. It actually took me many years to recognize it.

The typical response to paradoxes is “avoidance.” So, Russell’s paradox arises with respect to the Boolean idea of both a proposition and its negation yielding meaningful “sets” combined with unrestricted substitutions relative to variables and universal quantifiers.

The “avoidance” response had been to understand sets as a hierarchical structure (Russell’s type hierarchy or, implicitly, Zermelo’s restrictions on the Boolean sense of a “set”).

Semantic paradoxes led to the philosophy of formal systems with a distinction between an “object language” which could not express the idea, “… is true” and a “metalanguage” in which “… is true” could be stipulated for the object language. Then, “truth” turned out to rely upon a hierarchy too: object language, metalanguage, metametalanguage, metametametalanguage, ….

But, the best way to describe the syntactic restrictions on object language constructs is to understand some expressions as “meaningful” and others as “not meaningful.”

After banging one’s head on the “wall of problematic truth” long enough, the only word left ringing in one’s ears is “meaningfulness.”

It is the residue from philosophy ad nauseam.


It’s the journey, not the destination.

We are on this reductionist quest for some absolute, but everything is contextual, so we get caught up in these endless loops.

Life is like a sentence. The end is just punctuation. What matters is how well you tie the rest of the story together.


Mr. Selmke,

The last paragraph of your article also contains an interesting observation concerning progress in science,

“We get a regular dribble of little-but-still-important contributions. But every five years or so, someone comes out with something that creates a seismic shift in the field.”

The number of years may be variable, but, the remark is honest about how the giants upon whose shoulders we stand depend upon paths made from pebbles.


So, whereas Dr. McGaugh has emphasized the need to know both MOND and Lambda-CDM, working to understand mathematical foundations requires knowing a number of -isms. The big three are logicism, formalism, and intuitionism. Somewhat independently, there are dispositions — one may contrast platonism with constructivism. And, with respect to foundational systems, there is Zermelo-Fraenkel set theory, higher order arithmetic, and category theory.

One -ism which really never makes this list is semi-intuitionism. It is associated with French mathematicians like Lebesgue, Baire, and Borel. It originates with Poincaré’s dispute with logicians and their demand for semantics over epistemology. Poincaré ought to technically be called an intuitionist — but not in the sense of Kant. He simply viewed mathematical induction as a proof method revealing an infinity of “truths” to be beyond the finitary capacity of human discourse. Hence, it is justified by “intuition.”

I had been asking about “inertia” (rather naively). So, I ran some searches to find the article at the link,

https://www.frontiersin.org/articles/10.3389/fspas.2022.950468/full

I do not know if “quantum gravity” is inevitable. But, when I first turned to study mathematics, I knew of Planck’s constant. When I learned of the Lebesgue number lemma,

https://en.m.wikipedia.org/wiki/Lebesgue%27s_number_lemma

it had been obvious to me that this ought to bear some relationship to Planck’s constant as a minimal, “meaningful” length.

The physics article above concerns itself with a quantization of “space” in relation to a modified uncertainty principle. It goes on to discuss failure of the weak equivalence principle. Again, admitting my weaknesses, I believe — but cannot be certain — that this involves our understanding of inertia.

If I am correct in this assumption, can I “prove” that physics is best understood relative to French semi-intuitionism?

I know better than to try.

It will take me a long time to sort out “deformed algebras” and other prerequisites for this article. But, upon my cursory reading, my concerns about the relationship of Lie algebras to simple axioms for a metric appear to be confirmed. Weak equivalence will be recovered relative to Lie algebras.

One of the first references in that link which has an arXiv preprint available is a thought experiment about black holes,

https://arxiv.org/abs/hep-th/9301067

Again, the details are beyond my personal knowledge of physics. But, the paper includes the statement,

“… , one could take our result as an indication of the fact that it is not possible to construct a consistent quantum theory of gravity with pointlike objects.”

Since I have already noted in previous comments how Einstein insisted that general relativity did not constitute a geometrization of physics, this remark would seem to indicate that “folklore” has obfuscated Einstein’s stated position. It would seem that the unification of gravitation with inertia ought not be studied with mathematics requiring an “ontology of points.” But, I say this without knowledge of the “deformed algebras” of the earlier link.

In any case, I would welcome comment from physicists on these papers. Right or wrong, my own deliberations on how “ontologies of points” are presenting a problem for “science” seem vindicated, although not so useful toward new scientific methods.

For what this is worth, Dr. McGaugh, you (and Dr. Hossenfelder) stand out among the physics bloggers precisely because of “intellectual honesty.”

Thank you.


A vortex could be considered a point, but it’s not really an object.

All that can really be said about gravity is it’s centripetal.

Given it seems the actual energy of anything falling into black holes is shot out the poles as quasars, which are like giant lasers and lasers are synchronized lightwaves, it seems that if we let go of our tactile obsession with “objects/particles,” the answer might be buried in there somewhere, in the relationship between centripetal and synchronization.


Hi mls,

I see it like this:

An observation or measurement is at the beginning.

Then comes an idealization of the objects involved and then one develops the mathematics for it.

Always in this order.

Peter Woit sometimes lists projects in which mathematics is understood in a particularly strict way and in which further insights are hoped for.

Behind this is the thought: “Mathematics leads me (to the goal).”

I don’t think much of it.

Simple example: Ohm’s law:

R = U/I (resistance = voltage divided by current).

Resistance is constant and you can vary current and voltage accordingly.

As a mathematical theoretical physicist one will increase the voltage and get a higher current.

Or one will increase the current and get a higher voltage.

From a mathematical point of view, this is absolutely correct.

But from a physical point of view, the second method is nonsense.

It is impossible to increase the current to get a higher voltage.

You must/will always change the voltage and then get a corresponding current.

Never the other way around.

If you read around a bit, you will only find the point-mass model.

That’s fantastic for planets. And the math that goes with it is too – for planets.

Differential calculus also works extremely well for objects > 10µm. This is already a small miracle and not self-evident. But for smaller objects it becomes gradually inadequate, unsuitable.

I consider the Planck units (Planck length, Planck mass,…) as a physical-mathematical gimmick.

We already cannot observe single objects much smaller than 1µm.

In a light microscope we see interference fringes from 1µm.

If you use X-rays you can get higher resolution, but the world becomes more and more transparent.

To see structures of about 10nm you always need macroscopic objects.

If you look at all the double slit experiments, one (I) gets the impression that the objects used are some 100 µm in size, just as big as the generated focus.

You will find scattering experiments suggesting that electrons are smaller than 10^-18m.

But these are calculations that work something like this:

1. you have a function f(x) with f=1 always where the particle is and f=0 otherwise.

2. from this function one builds the Fourier transform F(k)

3. from F(k) one forms the squared magnitude, |F(k)|^2.

4. |F(k)|^2 can be measured finitely.

5. |F(k)|^2 is constant for all k, up to very high values.

6. one concludes backwards that f(x) is a delta function, with a width of 10^-18m.

But the calculation has an ambiguity. One does not get from |F(k)|^2 to F(k) unambiguously.

Taking the squared magnitude is single-valued but not one-to-one.

That is, one gets from step 1 to step 5, but not from step 5 back to step 1.

In other words:

Such a small electron is sufficient for the experiments, but not necessary.

Or, to put it a bit more popularly, you can believe that an electron is that small, but you don’t have to.
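
The phase-loss point can be checked numerically. What follows is only a minimal sketch under assumptions that are not in the comment above: a discrete 64-point grid stands in for x, a single spike stands in for the delta function, and the quadratic phase is a hypothetical choice made purely for illustration. It is not a model of any scattering experiment; it only shows that two very different functions can share the same flat squared magnitude of their Fourier transform.

```python
import cmath
import math

def dft(f):
    """Naive O(N^2) discrete Fourier transform (standard library only)."""
    n = len(f)
    return [sum(f[x] * cmath.exp(-2j * math.pi * k * x / n) for x in range(n))
            for k in range(n)]

def idft(F):
    """Inverse of dft() above, with the 1/N normalization."""
    n = len(F)
    return [sum(F[k] * cmath.exp(2j * math.pi * k * x / n) for k in range(n)) / n
            for x in range(n)]

def power_spectrum(f):
    """Squared magnitude of the transform; the phase is discarded here."""
    return [abs(c) ** 2 for c in dft(f)]

N = 64  # assumed grid size (even, so the phase below is periodic in k)

# Candidate 1: a single spike, the discrete stand-in for a delta function.
spike = [1.0 if x == 0 else 0.0 for x in range(N)]

# Candidate 2: the same unit magnitude for every k, but with a k-dependent
# phase (hypothetical quadratic phase, chosen only for illustration).
phased_F = [cmath.exp(1j * math.pi * k * k / N) for k in range(N)]
spread = idft(phased_F)

p_spike = power_spectrum(spike)
p_spread = power_spectrum(spread)

# Both candidates give the same flat squared magnitude...
same_spectrum = all(abs(a - b) < 1e-9 for a, b in zip(p_spike, p_spread))

# ...but they occupy very different numbers of grid sites.
occupied_spike = sum(1 for v in spike if abs(v) > 1e-6)
occupied_spread = sum(1 for v in spread if abs(v) > 1e-6)

print(same_spectrum, occupied_spike, occupied_spread)
```

The spike sits on one grid site while the second candidate is spread over many, yet the two squared-magnitude spectra agree to within rounding. Whether extra physical constraints would rule out such alternatives in a real experiment is a separate question that this sketch does not address.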

So one will have to think of a new model (different from the point mass model) for the electron.

The third physicist will do this and develop an appropriate mathematics for it.

Stefan


Mr. Freundt,

Thank you for this explanation. Sadly, my problem has been to work backwards from mathematics while respecting its corpus.

The potential example had been a perfect choice. The pedagogy of physics begins with a description of total energy partitioned into two additive factors. Potential is fundamental.

With regard to the mass-point example, my work in foundations makes me acutely aware of where mathematicians have been working on systems which do not assume “points.” So, I struggle with mathematical physics which does, and, must try to understand physics in ways that do not.

And, I very much appreciate the explicit description in which you discuss the construction of a Fourier transform. You have probably never heard of sets of uniqueness,

https://en.m.wikipedia.org/wiki/Set_of_uniqueness

but, this is a precise topic in mathematics where Fourier analysis and foundational studies in mathematics meet/diverge.

I have not yet discovered any non-classical mathematics which addresses Fourier analysis.

Let us hope that the third generation of physicists you mention can find something useful at this juncture. I am concerned, however, that physics (of the small) has reached a point where measurements are of no use.

Your comment is very much appreciated.


Hi mls,

Thank you very much for your kind words.

I did not understand the remark about the Fourier transform and the uniqueness of theorems.

Of course, I know that the FT is one-to-one.

But when we square it, we lose the phase and can’t get it back.

Have I misunderstood you? Did I miss something?

Sums and series and theorems on convergence I had in high school.

About the rigor of mathematics, I always think of the famous mathematician Goro Shimura, who said about his friend Yutaka Taniyama:

min 12:40

“Taniyama was not a very careful person as a mathematician. He made a lot of mistakes. But he made mistakes in a good direction.

… I tried to imitate him. But it is very difficult to make good mistakes.”

And Wiles himself (min 40:30)

.. pinpoint exactly why it wasn’t working and formulate it precisely. One can never do that in mathematics…

Stefan


Mr. Freundt,