Dark matter remains undetected in the laboratory. This has been true for forever, so I don’t know what drives the timing of the recent spate of articles encouraging us to keep the faith, that dark matter is still a better idea than anything else. This depends on how we define “better.”

There is a long-standing debate in the philosophy of science about the relative merits of accommodation and prediction. A scientific theory should have predictive power. It should also explain all the relevant data. To do the latter almost inevitably requires some flexibility in order to accommodate things that didn’t turn out exactly as predicted. What is the right mix? Do we lean more towards prediction, or accommodation? The answer to that defines “better” in this context.

One of the recent articles is titled “The dark matter hypothesis isn’t perfect, but the alternatives are worse” by Paul Sutter. This perfectly encapsulates the choice one has to make in what is unavoidably a value judgement. Is it better to accommodate, or to predict (see the Spergel Principle)? Dr. Sutter comes down on the side of accommodation. He notes a couple of failed predictions of dark matter, but mentions no specific predictions of MOND (successful or not) while concluding that dark matter is better because it explains more.

One important principle in science is objectivity. We should be even-handed in the evaluation of evidence for and against a theory. In practice, that is very difficult. As I’ve written before, it made me angry when the predictions of MOND came true in my data for low surface brightness galaxies. I wanted dark matter to be right. I felt sure that it had to be. So why did this stupid MOND theory have any of its predictions come true?

One way to check your objectivity is to look at it from both sides. If I put on a dark matter hat, then I largely agree with what Dr. Sutter says. To quote one example:

The dark matter hypothesis isn’t perfect. But then again, no scientific hypothesis is. When evaluating competing hypotheses, scientists can’t just go with their guts, or pick one that sounds cooler or seems simpler. We have to follow the evidence, wherever it leads. In almost 50 years, nobody has come up with a MOND-like theory that can explain the wealth of data we have about the universe. That doesn’t make MOND wrong, but it does make it a far weaker alternative to dark matter.

Paul Sutter

OK, so now let’s put on a MOND hat. Can I make the same statement?

The MOND hypothesis isn’t perfect. But then again, no scientific hypothesis is. When evaluating competing hypotheses, scientists can’t just go with their guts, or pick one that sounds cooler or seems simpler. We have to follow the evidence, wherever it leads. In almost 50 years, nobody has detected dark matter, nor come up with a dark matter-based theory with the predictive power of MOND. That doesn’t make dark matter wrong, but it does make it a far weaker alternative to MOND.

So, which of these statements is true? Well, both of them. How do we weigh the various lines of evidence? Is it more important to explain a large variety of the data, or to be able to predict some of it? This is one of the great challenges when comparing dark matter and MOND. They are incommensurate: the set of relevant data is not the same for both. MOND makes no pretense to provide a theory of cosmology, so it doesn’t even attempt to explain much of the data so beloved by cosmologists. Dark matter explains everything, but, broadly defined, it is not a theory so much as an inference – assuming gravitational dynamics are inviolate, we need more mass than meets the eye. It’s a classic case of comparing apples and oranges.

While dark matter is a vague concept in general, one can build specific theories of dark matter that are predictive. Simulations with generic cold dark matter particles predict cuspy dark matter halos. Galaxies are thought to reside in these halos, which dominate their dynamics. This overlaps with the predictions of MOND, which follow from the observed distribution of normal matter. So, do galaxies look like tracer particles orbiting in cuspy halos? Or do their dynamics follow from the observed distribution of light via Milgrom’s strange formula? The relevant subset of the data very clearly indicate the latter. When head-to-head comparisons like this can be made, the a priori predictions of MOND win, hands down, over and over again. [If this statement sounds wrong, try reading the relevant scientific literature. Being an expert on dark matter does not automatically make one an expert on MOND. To be qualified to comment, one should know what predictive successes MOND has had. People who say variations of “MOND only fits rotation curves” are proudly proclaiming that they lack this knowledge.]

It boils down to this: if you want to explain extragalactic phenomena, use dark matter. If you want to make a prediction – in advance! – that will come true, use MOND.

A lot of the debate comes down to claims that anything MOND can do, dark matter can do better. Or at least as well. Or, if not as well, good enough. This is why conventionalists are always harping about feedback: it is the deus ex machina they invoke in any situation where they need to explain why their prediction failed. This does nothing to explain why MOND succeeded where they failed.

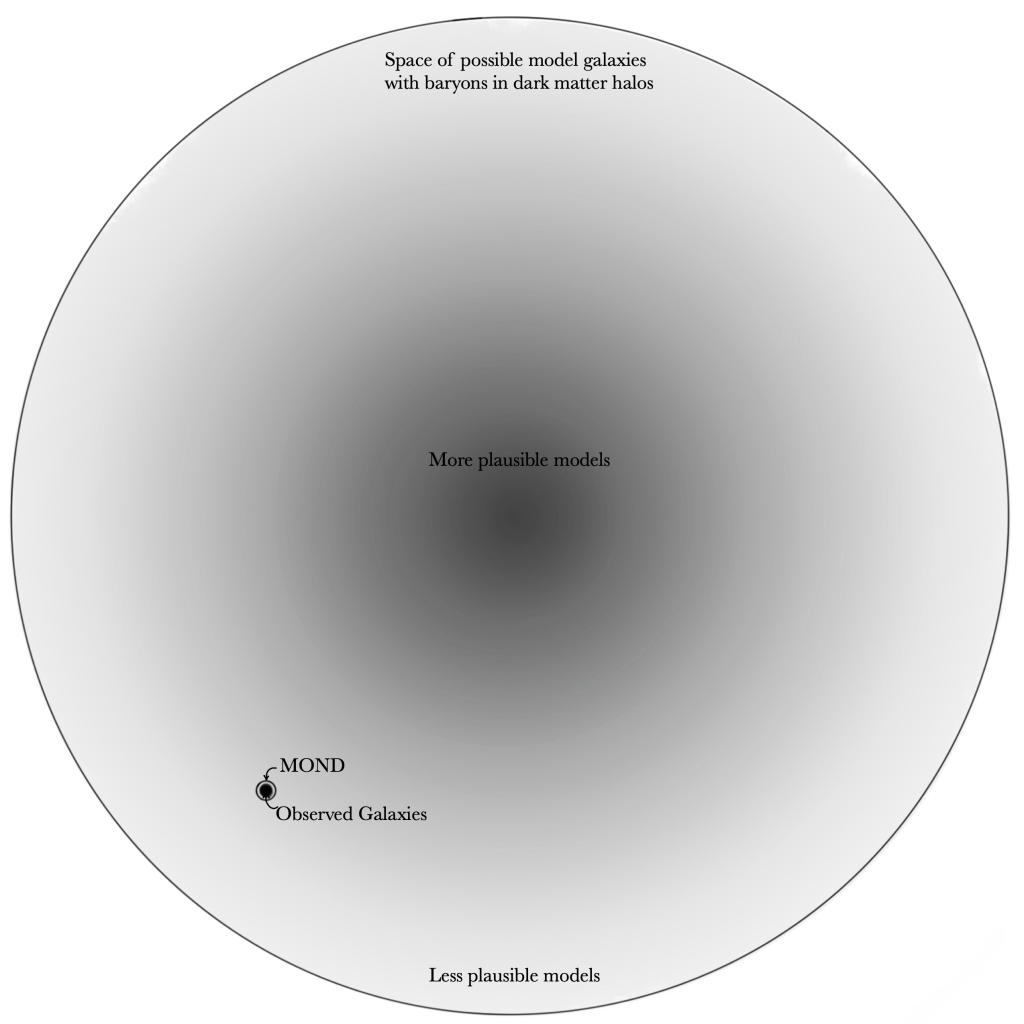

This post-hoc reasoning is profoundly unsatisfactory. Dark matter, being invisible, allows us lots of freedom to cook up an explanation for pretty much anything. My long-standing concern for the dark matter paradigm is not the failure of any particular prediction, but that, like epicycles, it has too much explanatory power. We could use it to explain pretty much anything. Rotation curves flat when they should be falling? Add some dark matter. No such need? No dark matter. Rising rotation curves? Sure, we could explain that too: add more dark matter. Only we don’t, because that situation doesn’t arise in nature. But we could if we had to. (See, e.g., Fig. 6 of de Blok & McGaugh 1998.)

There is no requirement in dark matter that rotation curves be as flat as they are. If we start from the prior knowledge that they are, then of course that’s what we get. If instead we independently try to build models of galactic disks in dark matter halos, very few of them wind up with realistic looking rotation curves. This shouldn’t be surprising: there are, in principle, an uncountably infinite number of combinations of galaxies and dark matter halos. Even if we impose some sensible restrictions (e.g., scaling the mass of one component with that of the other), we still don’t get it right. That’s one reason that we have to add feedback, which suffices according to some, and not according to others.

In contrast, the predictions of MOND are unique. The kinematics of an object follow from its observed mass distribution. The two are tied together by the hypothesized force law. There is a one-to-one relation between what you see and what you get.
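To make "what you see is what you get" concrete, here is a minimal Python sketch. It is an illustration under loud assumptions, not the procedure from any particular paper: the baryons are treated as a single point mass, the interpolation between the Newtonian and MOND regimes uses the so-called "simple" function, and Milgrom's constant is set to the commonly quoted a0 = 1.2e-10 m/s^2. All function names are hypothetical.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10       # Milgrom's acceleration constant, m s^-2 (assumed value)
M_SUN = 1.989e30   # solar mass, kg
KPC = 3.086e19     # one kiloparsec, m


def newtonian_accel(mass_kg, r_m):
    """Newtonian acceleration of a point mass (a stand-in for the baryons)."""
    return G * mass_kg / r_m**2


def mond_accel(g_newton, a0=A0):
    """MOND acceleration from the Newtonian one.

    Assumes the 'simple' interpolation function nu(y) = 1/2 + sqrt(1/4 + 1/y),
    with y = g_newton / a0. Other interpolation functions are possible.
    """
    y = g_newton / a0
    return g_newton * (0.5 + math.sqrt(0.25 + 1.0 / y))


def circular_speed(mass_kg, r_m, a0=A0):
    """Circular speed (m/s) at radius r_m implied by the visible mass alone."""
    return math.sqrt(mond_accel(newtonian_accel(mass_kg, r_m), a0) * r_m)


def asymptotic_speed(mass_kg, a0=A0):
    """Flat rotation speed in the low-acceleration limit: V^4 = G * M * a0."""
    return (G * mass_kg * a0) ** 0.25


if __name__ == "__main__":
    mass = 1e11 * M_SUN  # illustrative baryonic mass, not a fit to any galaxy
    for r_kpc in (5, 20, 50, 200):
        v_kms = circular_speed(mass, r_kpc * KPC) / 1e3
        print(f"r = {r_kpc:4d} kpc  V = {v_kms:6.1f} km/s")
    print(f"asymptotic V = {asymptotic_speed(mass) / 1e3:.1f} km/s")
```

The only input is the baryonic mass; there is no halo parameter left to adjust. For the illustrative 1e11 solar masses the curve flattens at roughly 200 km/s, and a real application would replace the point mass with the observed distribution of stars and gas.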

This was not expected in dark matter. It makes no sense that this should be so. The baryonic tail should not wag the dark matter dog.

From the perspective of building dark matter models, it’s like the proverbial needle in the haystack: the haystack is the volume of possible baryonic disk plus dark matter halo combinations; the one that “looks like” MOND is the needle. Somehow nature plucks the MOND-like needle out of the dark matter haystack every time it makes a galaxy.

Dr. Sutter says that we shouldn’t go with our gut. That’s exactly what I wanted to do, long ago, to maintain my preference for dark matter. I’d love to do that now so that I could stop having this argument with otherwise reasonable people.

Instead of going with my gut, I’m making a probabilistic statement. In Bayesian terms, the odds of observing MONDian behavior given the prior that we live in a universe made of dark matter are practically zero. In MOND, observing MONDian behavior is the only thing that can happen. That’s what we observe in galaxies, over and over again. Any information criterion shows a strong quantitative preference for MOND when dynamical evidence is considered. That does not happen when cosmological data are considered because MOND makes no prediction there. Concluding that dark matter is better overlooks the practical impossibility that MOND-like phenomenology is observed at all. Of course, once one knows this is what the data show, it seems a lot more likely, and I can see that effect in the literature over the long arc of scientific history. This is why, to me, predictive power is more important than accommodation: what we predict before we know the answer is more important than whatever we make up once the answer is known.
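As a rough sketch of that probabilistic statement, the comparison can be written as posterior odds. The symbols are illustrative assumptions rather than values taken from any analysis: D stands for the observation of MONDian behavior in N galaxies, p_i is the (small) probability that a dark matter model happens to produce that behavior in galaxy i, and the galaxies are treated as independent tests. The `\text` command assumes the amsmath package.

```latex
\[
\frac{P(\mathrm{MOND}\mid D)}{P(\mathrm{DM}\mid D)}
  = \underbrace{\frac{P(D\mid \mathrm{MOND})}{P(D\mid \mathrm{DM})}}_{\text{Bayes factor}}
    \times
    \underbrace{\frac{P(\mathrm{MOND})}{P(\mathrm{DM})}}_{\text{prior odds}},
\qquad
\frac{P(D\mid \mathrm{MOND})}{P(D\mid \mathrm{DM})}
  \approx \frac{1}{\prod_{i=1}^{N} p_i}
\]
```

If MOND can only produce MONDian behavior, the numerator of the Bayes factor is close to one, while the denominator shrinks with every additional galaxy. That is why the dynamical evidence can outweigh even a strong prior preference for dark matter.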

The successes of MOND are sometimes minimized by lumping all galaxies into a single category. That’s not correct. Every galaxy has a unique mass distribution; each one is an independent test. The data for galaxies extend over a large dynamic range, from dwarfs to giants, from low to high surface brightness, from gas to star dominated cases. Dismissing this by saying “MOND only explains rotation curves” is like dismissing Newton for only explaining planets – as if every planet, moon, comet, and asteroid aren’t independent tests of Newton’s inverse square law.

MOND does explain more than rotation curves. That was the first thing I checked. I spent several years looking at all of the data, and have reviewed the situation many times since. What I found surprising is how much MOND explains, if you let it. More disturbing was how often I came across claims in the literature that MOND was falsified by X only to try the analysis myself and find that, no, if you bother to do it right, that’s pretty much just what it predicts. Not in every case, of course – no hypothesis is perfect – but I stopped bothering after several hundred cases. Literally hundreds. I can’t keep up with every new claim, and it isn’t my job to do so. My experience has been that as the data improve, so too does their agreement with MOND.

Dr. Sutter’s article goes farther, repeating a common misconception that “the tweaking of gravity under MOND is explicitly designed to explain the motions of stars within galaxies.” This is an overstatement so strong as to be factually wrong. MOND was explicitly designed to produce flat rotation curves – as was dark matter. However, there is a lot more to it than that. Once we write down the force law, we’re stuck with it. It has lots of other unavoidable consequences that lead to genuine predictions. Milgrom explicitly laid out what these consequences would be, and basically all of them have subsequently been observed. I include a partial table in my last review; it only ends where it does because I had to stop somewhere. These were genuine, successful, a priori predictions – the gold standard in science. Some of them can be explained with dark matter, but many cannot: they make no sense, and dark matter can only accommodate them thanks to its epic flexibility.

Dr. Sutter makes a number of other interesting points. He says we shouldn’t “pick [a hypothesis] that sounds cooler or seems simpler.” I’m not sure which seems cooler here – a universe pervaded by a mysterious invisible mass that we can’t [yet] detect in the laboratory but nevertheless controls most of what goes on out there seems pretty cool to me. That there might also be some fundamental aspect of the basic theory of gravitational dynamics that we’re missing also seems like a pretty cool possibility. Those are purely value judgments.

Simplicity, however, is a scientific value known as Occam’s razor. The simpler of competing theories is to be preferred. That’s clearly MOND: we make one adjustment to the force law, and that’s it. What we lack is a widely accepted, more general theory that encapsulates both MOND and General Relativity.

In dark matter, we multiply entities unnecessarily – there is extra mass composed of unknown particles that have no place in the Standard Model of particle physics (which is quite full up) so we have to imagine physics beyond the standard model and perhaps an entire dark sector because why just one particle when 85% of the mass is dark? and there could also be dark photons to exchange forces that are only active in the dark sector as well as entire hierarchies of dark particles that maybe have their own ecosystem of dark stars, dark planets, and maybe even dark people. We, being part of the “normal” matter, are just a minority constituent of this dark universe; a negligible bit of flotsam compared to the dark sector. Doesn’t it make sense to imagine that the dark sector has as rich and diverse a set of phenomena as the “normal” sector? Sure – if you don’t mind abandoning Occam’s razor. Note that I didn’t make any of this stuff up; everything I said in that breathless run-on sentence I’ve heard said by earnest scientists enthusiastic about how cool the dark sector could be. Bugger Occam.

There is also the matter of timescales. Dr. Sutter mentions that “In almost 50 years, nobody has come up with a MOND-like theory” that does all that we need it to do. That’s true, but for the typo. Next year (2023) will mark the 40th anniversary of Milgrom’s first publications on MOND, so it hasn’t been half a century yet. But I’ve heard recurring complaints to this effect before, that finding the deeper theory is taking too long. Let’s examine that, shall we?

First, remember some history. When Newton introduced his inverse square law of universal gravity, it was promptly criticized as a form of magical thinking: How, Sir, can you have action at a distance? The conception at the time was that you had to be in physical contact with an object to exert a force on it. For the sun to exert a force on the earth, or the earth on the moon, seemed outright magical. Leibnitz famously accused Newton of introducing ‘occult’ forces. As a consequence, Newton was careful to preface his description of universal gravity as everything happening as if the force was his famous inverse square law. The “as if” is doing a lot of work here, basically saying, in modern parlance “OK, I don’t get how this is possible, I know it seems really weird, but that’s what it looks like.” I say the same about MOND: galaxies behave as if MOND is the effective force law. The question is why.

As near as I can tell from reading the history around this (and I don’t know how clear the record is), it took about 20 years for Newton to realize that there was a good geometric reason for the inverse square law. We expect our freshman physics students to see that immediately. Obviously Newton was smarter than the average freshman, so why’d it take so long? Was he, perhaps, preoccupied with the legitimate-seeming criticisms of action at a distance? It is hard to see past a fundamental stumbling block like that, and I wonder if the situation now is analogous. Perhaps we are missing something now that will seem obvious in retrospect, distracted by criticisms that will seem absurd in the future.

Many famous scientists built on the dynamics introduced by Newton. The Poisson equation isn’t named the Newton equation because Newton didn’t come up with it even though it is fundamental to Newtonian dynamics. Same for the Lagrangian. And the classical Hamiltonian. These developments came many decades after Newton himself, and required the efforts of many brilliant scientists integrated over a lot of time. By that standard, forty years seems pretty short: one doesn’t arrive at a theory of everything overnight.

What is the right measure? The integrated effort of the scientific community is more relevant than absolute time. Over the past forty years, I’ve seen a lot of push back against even considering MOND as a legitimate theory. Don’t talk about that! This isn’t exactly encouraging, so not many people have worked on it. I can count on my fingers the number of people who have made important contributions to the theoretical development of MOND. (I am not one of them. I am an observer following the evidence, wherever it leads, even against my gut feeling and to the manifest detriment of my career.) It is hard to make progress without a critical mass of people working on a problem.

Of course, people have been looking for dark matter for those same 40 years. More, really – if you want to go back to Oort and Zwicky, it has been 90 years. But for the first half century of dark matter, no one was looking hard for it – it took that long to gel as a serious problem. These things take time.

Nevertheless, for several decades now there has been an enormous amount of effort put into all aspects of the search for dark matter: experimental, observational, and theoretical. There is and has been a critical mass of people working on it for a long time. There have been thousands of talented scientists who have contributed to direct detection experiments in dozens of vast underground laboratories, who have combed through data from X-ray and gamma-ray observatories looking for the telltale signs of dark matter decay or annihilation, who have checked for the direct production of dark matter particles in the LHC; even theorists who continue to hypothesize what the heck the dark matter could be and how we might go about detecting it. This research has been well funded, with billions of dollars having been spent in the quest for dark matter. And what do we have to show for it?

Zero. Nada. Zilch. Squat. A whole lot of nothing.

This is equal to the amount of funding that goes to support research on MOND. There is no faster way to get a grant proposal rejected than to say nice things about MOND. So on the one hand, we have a small number of people working on the proverbial shoestring, while on the other, we have a huge community that has poured vast resources into the attempt to detect dark matter. If we really believe it is taking too long, perhaps we should try funding MOND as generously as we do dark matter.

Sutter is also kind of an idiot really. He literally doesn’t know the first thing about MOND. And when I say literally, I do mean literally. He has claimed that MOND is a modification of gravity on large scales such as galaxies (it isn’t; it is a modification at low gravitational accelerations). This is an error so glaring that it could have been resolved by reading the lead of the MOND article on Wikipedia. Something that would take all of twenty seconds. But instead he’d rather make twenty-minute videos where he “explains” why dark matter is good by repeating fallacies and half-truths. I’ll be charitable and assume he’s just ignorant and isn’t doing this maliciously.

It goes to show that even quite intelligent people can say and do really stupid things when they lack critical thinking skills. He should look up steel manning and try that for a while.

There is an ample amount of ignorance on the subject. That’s correctable, but no one seems to bother. There seems to be an echo chamber effect going on, where people who hate MOND say incorrect things about it that get repeated so often they must be true, because everyone says so.

I try to be charitable as well, as I don’t know the motivations of others. But there seem to be attacks on MOND every time it has a prediction come true. It feels like a coordinated distraction campaign – the sort of thing you see in politics but hope not to in science.

Great post, Dr. McGaugh, concise and well focused; the absurdity of the current situation couldn’t be more clearly laid out. Will it matter? Maybe not, that is after all, why the situation is so scientifically absurd. Nothing matters to true believers more than their beliefs.

“…assuming gravitational dynamics are inviolate…” This is really the root of the problem. The assumption, effectively, is that our gravitational models (ND/GR), derived in the context of the solar system, are Universal Laws despite the fact that they do not work without modification on galactic scales and above.

MOND does a good job demonstrating that it is the models that are the problem not physical reality which is where the DM folk place the blame for the models’ failures. MOND works well for disk galaxies in exactly the same way that ND/GR work for the solar system; none of them work well on systems larger and more complex than they were designed for. They are not Universal Laws. They apply where they apply. The idea that humans have enough knowledge of the vast Cosmos, the extent and nature of which we only dimly grasp, to gin up Universal Laws has always been just a hubristic conceit.

As far as “Milgrom’s strange formula” goes, I think its strangeness arises largely because it is couched in terms of a second order derivative, acceleration, which describes an effect that has some cause not specified by the formalism. This makes it hard to understand why MOND works. Stepping back though, it seems that all MOND really accomplishes is to transition the effective force law from the 1/r^2 geometry of the Newtonian shell that is applicable near the core to the appropriate 1/r geometry at the periphery of the flat disk. MOND works well and also makes physical sense in those terms.

One way to take out the second order derivative is to quantise gravity, so that the derivative is replaced by discrete changes in velocity. Of course, one cannot just quantise GR with a spin 2 graviton: firstly, this is known not to work, and secondly, GR is not an accurate enough theory of gravity. If we want a 1/r term in the force law, then we need in effect to take a “square root” of distance, that is a square root of space (or spacetime). Quantum mechanics has such a square root – it is called spin. This means that a graviton operates in the square root of spacetime, and is therefore a fermion, not a boson.

Hi, can u provide a link for the spin being a square root of qm? I dont follow u.

In terms of the representation theory of the Lorentz group, there are two Weyl spinor representations, usually called spin (1/2,0) and spin (0,1/2), or left-handed and right-handed spin 1/2. The tensor product of these is the spin (1/2,1/2) representation on (complex) spacetime. In the non-relativistic version, this becomes a real spacetime by taking the quaternionic tensor product instead of the complex tensor product, and the distinction between left-handed and right-handed spin disappears. I don’t know of any source in the physics literature where this is clearly described. The necessity for using quaternionic tensor products (anathema to physicists) to model real spacetime is a central theme of several of my recent papers on the arXiv.

Sorry, I think I misunderstood your question. I did not mean that spin is a square root *of* QM, but that it is a square root *of* spacetime *in* QM.

I still think we are looking at it backwards. Mass is an intermediate stage of a centripetal dynamic that goes from the barest bending of light, to the vortices at the center of galaxies.

Since synchronization of waves is centripetal, as in lasers are synchronized light waves, it is a basic wave dynamic.

Meanwhile energy radiates out, as structure coalesces in.

Energy goes to the future, as the patterns generated go to the past.

Much like consciousness goes past to future, as thoughts future to past.

Schrödinger’s cat and McGaugh’s dog

Stacy, your dog will make you famous.

A few weeks ago, at the end of June, my high school organized a graduate reunion.

I gave a talk to a 7th-grade class and an 8th-grade class (13/14-year-old kids) on the topic:

“Why is mathematics so important for good science?”

After a few words about the school, these students were learning,

mathematical logic as the basis of everything.

And of mathematical logic, only the part about how to start with something “false” and arrive at something “true.”

Then two examples:

1. deduce from 0=1 (false) to 1/4=1/4 (true)

2. Ptolemy with the earth in the center of the universe and his epicycle theory.

And then the practical application

1. the Rutherford experiment

with its two assumptions

and its almost classical explanation

2. and then the double slit experiment

with our Rutherford-experiment-expectation

and the actual outcome.

The lecture closes with a statement of the kind:

If we accept the wave-particle contradiction,

we can predict the outcome of the double-slit experiment very precisely.

You may have heard:

The “Deutsche Forschungs-Gemeinschaft” opens the chance for Sabine to become an outstanding physicist.

Maybe she interrupts her discussions with all the bad scientists and their bad thoughts

for at least 12 months. Maybe. Maybe not.

A good scientist would never accept the above contradiction

or at least would always be aware of it.

One can certainly start a quantum mechanics lecture with the words:

“Dear students,

we have a theory,

which starts with a contradiction.

But it gives excellent results.

And we don’t have anything better.

So I will teach it.

And you have to learn it.”

That’s what I expect from a truthful scientist. – Do you know one?

An extraordinary good scientist would search for a non-contradictory alternative

and find it. The last part is especially tricky.

Have fun and say hi to your dog

Stefan

“An extraordinary good scientist would search for a non-contradictory alternative

and find it. The last part is especially tricky.”

It’s especially tricky if the socio-economic conditions of academia actively discourage the search for alternatives, but that is precisely the current state of affairs.

There are of course plenty of good scientists searching for a non-contradictory alternative. But the conditions of academia generally ensure that they are kicked out long before they are in danger of actually finding one.

I think all scientific progress must go in small steps: First Kepler with the improved observations of elliptic orbits, and only then Newton’s law of gravity. Kepler made observations contrary to the mainstream who believed everything in the heavens #must# be perfect, so circles. The deus ex machina was then the unquestionability of this definition of perfect, and of that heaven is outer space. Most theorists then jumped to the perfect explanation, it explained by definition the relevant theological questions in the then politically correct way. Kepler instead found *from observations* that outer space has perfection in great mathematical relations and he even related orbit sizes to the platonic solids, in order to convince people of the truth. The right belief in godly perfection leaves space for it to be beyond our own understanding! And then the true perfection arrived after Kepler, with Newton’s laws.

Now, dark matter theorists jump to the perfection of explaining everything, be it without a faint idea of what the goal is of anything in the universe. Fine-tuning problems? Just add more randomness and extra universes. How to explain everything away, in order to believe there was no purpose anyway, so as to do away with all ‘imaginary’ responsibility. Instead, Milgrom feels that any pattern, how statistical though it may seem, hints at an underlying structure. He theorizes MOND and derives a myriad of other patterns, all either confirmed or not yet falsifiable. It’s about responsibility to not lose a faint signal the moment it appears before getting under layers of accumulating amendments. It was responsible to name MOND as the one theory explaining the patterns Stacy kept finding. Keeping this responsibility, Milgrom duly pointed this out to you, be it in a pretty stressful tone. By keeping close to the data, MOND counters CDM with unbelievably accurate predictions. And the data close by, the small step to take, is clearer and less subject to interpretation – compared with the CMB. CDM people jump constantly the radius of the universe to where they’re able to explain the CMB, while it’s just too hard to say right now with certainty what source it has.

And where circles were perfection during Kepler’s time, supersymmetry is perfection now. But I think the real perfection in this aspect is slightly different – like ellipses are different from circles. And more connected to reality, no new superpartners as they were clearly excluded. But like Kepler I believe and hope it will add much beauty.

“In almost 50 years, nobody has come up with a MOND-like theory that can explain the wealth of data we have about the universe.” Some of that is his unfamiliarity with the literature. MOND is not the only gravity based explanation of dark matter phenomena out there and MOND proponents acknowledge that Milgrom’s 1983 “toy model” version isn’t the complete truth about gravity. MOND’s prediction success means that any other gravity based explanation needs to reproduce MOND’s predictions where it works. But, lots of gravity based explanations do that, such as Moffat’s MOG and Deur’s approach to the self-interaction of gravitational fields in ordinary GR. http://dispatchesfromturtleisland.blogspot.com/p/deurs-work-on-gravity-and-related.html (which IMHO is the best way to reproduce MOND and also other phenomena where it fails, whether Deur is correct that his approach is really unmodified GR or not).

Self-interacting gravity as an explanation for the MOND phenomenology sounds very interesting. As a reasonably science-literate non-physicist, I have fantasised about something like Deur’s theory, but the other way around: at low accelerations, there is no self-interaction and we get the “unmodified” 1/r relation, but when acceleration gets stronger, self-interaction kicks in and modifies the relation so we get 1/r^2. But as I said, these are just fantasies from someone who knows very little about GR.

In my opinion, the best argument in favour of MOND has come from Erik Verlinde’s entropic gravity. He deduces MOND from the cosmological constant. The next issue: why is this constant constant in time? Where does it come from? In any naive model, the cosmological constant is related to the size of the universe via Lambda=1/R^2. But R is changing over time, and so Lambda would have to decay. This last conclusion is in contrast with data. For me, this is the great problem of the field.

Yes, entropic gravity looks like a very important idea. The question in my mind is, what is the physical mechanism? Where is the entropy located? Where is the information located? Hossenfelder thinks it lies in virtual kaons. I think it lies in neutrinos. Time will tell, I guess.

There is an article in the Washington Post today, about what the Webb is seeing and it highlights the distant mature galaxies, with the only explanation given that maybe they really are not distant, just redshifted by dust. Is anyone in the field laying any groundwork for looking outside the current model? If these observations stand up, issues of Dark Matter versus MOND, etc. will be deck chairs on the Titanic. Patches don’t solve structural issues.

https://www.washingtonpost.com/science/2022/08/26/webb-telescope-space-jupiter-galaxy/

Bob Sanders used MOND to predict, 25 years ago, that large galaxies would form by z=10. That’s what the people in the Post article are talking about, without being aware that it had been predicted. They are aware that it was not predicted in the standard dark matter structure formation paradigm, hence their concern. As I’ve written earlier, I think it will take a bit of sorting out to see what is true and how much of a problem this is or isn’t.

It seems that MOND is basically math, in that it expands the equation beyond the heliocentric frame Newton was working with, so what is it modeling?

Like Special Relativity is math, while spacetime is the presumed physical explanation. Or epicycles were geometry, while the crystalline spheres were the presumed physical explanation.

Is it a “force?” There is a tendency to describe everything in terms of particles, but no one has found gravitons, any more than they found DM.

All that can really be said of gravity is it’s a centripetal effect, no matter how effectively it’s modeled, or what cause is proposed.

Given the tendency of waves to synchronize, as one larger wave is more efficient than several smaller ones, and given that the two parameters of the gravitational effect are the initial bending of light and the black holes/vortices at the center of galaxies, what if it’s not a specific force so much as an entropic dynamic? Do things really just fall together, as a path of least resistance?

Do they realise that if dust is causing redshift in any significant amount they have to revise (at the least) their entire cosmology? Because it means all their distances and velocities are wrong – there’s a LOT of dust and gases out there, both in galaxies and between them.

And let’s not even talk about the effects of massive electric and magnetic fields we can SEE out there…

When journalists, as in the Washington Post article, write “redshifted by dust”, they are referring to photometric, not spectroscopic, redshifts. See Stacy’s last article: https://tritonstation.com/2022/08/11/by-the-wayside/ for why photometric redshifts are not reliable in the way that spectroscopic redshifts are.

But surely if dust affects the UV emissions as proposed by the dust scenario, that would mean a chunk of the spectral lines are simply missing. They cannot show as RS’d if they don’t make it out of the galaxy.

At least that is my understanding of what is being claimed.

“But dust can be throwing off the calculations. Dust can absorb blue light, and redden the object. It could be that some of these very distant, highly redshifted galaxies are just very dusty, and not actually as far away (and as “young”) as they appear. That would realign the observations with what astronomers expected.”

So they are saying dust absorbs the UV/blue light which means we see ‘redder’ galaxies and think they are further away.

I would presume any competent astronomer who saw a very red galaxy that was missing the characteristic RS’d UV signatures would at least be suss about what they are looking at and NOT be claiming a galaxy at the dawn of the universe. So if the photometrics say one thing and it seems to break the science, surely the 1st thing they would do is look at the spectroscopic before going public?

Maybe I am incorrect and they are happy just to get headlines NOW even if later on they are made to seem foolish?

Think of photometric redshifts as like a sieve. You are sieving out all the un-reddened low-redshift galaxies, leaving you with just reddened galaxies, either because of high-redshift or reddening due to dust. If the galaxy has young hot stars there usually is a sharp change in the brightness around the red-shifted wavelength corresponding to the edge of the Lyman continuum (because this means photons that can ionise atomic hydrogen). Dust should produce a more gradual change in brightness with wavelength. So they tend to be looking for a distinct change in brightness between adjacent filters. Yes, they really should get spectroscopic red-shifts for each galaxy, but that takes time and pressure to publish (at least on ArXiv) is a factor. Stacy as an active academic can explain this better.
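To make the "distinct change in brightness between adjacent filters" concrete, here is a minimal sketch of where the break lands for a given redshift. The rest-frame wavelengths are standard values; the filter edges are hypothetical round numbers chosen for illustration, not those of any real instrument.

```python
LYMAN_LIMIT_ANGSTROM = 912.0   # rest-frame edge of the Lyman continuum
LYMAN_ALPHA_ANGSTROM = 1216.0  # rest-frame Lyman-alpha line

# HYPOTHETICAL filter edges in Angstrom, for illustration only.
FILTER_EDGES = [5000.0, 8000.0, 10000.0, 13000.0, 17000.0, 23000.0]


def observed_wavelength(rest_angstrom, z):
    """Observed wavelength of a rest-frame feature at redshift z."""
    return rest_angstrom * (1.0 + z)


def filters_bracketing(wavelength):
    """Return the pair of adjacent filter edges that bracket a wavelength,
    or None if it falls outside the covered range."""
    for blue, red in zip(FILTER_EDGES, FILTER_EDGES[1:]):
        if blue <= wavelength < red:
            return (blue, red)
    return None


for z in (3.0, 7.0, 10.0):
    edge = observed_wavelength(LYMAN_LIMIT_ANGSTROM, z)
    print(f"z = {z:4.1f}: Lyman limit observed at {edge:7.0f} A, "
          f"between filter edges {filters_bracketing(edge)}")
```

The higher the redshift, the redder the filter pair in which the drop appears. A dusty low-redshift galaxy would instead dim gradually across several filters, which is the distinction the sieve relies on.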

Right… photometric redshifts are complicated, which is why I’ve been shy to get into their details. If you have enough band passes, the data are basically a really low resolution spectrum. That’s not really the problem; the problem is in the modeling. We can construct what a galaxy spectrum could look like, in detail, then model what it would look like to the available data. Statistics will give you a best match, and voila, there’s your redshift. Trouble is, the modeling depends on lots of things besides redshift: the star formation rate and history, the metallicity of the stars, and the dust in the system. One does a simultaneous fit to all these things, and in principle the right answer falls out. In practice, the assumptions we make about all these things are oversimplifications that might matter. It isn’t necessarily good enough to assume the dust is just a screen between us and the galaxy as is often assumed; in reality the dust is mixed in with the stars in the galaxy and the transfer of radiation is more complicated (and geometry dependent: is a particular galaxy edge-on?) than just an intervening screen. What the dust absorbs, it re-emits in the IR (energy conservation), so the overall shape of the spectrum is altered. Getting that right in a model well enough to determine a redshift is the trick. Once you build a model, statistics will pick out the “right” one with high apparent confidence – but it can’t tell you if your model is adequate in the first place. Spectra being complicated, there are situations in which being off a little in the modeling can result in being way off in the redshift. It isn’t usually that bad, but it can be.

I’m new to your pages and quite impressed to see a scientist who actually portrays sceptical views.

This isn’t really ‘define better’ apart from the idea that science should do better. It’s about the Higgs and something I noticed that I can’t get anyone to talk about.

Here’s my issue, Multiverse and SUSY were the 2 main hypotheses to propose a Higgs energy value and 1 said 140 GeV and the other said 115 GeV.

2 CERN experiments produced anomalies at 126 GeV – it’s hard to split the difference more exactly.

The way I learned about science is, that is a fail for both theories and they should go back and start over. Instead it was celebrated as a magnificent moment in Science with a party and TV extravaganza unprecedented since the Moon landing.

There seem to be 2 questions – apart from needing to justify the $Billions spent building CERN, why were they so eager to declare victory when the data did NOT support them and just what was it those 2 experiments actually showed?

“Here’s my issue, Multiverse and SUSY were the 2 main hypotheses to propose a Higgs energy value and 1 said 140 GeV and the other said 115 GeV.”

It’s completely understandable that you’d get this impression from the movie “Particle Fever”, but those two values are pretty arbitrary representatives of the two schools of thought (they may have corresponded to candidate Higgs observations that turned out to be false alarms, and/or the predictions in someone’s favorite model). Furthermore the choice posed just represents a kind of thinking that was popular among a few celebrity theorists, and not any kind of consensus among particle physicists. I’ll also add that *both* schools of thought involved supersymmetry. The lower value represents “simple supersymmetry without finetuning”, the higher value represents “supersymmetry in a multiverse with anthropic selection”.

Meanwhile, in reality the existence of a Higgs at around 126 GeV is very well-attested by now. They have even measured its couplings to the heavier particles (theory says these couplings should be proportional to the particles’ mass, and the measurements are in the right ballpark). According to the mainstream perspective, the most significant fact is that no other new particles (supersymmetric or otherwise) have been detected around that energy, and it *was* widely believed that such particles must be there, as part of a cancellation scheme that would keep observed particle masses low. That idea, known in the jargon as “naturalness”, is considered the foremost theoretical casualty of what the LHC actually observed.

The observed value of the Higgs mass is also theoretically interesting because in the standard model without low-energy supersymmetry, that value places the Higgs field on the threshold of a radical instability. It was actually predicted by a 2009 paper using an unfashionable paradigm for quantum gravity, asymptotic safety. So I’d say the current state of theory regarding the Higgs mass is primarily a debate about alternatives to naturalness, and the criticality of the Higgs mass *should* get much more attention than it does (it does get some attention). Meanwhile the experimenters will keep testing the standard model from every angle they can, while looking for a variety of possible new physics effects, and theory will remain a mix of robust ideas, mainstream fads, and people on the fringes, a handful of whom may have a point.

Thank you very much. That is literally the first time someone has even attempted an answer to my question. Very much appreciated. I will return in about 5 years when I have gone through the info you provided and either melted my brain into a sticky mess or learned enough to make semi-intelligent comment.

At least I feel it wasn’t a stupid question… 😀

Oh, BTW… I’ve never seen Particle Fever. I watched the reveal online and the value clashed with what I thought I knew previously so I went looking to confirm those values.

One way to think of it is that the measured Higgs mass is *too* “normal”. Bang on the Standard Model, leaving little practical room for extensions like SUSY that give different masses or split states or other exotica that would indicate physics beyond the Standard Model. Everybody wanted new physics (the fever, I presume, though I also haven’t seen Particle Fever, nor even heard of it before) but there is just nothing there where we had hoped there would be.

I watched Anton Petrov explaining some new findings re Mass and how the researchers are suggesting quarks in a proton or neutron account for less than 1% of the mass and that the rest comes from the strong force gluons.

If that is so, how does that affect the idea that Higgs provides the mass? Doesn’t that suggest the Higgs IS the gluons?

The quarks are bound into protons and neutrons by the strong nuclear force. Remember that E=mc^2: it is the binding energy provided by the gluons of the [very!] strong nuclear force that contributes most of the apparent rest mass of protons and neutrons.

The role of the Higgs seems to be frequently overstated in this context. If *I* understand it right – and this is beyond my expertise – the Higgs explains the mass hierarchy (why does a proton weigh more than an electron?) but not where mass itself comes from.
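To put rough numbers on the binding-energy point above, here is a small back-of-envelope sketch. The quark and nucleon masses are assumed, approximate textbook values in MeV (light-quark masses in particular are scheme dependent), so the result should be read as an order-of-magnitude illustration only.

```python
# ASSUMED approximate masses in MeV/c^2; light-quark values are scheme dependent.
M_UP_MEV = 2.2
M_DOWN_MEV = 4.7
M_PROTON_MEV = 938.27
M_NEUTRON_MEV = 939.57


def valence_quark_fraction(n_up, n_down, nucleon_mass_mev):
    """Fraction of a nucleon's rest mass carried by its valence quark masses."""
    quark_mass = n_up * M_UP_MEV + n_down * M_DOWN_MEV
    return quark_mass / nucleon_mass_mev


proton_fraction = valence_quark_fraction(2, 1, M_PROTON_MEV)    # uud
neutron_fraction = valence_quark_fraction(1, 2, M_NEUTRON_MEV)  # udd

print(f"proton : {100 * proton_fraction:.2f}% of rest mass from quark masses")
print(f"neutron: {100 * neutron_fraction:.2f}% of rest mass from quark masses")
```

With these inputs the quark masses account for roughly one percent of the nucleon mass; the remainder is the energy of the strong-force binding, via E=mc^2.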

So just to drop the cat amongst the pigeons… Paul LaViolette did an analysis about the state of antigravity. (Secrets of Antigravity Propulsion) Background is, as near as I can work things out, we are dealing with a truncated version of EM theory. James Clerk Maxwell did the heavy lifting in the field but died early and his work was ‘modified’ by Oliver Heaviside and Heinrich Hertz – his original work can now be downloaded from the web.

Maxwell used quaternion equations to describe his theory but after Heaviside and Hertz got into things they became 3D vector equations, we lost quite a number of the originals and 2 forces went away. It’s been a while but from memory, electrostatic and electrogravitic force.

LaViolette (PLV) went through the decades of development by Thomas Townsend Brown (TTB) and also some of the Tesla info. It seems clear there is a pretty simple way to counteract mass, or at least inertia. It involves high frequency, high voltage DC current and among other claims PLV says it’s why people aren’t allowed to touch stealth aircraft for some time after they land.

Some of the Tesla reports of what visitors saw him do as well as the demonstrations across the decades run by TTB make it clear they both had something not apparent in OUR world of EM. I don’t know how PLV is regarded in physics but then Halton Arp had some remarkable ideas and he was attacked by the orthodoxy so I’m not sure the opinion of those holding fast to a consensus matters.

So the pigeon is, does the ability to cancel the effects of mass change the way we should view the Higgs?

I doubt that Stacy would want to encourage discussion of conspiracy-theory physics here, but just a few words of explanation…

“electrogravitic force”

This probably derives from a 1903 paper by Whittaker who suggested, I think, that electromagnetism and gravitation could derive from a common medium, electromagnetism from its transverse waves, and gravitation from the longitudinal waves. This has been one of the theoretical inspirations for people who think Tesla invented suppressed super-technologies and so forth.

“Thomas Townsend Brown”

Brown is an inventor who had an experimental effect (Biefeld-Brown effect) in which charging a capacitor produces a push at one end. Brown thought this was due to an electrogravitic interaction, but it’s now understood as due to ionic currents produced in air near the electrodes.

The idea of electrogravity and electrostatic derives from Maxwell’s work not from conspiracy theories. You can find the original treatise from Maxwell online if you’d like to read it.

Brown’s work produced useful and evolving technology that physically moved his ‘saucers’ without apparent thrust generators. The problem with ‘understood as … ionic currents’ is that he separated the +ve and -ve electrodes by the width of the ‘saucers’ he used. So we’d need an explanation for how it is the ‘ionic currents’ worked in only one direction, i.e. both sides would have ionised the air, so how did it always send the ‘saucer’ in the same direction?

While the previous para quation could easily be my limited physics, Brown’s effects were tested by others, specifically in vacuum and while a static DC setup didn’t produce movement, they did get motion when the current was flowing – which is why Brown not only had high voltage DC, he had high cycle levels. So the current was always in a flow condition.

Labelling things as conspiracy when there is physical evidence that needs explanation is counter productive to science. Dismissing ideas because a book needs to be found and read isn’t much better. Conspiracy theory remains, except in rare circumstance, a pejorative meant to dissuade any further examination.

And these days it is difficult to find a decent conspiracy theory that has NOT been proven out!

The facts remain that Maxwell’s work WAS truncated, Tesla DID do things no longer able to be done and Brown DID have 30+ years of demonstrating his effects to sceptical audiences.

In previous, “While the previous para quation” should read “While the previous para question”

“They have even measured its couplings to the heavier particles (theory says these couplings should be proportional to the particles’ mass, and the measurements are in the right ballpark).”

Specifically, the couplings that have been observed that are in the right ballpark in Higgs boson decays are (with the mass of one particle of that type to the nearest 0.1 GeV or one significant digit if the nearest 0.1 GeV would be zero):

b-quark pairs, 57.7% (4.2 GeV)

W boson pairs, 21.5% (80.4 GeV)

tau-lepton pairs, 6.27% (1.8 GeV)

c-quark pairs, 2.89% (1.3 GeV)

Z boson pairs, 2.62% (91.2 GeV)

photon pairs, 0.227% (zero rest mass)

Z boson and a photon, 0.153% (see above)

muon pairs, 0.0218% (0.1 GeV)

We haven’t yet observed s-quark pairs (0.1 GeV), down quark pairs (0.005 GeV), up quark pairs (0.003 GeV), or electron-positron pairs (0.0005 GeV). All of which are extremely rare.

We also haven’t been able to distinguish definitively gluon pair decays that are about 8.57% of the total, due to large confounding backgrounds.

We have, however, also observed (not through Higgs boson decays) some sign that the Higgs boson-top quark coupling is of the right order of magnitude. The top quark has a rest mass of about 172.8 GeV.

Observation of the Higgs boson self-coupling also remains elusive.
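As a quick sanity check on the percentages listed above, the sketch below simply adds them up. The numbers are copied from this comment, not taken from an independent source, and the dictionary and variable names are just illustrative labels.

```python
import math

# Branching fractions in percent, as listed in the comment above.
LISTED_PERCENT = {
    "b-quark pairs": 57.7,
    "W boson pairs": 21.5,
    "tau-lepton pairs": 6.27,
    "c-quark pairs": 2.89,
    "Z boson pairs": 2.62,
    "photon pairs": 0.227,
    "Z boson and a photon": 0.153,
    "muon pairs": 0.0218,
}
GLUON_PAIRS_PERCENT = 8.57  # quoted separately because of large backgrounds

# math.fsum avoids accumulating floating-point rounding error in the total.
total_listed = math.fsum(LISTED_PERCENT.values())
total_with_gluons = math.fsum([total_listed, GLUON_PAIRS_PERCENT])

print(f"listed channels      : {total_listed:.4f}%")
print(f"plus gluon pairs     : {total_with_gluons:.4f}%")
print(f"left for rare decays : {100.0 - total_with_gluons:.4f}%")
```

The listed channels plus gluon pairs add up to just under 100%, leaving only a few hundredths of a percent for the unobserved light-fermion decays, consistent with the statement that those are extremely rare.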

In my professional opinion the mere discovery of the Higgs is a huge success regardless of theories not predicting the mass correctly. The Higgs was the last missing piece of the Standard Model (of particle physics). The SM makes no precise prediction of the mass so this theory could have only been ruled out by not finding the Higgs at all (in a large mass range). Hence LHC “needed” to be built to firstly find the Higgs and secondly constrain beyond SM (BSM) theories. Both goals were spectacularly successful as has been pointed out by the other comments in regards to BSM models.

I am as sceptical towards overbounding theoretical theories and sticking to disregarding valuable theories which deviate from the norm (as is the case for MOND) as the next guy on this blog. However, IMHO, we should remain fair and statements like

“There seem to be 2 questions – apart from needing to justify the $Billions spent building CERN, why were they so eager to declare victory when the data did NOT support them and just what was it those 2 experiments actually showed?”

do not help since (to me) they seem to suggest some malice or stupidity by the scientists.

In my opinion (I am only part of the high-energy physics and not the collider community) building the LHC was absolutely worth it since the Higgs was a very precise prediction and crucial part of the SM which needed to be tested.

What might irk you a bit is the enthusiastic promises of all kinds of new physics which were followed by deafening silence when nothing was found. This is indeed problematic and I would wish that the community were to address this instead of just changing the models.

Being a doctor for almost 40 years, I’ve seen in Medicine much of what’s happening with MOND. All medical guidelines are full of “expert opinion level of evidence”, so “evidence-based Medicine” is what it is, notwithstanding the fact of the significant progress that we’ve achieved in health care. I’m just an ignorant interested in Cosmology but for sure able to understand that MOND makes more sense than lambdaCDM model. I remember very well an intervention of Dr. McGaugh at one of the World Science Festivals and the caution and calm way that he talked about the possibility of an alternative to lambdaCDM model in front of a panel of experts in the latter. Thanks, Dr. McGaugh, for your excellent blog.

Thanks! I have had to do lots of interventions. I sometimes wonder if I should have been more strident at the WSF, so glad to know it came across OK. The forum seems to try to encourage arguments, trying to turn science into entertainment.

@arcillitono8

One way to visualize what Dr. Wilson speaks of would utilize the 10-element orthomodular lattice in Figure 3 on page 330 of the paper at

https://www.semanticscholar.org/paper/Varieties-of-Orthomodular-Lattices.-II-Bruns-Kalmbach/2c2996c1e93872a2be6a2d296f0efba22d9d7a73

Instead of decorating the graph extrema with ‘0’ and ‘1’ use a down arrow and an up arrow. The 3-atom Boolean subblock may have its atoms decorated with quaternion unit vectors. Their orthocomplements may be decorated with the negated vectors. The remaining two elements of the graph may be decorated with the scalars ‘1’ and ‘-1’.

A Cartesian product of this with any other order having similarly decorated extrema will yield a “model” of paired spins. Two up arrows on top. Two down arrows on bottom. And two labels with mixed arrows in a “central” position relative to order inversion across a horizontal axis of reflection.

Between the total positivity of energy and the role of quadratics, quantum formalism only distinguishes similar pairs and dissimilar pairs.

If you take the Cartesian product of this ortholattice with a 2-element order, one obtains a 20-element ortholattice by which one may “understand” the orthogonality of space and time without algebraic notions.

The 100-element Cartesian product of the 10-element ortholattice with itself is the interesting case. Its “identity diagonal” relates two copies of the 20-element ortholattice to the quaternionic decoration.

“Shut up and calculate” is neither thinking nor mathematics.

Look at Richardson’s theorem,

https://en.m.wikipedia.org/wiki/Richardson%27s_theorem

belief in real numbers is problematic

So my crazy idea/ dream goes like this.

There are so many gluons out there. (I don’t know how to count them.) If the temperature/ energy gets low enough they can couple to the lowest energy state and make a Bose-Einstein condensate. The lowest energy state of the universe is something like the radius or diameter, of the observable universe. That’s the lowest k-vector… R_universe.

“socio-economic conditions”

Yes, for standardized research (e.g. the evaluation of astronomical images) money is helpful.

And attention and advertising are helpful to get telescope time.

But money cannot buy good ideas.

The next good idea will come from outside.

The one (he or she) must be very good in mathematics.

He or she does not have to be good at differential calculus. There are hundreds of them, all treading water.

The one will have to invent new mathematics.

It will not come out of the mainstream. The mainstream only allows questions that can be answered with differential calculus.

Newton invented the differential calculus to predict the position of the planets in the solar system. Planets are very well approximated as points or point masses. Electrons are not. Therefore, differential calculus is not appropriate for objects like electrons, protons, etc.

But does anyone know a physicist who has gone that far into the problem?
