I was on vacation last week. As soon as I got back, the first thing I did was fall off my bike onto a tree stump, breaking my wrist. I’ll be okay, but I won’t be typing a lot. This post is being dictated to software; I hope I don’t have to do too much editing. I let the software generate the image above based on the prompt “dark matter properties illustrated” and I don’t think we should hold our breath for AI to help us out with this.

There were some good questions to the last post that I didn’t get to address. I went back and tried to answer some of them. Siriusactuary asked about the properties required for dark matter for galaxies vs. large scale structure. That’s a very deep question that requires a long answer with some historical perspective. Please bear with me as I attempt a quasi-coherent, off-the-cuff narrative that doesn’t invite a lot of editing, which it surely will.

I thought about this long and hard when I first encountered the problem. Which was almost thirty years ago now. So it is probably worth a short refresher.

We have been assuming all along, I think reasonably, that cosmological dark matter and galaxy dark matter are the same stuff, just different manifestations of the same problem. Perhaps they’re not, but there is a huge range of systems that show acceleration discrepancies, and it isn’t always trivial to split them into one camp or another. It seems common to talk about large and small scale problems, but I don’t think size is the right way to think about it. It’s more a difference between gravitationally bound systems that are in equilibrium and the dynamics of the expanding universe as an evolving entity that contains structures that develop within it.

The problem in bound systems is not just galaxy dynamics. It’s also clusters of galaxies. It’s also star clusters that don’t show a discrepancy. The problem extends over a dynamic range of at least a billion in baryonic mass. It involves all sorts of dynamical questions where we do sometimes need to invoke dark matter or MOND or whatever. The evidence in bound systems is inevitably that when we apply the law of gravity as we know it to the stuff we can see, the visible baryons, then the dynamical mass doesn’t add up. We need something extra to explain the data.

The simple answer early on was that there was simply more mass there, i.e., dark matter. But that much is ambiguous. It could be that we infer the need for dark matter because the equations are inadequate and need to be generalized, i.e., something like MOND. But to start, at the beginning of the dark matter paradigm, there was no particular restriction on what the dark matter needed to be or what its properties needed to be. It could be baryonic, it could be non-baryonic. It could be black holes, brown dwarfs, all manner of things.

From a cosmological perspective, it became apparent in the early 1980s that we needed something extra – not just dark, but non-baryonic. By this time it was easy to believe because people like Vera Rubin and Albert Bosma had already established that we needed more than meets the eye in galaxies. So dark matter was no longer a radical hypothesis, which it had been in 1970. The paradigm kinda snowballed – it had been around as a possibility since the 1930s, but it was only in the 1970s that it became firmly established dynamically. Even then the discrepancy was only about a factor of two, and the missing mass could have been normal, if hard-to-see, baryons like brown dwarfs. By the early 1980s it was clear we needed more like a factor of ten, and it had to be something new: the cosmological constraint was that the gravitating mass density is greater than the baryon density allowed by Big Bang nucleosynthesis (BBN). That means that there is a requirement on the nature of dark matter beyond there just being more mass.

The cosmic dark matter has to be something non-baryonic. That is to say, it has to be some new kind of beast, presumably some kind of a particle that is not already in the standard model of particle physics. This was received with eagerness by particle physicists who felt that their standard model was complete and yet unsatisfactory and there should be something deeper and more to it. This was an indication in that direction. From a cosmological perspective, the key fact was that there was something more out there than met the eye. Gravitation gave a mass density that was higher than allowed in normal matter. Not only did you need dark matter, but you needed some kind of novel, new particle that’s not in the standard model of particle physics to be that dark matter.

The other cosmological imperative was to grow large scale structure. The initial condition that we see in the early universe is very smooth. That is the microwave background on the sky, with its very small temperature fluctuations, only one part in a hundred thousand. That sets the growth factor that has to be reached by redshift zero: structure has grown by a factor of a hundred thousand. Normal gravity will grow structure at a rate that is proportional to the rate at which the universe expands, which is basically a factor of a thousand since the microwave background was imprinted.

So we have another big discrepancy. We can only grow structure by a factor of a thousand, but we observe that it has grown by a factor of a hundred thousand. So we need something to goose the process. That something can be dark matter, provided that it does not interact with photons directly. It can be a form of particle that does not interact via the electromagnetic force. It can interact through gravity and perhaps through the weak nuclear force, but not through the electromagnetic force.
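The arithmetic behind this discrepancy can be sketched in a few lines. This is only a back-of-the-envelope illustration, assuming (as the text does) that the fluctuation amplitude can be treated as a density contrast that grows in proportion to the expansion factor. The variable names are made up for the example, and taking "order unity" as the contrast needed for collapsed structure is a common rule of thumb rather than a number from this post.

```python
# Back-of-the-envelope version of the structure growth argument.
delta_initial = 1e-5        # initial fluctuations: one part in a hundred thousand
growth_normal_gravity = 1e3 # growth tracks the expansion since the CMB: ~a thousand


def amplitude_today(delta_start, growth_factor):
    """Linear growth: the contrast is simply multiplied by the growth factor."""
    return delta_start * growth_factor


# What baryons alone can reach by redshift zero.
delta_today = amplitude_today(delta_initial, growth_normal_gravity)

# Growth needed to reach order-unity contrast (assumed threshold for real structure).
required_growth = 1.0 / delta_initial

# How far short normal gravity acting on baryons falls.
shortfall = required_growth / growth_normal_gravity

print(f"contrast reached today: {delta_today:.0e}")
print(f"required growth: {required_growth:.0e}, shortfall: x{shortfall:.0f}")
```

With these round numbers the baryons fall short by about two orders of magnitude, which is the gap that something not coupled to photons is invoked to fill.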

Those are properties that are required of dark matter by cosmology. It has to be non-baryonic and not interact through electromagnetism. These properties are not necessary for galaxies. And that’s basically the picture that persists today. One additional constraint that we need from a cosmological perspective is that the dark matter needs to be slow-moving – dynamically cold so that structure can form. If you make it dynamically hot, like neutrinos that are born moving at very nearly the speed of light, those are not going to clump up and form structure even if they have a little mass.

So that was the origin of the cold dark matter paradigm. We needed some form of completely novel particle that had the right relic density – this is where the WIMP miracle comes in. That worked fine for galaxies at the time. All you needed for galaxies early on was extra mass. It was cosmology that gave us these extra indications of what the dark matter needs to be.

We’ve learned a lot more about galaxies since then. I remember in the early nineties when I was still a staunch proponent of cold dark matter being approached at conferences by eminent dynamicists who confided in hushed tones so that the cosmologists wouldn’t hear that they thought the dark matter had to be baryonic, not non-baryonic.

I had come to this from the cosmological perspective that I had just described above. The total mass density had to be a lot bigger than the baryonic mass density. Therefore the dark matter had to be non-baryonic. To say otherwise was crazy talk, which is why they were speaking about it in hushed tones. But here were these very eminent people who were very quietly suggesting to me that their work on galaxies suggested that the dark matter had to be made of baryons, not something non-baryonic. I asked why, and basically it boiled down to the fact that they could see clear connections between the dynamics and the baryons. It didn’t suffice just to have extra mass; the dark and luminous components seemed to know about each other*.

The data for galaxies showed that the stuff we could see, the distribution of stars and gas, was clearly and intimately related to the total distribution of mass, including the dark matter. This led to a number of ideas that do not sit well with the cold dark matter paradigm. One was HI scaling: basically, if you took the distribution of atomic gas, and scaled it up by a factor of roughly 10, then that was a decent predictor of what the dark matter was doing. Given that, one could imagine that maybe the dark matter was some form of unseen baryons that follow the same distribution as the atomic gas. There was even an elaborate paradigm built up around very cold molecular gas to do this. That seemed problematic for me, because if you have cold molecular gas, it should clump up and form stars, and then you see it. Even if you didn’t see it in its cold form, you need a lot of it. Interestingly, you do not violate the BBN baryon density, just in galaxies. But you would on a cosmic scale, if that was the only form of dark matter. So then we need multiple forms of dark matter, which violates parsimony.

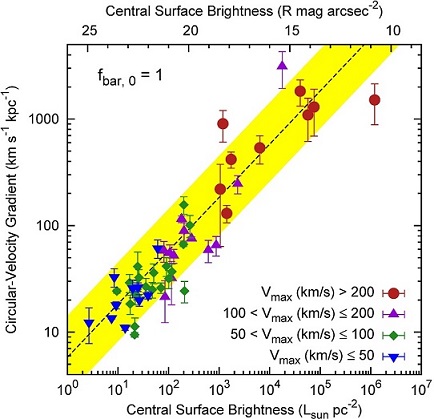

Another important and frequent point is the concept of maximum disk. This came up last time in the case of NGC 1277, where the inner regions of that galaxy have their dynamics completely explained by the stars that you see. This is a very common occurrence in high surface brightness galaxies. In regions where the stars are dense, that’s all the mass that you need. It’s only when you get out to a much larger radius, where the accelerations become low, that you need something extra, the dark matter effect.

It was pretty clear and widely accepted that the inner regions of many bright galaxies were star dominated. You did not need much dark matter in the center, only at the edges. So you had this picture of a pseudoisothermal halo with a low density central core. But by the mid-nineties, a lot of simulations all showed that cold dark matter halos should have cusps: they predicted there to be a lot of dark matter near the centers of galaxies.
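For concreteness, the two halo shapes being contrasted can be written down explicitly. The sketch below uses the standard pseudoisothermal and NFW density profiles. The function names, parameter names, and the unit scale values are placeholders chosen for illustration, not fits to any galaxy.

```python
def pseudo_isothermal_density(r, rho0, r_core):
    """Cored halo: the density flattens to rho0 inside the core radius."""
    return rho0 / (1.0 + (r / r_core) ** 2)


def nfw_density(r, rho_s, r_s):
    """Cuspy NFW halo: the density rises as 1/r toward the center (needs r > 0)."""
    x = r / r_s
    return rho_s / (x * (1.0 + x) ** 2)


# How much does each profile rise going from 10% to 1% of its scale radius?
# All scales are set to 1.0 purely for illustration.
core_rise = pseudo_isothermal_density(0.01, 1.0, 1.0) / pseudo_isothermal_density(0.1, 1.0, 1.0)
cusp_rise = nfw_density(0.01, 1.0, 1.0) / nfw_density(0.1, 1.0, 1.0)

print(f"cored halo rises by a factor of {core_rise:.3f}")
print(f"cuspy halo rises by a factor of {cusp_rise:.1f}")
```

Over that decade in radius the cored profile is essentially flat while the cusp climbs by more than a factor of ten, which is why the simulated halos put so much more dark matter in the central regions.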

This contradicted the picture that had been established. And so people got into big arguments as to whether or not high-surface brightness galaxies were indeed maximal. The people who actually worked on galaxies said Yes, we have established that they are maximal – we only need stars in the central regions; the dark matter only becomes necessary farther out. People who were coming at it from the cosmological perspective without having worked on individual galaxies saw the results of the simulations, saw that there’s always a little room to trade off between the stellar mass and the dark mass by adjusting the mass to light ratio of the stars, and said galaxies cannot be maximal.
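The "room to trade off" in this argument comes from the way rotation curves are decomposed: the squared circular speeds of the components add, and the stellar term scales linearly with the assumed mass-to-light ratio. Here is a minimal sketch of that bookkeeping at a single radius, ignoring the gas for simplicity. The function name and the velocities are hypothetical, chosen only to show the tradeoff.

```python
import math


def halo_velocity(v_obs, v_disk_unit, mass_to_light):
    """Halo circular speed needed at one radius, in the same units as the inputs.

    v_obs         observed rotation speed
    v_disk_unit   stellar disk contribution for a mass-to-light ratio of 1
    mass_to_light assumed stellar mass-to-light ratio (scales the disk's V^2)
    """
    v_halo_sq = v_obs**2 - mass_to_light * v_disk_unit**2
    if v_halo_sq < 0:
        raise ValueError("disk alone exceeds the observed rotation speed")
    return math.sqrt(v_halo_sq)


# Hypothetical inner-galaxy numbers in km/s, for illustration only.
near_maximal = halo_velocity(v_obs=200.0, v_disk_unit=140.0, mass_to_light=2.0)
sub_maximal = halo_velocity(v_obs=200.0, v_disk_unit=140.0, mass_to_light=1.0)

print(f"halo needed with a heavy disk: {near_maximal:.1f} km/s")
print(f"halo needed with a light disk: {sub_maximal:.1f} km/s")
```

Halving the mass-to-light ratio in this toy case turns a nearly negligible halo into one comparable to the disk, so the same data can be read as maximal or sub-maximal depending on what one assumes about the stars.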

I was perplexed by this contradiction. You had a strong line of evidence that galaxies were maximal in their centers. You had a completely different line of evidence, a top down cosmological view of galaxies that said galaxies should not and could not be maximal in their centers. Which of those interpretations you believed seemed to depend on which camp you came out of.

I came out of both camps. I was working on low surface brightness galaxies at the time and was hopeful that they would help to resolve the issue. Instead they made it worse, sticking us with a fine-tuning problem. I could not solve this fine-tuning problem. It caused me many headaches. It was only after I had suffered those headaches that I began to worry about the dark matter paradigm. And then by chance, I heard a talk by this guy Milgrom who, in a few lines on the board, derived as a prediction all of the things that I was finding problematic to interpret in terms of dark matter. Basically, a model with dark matter has to look like MOND to satisfy the data.

That’s just silly, isn’t it?

MOND made predictions. Those predictions came true. What am I supposed to report? That it had these predictions come true – therefore it’s wrong?

I had made my own prediction based on dark matter. It failed. Other people had different predictions based on dark matter. Those also did not come true. Milgrom was the only one to correctly predict ahead of time what low surface brightness galaxies would do.

If we insist on dark matter, what this means is that we need, for each and every galaxy, the precise distribution of dark matter that looks like MOND. I wrote the equation for the required effects of dark matter in all generality in McGaugh (2004). The improvements in the data over the subsequent decade enable this to be abbreviated to

$$g_{\mathrm{DM}} = \frac{g_{\mathrm{bar}}}{e^{\sqrt{g_{\mathrm{bar}}/a_0}} - 1}.$$

This is in McGaugh et al. (2016), which is a well known paper (being in the top percentile of citation rates). So this should be well known, but the implication seems not to be, so let’s talk it through. gDM is the force per unit mass provided by the dark matter halo of a galaxy. This is related to the mass distribution of the dark matter – its radial density profile – through the Poisson equation. The dark matter distribution is entirely stipulated by the mass distribution of the baryons, represented here by gbar. That’s the only variable on the right hand side, a0 being Milgrom’s acceleration constant. So the distribution of what you see specifies the distribution of what you can’t.
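To see what this relation demands in practice, here is a minimal sketch that evaluates it numerically. The function names are invented for the example, and the value of a0 (about 1.2e-10 m/s², the figure usually quoted from the 2016 fit) is an assumption added here, since the post itself does not state a number.

```python
import math

A0 = 1.2e-10  # m/s^2; assumed value of Milgrom's acceleration constant


def g_dm(g_bar, a0=A0):
    """Acceleration the dark halo must supply, given the baryonic one (g_bar > 0).

    Algebraically g_bar / (exp(x) - 1) with x = sqrt(g_bar / a0), rewritten with
    exp(-x) so that it does not overflow at very high accelerations.
    """
    x = math.sqrt(g_bar / a0)
    return g_bar * math.exp(-x) / (-math.expm1(-x))


def g_obs(g_bar, a0=A0):
    """Total acceleration: baryons plus the required dark matter contribution."""
    return g_bar + g_dm(g_bar, a0)


# Sample the relation from the low to the high acceleration regime.
for g_bar in (1e-13, 1e-11, 1e-10, 1e-9, 1e-8):
    ratio = g_dm(g_bar) / g_bar
    print(f"g_bar = {g_bar:.0e} m/s^2 -> g_DM / g_bar = {ratio:.3g}")
```

Two limits are worth noting. At accelerations well above a0 the required dark matter contribution is exponentially suppressed, which is the star-dominated, maximum disk regime. At accelerations well below a0 it approaches the square root of g_bar times a0, which is the MOND behavior. In both regimes the only input is the baryonic acceleration.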

This is not what we expect for dark matter. It’s not what naturally happens in any reasonable model, which is an NFW halo. That comes from dark matter-only simulations; it has literally nothing to do with gbar. So there is a big chasm to bridge right from the start: theory and observation are speaking different languages. Many dark matter models don’t specify gbar, let alone satisfy this constraint. Those that do only do so crudely – the baryons are hard to model. Still, dark matter is flexible; we have the freedom to make it work out to whatever distribution we need. But in the end, the best a dark matter model can hope to do is crudely mimic what MOND predicted in advance. If it doesn’t do that, it can be excluded. Even if it does do that, should we be impressed by the theory that only survives by mimicking its competitor?

The observed MONDian behavior makes no sense whatsoever in terms of the cosmological constraints in which the dark matter has to be non-baryonic and not interact directly with the baryons. The equation above implies that any dark matter must interact very closely with the baryons – a fact that is very much in the spirit of what earlier dynamicists had found, that the baryons and the dynamics are intimately connected. If you know the distribution of the baryons that you can see, you can predict what the distribution of the unseen stuff has to be.

And so that’s the property that galaxies require that is pretty much orthogonal to the cosmic requirements. There needs to be something about the nature of dark matter that always gives you MONDian behavior in galaxies. Being cold and non-interacting doesn’t do that. Instead, galaxy phenomenology suggests that there is a direct connection – some sort of direct interaction – between dark matter and baryons. That direct interaction is anathema to most ideas about dark matter, because if there’s a direct interaction between dark matter and baryons, it should be really easy to detect dark matter. They’re out there interacting all the time.

There have been a lot of half solutions. These include things like warm dark matter and self interacting dark matter and fuzzy dark matter. These are ideas that have been motivated by galaxy properties. But to my mind, they are the wrong properties. They are trying to create a central density core in the dark matter halo. That is at best a partial solution that ignores the detailed distribution that is written above. The inference of a core instead of a cusp in the dark matter profile is just a symptom. The underlying disease is that the data look like MOND.

MONDian phenomenology is a much higher standard to try to get a dark matter model to match than is a simple cored halo profile. We should be honest with ourselves that mimicking MOND is what we’re trying to achieve. Most workers do not acknowledge that, or even seem to be aware that this is the underlying issue.

There are some ideas to try to build in the required MONDian behavior while also satisfying the desires of cosmology. One is Blanchet’s dipolar dark matter. He imagined a polarizable dark medium that does react to the distribution of baryons so as to give the distribution of dark matter that gives MOND-like dynamics. Similarly, Khoury’s idea of superfluid dark matter does something related. It has a superfluid core in which you get MOND-like behavior. At larger scales it transitions to a non-superfluid mode, where it is just particle dark matter that reproduces the required behavior on cosmic scales.

I don’t find any of these models completely satisfactory. It’s clearly a hard thing to do. You’re trying to mash up two very different sets of requirements. With these exceptions, the galaxy-motivated requirement that there is some physical aspect of dark matter that somehow knows about the distribution of baryons and organizes itself appropriately is not being used to inform the construction of dark matter models. The people who do that work seem to be very knowledgeable about cosmological constraints, but their knowledge of galaxy dynamics seems to begin and end with the statement that rotation curves are flat and therefore we need dark matter. That sufficed 40 years ago, but we’ve learned a lot since then. It’s not good enough just to have extra mass. That doesn’t cut it.

So in summary, we have two very different requirements on the dark matter. From a cosmological perspective, we need it to be dynamically cold: something non-baryonic that does not interact with photons or easily with baryons.

From a galactic perspective, we need something that knows intimately about what the baryons are doing. And when one does one thing, the other does a corresponding thing that always adds up to looking like MOND. If it doesn’t add up to looking like MOND, then it’s wrong.

So that’s where we’re at right now. These two requirements are both imperative – and contradictory.

* There is a knee-jerk response to say “mass tells light where to go” that sounds wise but is actually stupid. This is a form of misdirection that gives the illusion of deep thought without the bother of actually engaging in it.