The results from the high redshift universe keep pouring in from JWST. It is a full time job, and then some, just to keep track. One intriguing aspect is the luminosity density of the universe at z > 10. I had not thought this to be problematic for LCDM, as it only depends on the overall number density of stars, not whether they’re in big or small galaxies. I checked this a couple of years ago, and it was fine. At that point we were limited to z < 10, so what about higher redshift?

It helps to have in mind the contrasting predictions of distinct hypotheses, so a quick reminder. LCDM predicts a gradual build up of the dark matter halo mass function that should presumably be tracked by the galaxies within these halos. MOND predicts that galaxies of a wide range of masses form abruptly, including the biggest ones. The big distinction I’ve focused on is the formation epoch of the most massive galaxies. These take a long time to build up in LCDM: it typically takes half a Hubble time (~7 billion years; z < 1) for a giant elliptical to build up half its final stellar mass. Baryonic mass assembly is considerably more rapid in MOND, so this benchmark can be attained much earlier, even within the first billion years after the Big Bang (z > 5).

In both theories, astrophysics plays a role. How does gas condense into galaxies, and then form into stars? Gravity just tells us when we can assemble the mass, not how it becomes luminous. So the critical question is whether the high redshift galaxies JWST sees are indeed massive. They’re much brighter than had been predicted by LCDM, and in line with the simplest evolutionary models one can build in MOND, so the latter is the more natural interpretation. However, it is much harder to predict how many galaxies form in MOND; it is straightforward to show that they should form fast but much harder to figure out how many do so – i.e., how many baryons get incorporated into collapsed objects, and how many get left behind, stranded in the intergalactic medium? Consequently, the luminosity density – the total number of stars, regardless of what size galaxies they’re in – did not seem like a straight-up test the way the masses of individual galaxies are.

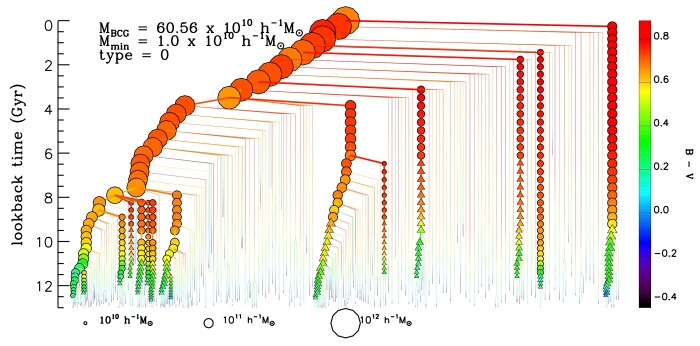

It is not difficult to produce lots of stars at high redshift in LCDM. But those stars should be in many protogalactic fragments, not individually massive galaxies. As a reminder, here is the merger tree for a galaxy that becomes a bright elliptical at low redshift:

At large lookback times, i.e., high redshift, galaxies are small protogalactic fragments that have not yet assembled into a large island universe. This happens much faster in MOND, so we expect that for many (not necessarily all) galaxies, this process is basically complete after a mere billion years or so, often less. In both theories, your mileage will vary: each galaxy will have its own unique formation history. Nevertheless, that’s the basic difference: big galaxies form quickly in MOND while they should still be little chunks at high z in LCDM.

The hierarchical formation of structure is a fundamental prediction of LCDM, so this is in principle a place it can break. That is why many people are following the usual script of blaming astrophysics, i.e., how stars form, not how mass assembles. The latter is fundamental while the former is fungible.

Gradual mass assembly is so fundamental that its failure would break LCDM. Indeed, it is so deeply embedded in the mental framework of people working on it that it doesn’t seem to occur to most of them to consider the possibility that it could work any other way. It simply has to work that way; we were taught so in grad school!

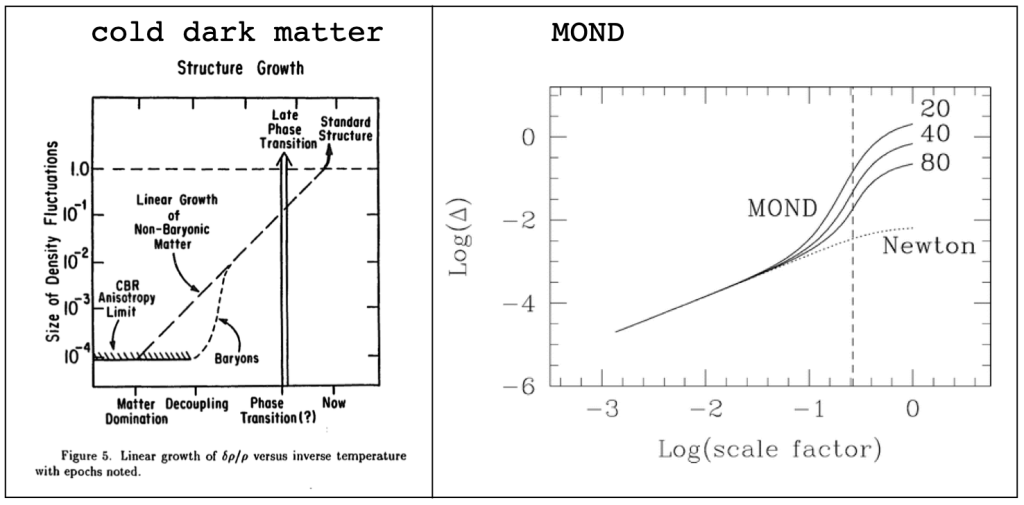

A principal result in perturbation theory applied to density fluctuations in an expanding universe governed by General Relativity is that the growth rate of these proto-objects is proportional to the expansion rate of the universe – hence the linear long-dashed line in the left diagram. The baryons cannot match the observations by themselves because the universe has “only” expanded by a factor of a thousand since recombination while structure has grown by a factor of a hundred thousand. This was one of the primary motivations for inventing cold dark matter in the first place: it can grow at the theory-specified rate without obliterating the observed isotropy% of the microwave background. The skeletal structure of the cosmic web grows in cold dark matter first; the baryons fall in afterwards (short-dashed line in left panel).
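The mismatch can be written out as a rough back-of-the-envelope sketch. It uses only the two factors quoted above; the symbols ($\delta$ for the density contrast, $a$ for the scale factor, the subscript "rec" for recombination and "0" for today) are my notation for illustration, not something taken from the figure.

```latex
% Linear growth in a matter-dominated universe: the density contrast tracks the scale factor.
\delta(t) \propto a(t)
\quad\Longrightarrow\quad
\left.\frac{\delta_0}{\delta_{\rm rec}}\right|_{\rm linear,\ baryons\ only}
\approx \frac{a_0}{a_{\rm rec}} \sim 10^{3}

% Growth actually required to reach the structure we observe today:
\left.\frac{\delta_0}{\delta_{\rm rec}}\right|_{\rm required} \sim 10^{5}

% Shortfall that baryons alone cannot make up under normal gravity:
\frac{10^{5}}{10^{3}} = 10^{2}
```

The factor of a hundred is what cold dark matter is invoked to supply: it can start growing before recombination without leaving a mark on the photons.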

That’s how it works. Without dark matter, structure cannot form, so we needn’t consider MOND nor speak of it ever again forever and ever, amen.

Except, of course, that isn’t necessarily how structure formation works in MOND. Like every other inference of dark matter, the slow growth of perturbations assumes that gravity is normal. If we consider a different force law, then we have to revisit this basic result. Exactly how structure formation works in MOND is not a settled subject, but the panel at right illustrates how I think it might work. One seemingly unavoidable aspect is that MOND is nonlinear, so the growth rate becomes nonlinear at some point, which is rather early on if Milgrom’s constant a0 does not evolve. Rather than needing dark matter to achieve a growth factor of 10^5, the boost to the force law enables baryons to do it on their own. That, in a nutshell, is why MOND predicts the early formation of big galaxies.

The same nonlinearity that makes structure grow fast in MOND also makes it very hard to predict the mass function. My nominal expectation is that the present-day galaxy baryonic mass function is established early and galaxies mostly evolve as closed boxes after that. Not exclusively; mergers still occasionally happen, as might continued gas accretion. In addition to the big galaxies that form their stars rapidly and eventually become giant elliptical galaxies, there will also be a population for which gas accretion is gradual^ enough to settle into a preferred plane and evolve into a spiral galaxy. But that is all gas physics and hand waving; for the mass function I simply don’t know how to extract a prediction from a nonlinear version of the Press-Schechter formalism. Somebody smarter than me should try that.

We do know how to do it for LCDM, at least for the dark matter halos, so there is a testable prediction there. The observable test depends on the messy astrophysics of forming stars and the shape of the mass function. The total luminosity density integrates over the shape, so is a rather forgiving test, as it doesn’t distinguish between stars in lots of tiny galaxies or the same number in a few big ones. Consequently, I hadn’t put much stock in it. But it is also a more robustly measured quantity, so perhaps it is more interesting than I gave it credit for, at least once we get to such high redshift that there should be hardly any stars.

Here is a plot of the ultraviolet (UV) luminosity density from Adams et al. (2023):

The lower line is one+ a priori prediction of LCDM. I checked this back when JWST was launched, and saw no issues up to z=10, which remains true. However, the data now available at higher redshift are systematically higher than the prediction. The reason for this is simple, and the same as we’ve discussed before: dark matter halos are just beginning to get big; they don’t have enough baryons in them to make that many stars – at least not for the usual assumptions, or even just from extrapolating what we know quasi-empirically. (I say “quasi” because the extrapolation requires a theory-dependent rate of mass growth.)

The dashed line is what I consider to be a reasonable adjustment of the a priori prediction. Putting on an LCDM hat, it is actually closer to what I would have predicted myself because it has a constant star formation efficiency which is one of the knobs I prefer to fix empirically and then not touch. With that, everything is good up to z=10.5, maybe even to z=12 if we only believe* the data with uncertainties. But the bulk of the high redshift data sit well above the plausible expectation of LCDM, so grasping at the dangling ends of the biggest error bars seems unlikely to save us from a fall.

Ignoring the model lines, the data flatten out at z > 10, which is another way of saying that the UV luminosity function isn’t evolving when it should be. This redshift range does not correspond to much cosmic time, only a few hundred million years, so it makes the empiricist in me uncomfortable to invoke astrophysical causes. We have to imagine that the physical conditions change rapidly in the first sliver of cosmic time at just the right fine-tuned rate to make it look like there is no evolution at all, then settle down into a star formation efficiency that remains constant in perpetuity thereafter.
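To put a number on "not much cosmic time," here is a minimal sketch that computes the age of the universe at a given redshift using the closed-form solution for a flat universe containing only matter and a cosmological constant. The parameter values (H0 = 70 km/s/Mpc, Ωm = 0.3) and the function name `age_gyr` are illustrative assumptions of mine, not values taken from Adams et al., and radiation is neglected.

```python
import math

KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in one billion (Julian) years


def age_gyr(z, h0=70.0, omega_m=0.3):
    """Age of a flat matter + Lambda universe at redshift z, in Gyr.

    h0 (km/s/Mpc) and omega_m are assumed, illustrative values.
    Radiation is ignored, which is a fine approximation at z ~ 10-15.
    """
    omega_l = 1.0 - omega_m                               # flatness
    hubble_time_gyr = KM_PER_MPC / h0 / SECONDS_PER_GYR   # 1/H0 in Gyr
    x = math.sqrt(omega_l / omega_m) * (1.0 + z) ** -1.5
    return 2.0 / (3.0 * math.sqrt(omega_l)) * hubble_time_gyr * math.asinh(x)


if __name__ == "__main__":
    for z_hi, z_lo in [(15, 10), (13, 10)]:
        dt_myr = (age_gyr(z_lo) - age_gyr(z_hi)) * 1000.0
        print(f"z = {z_hi} -> {z_lo}: about {dt_myr:.0f} Myr elapse")
```

With these assumed parameters the interval between z = 15 and z = 10 comes out at roughly a couple hundred million years, out of a present-day age of about 13.5 billion years. Any astrophysical fine-tuning has to play out within that sliver.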

Harikane et al. (2023) also come to the conclusion that there is too much star formation going on at high redshift (their Fig. 18 is like that of Adams above, but extending all the way to z=0). Like many, they appear to be unaware that the early onset of structure formation had been predicted, so discuss three conventional astrophysical solutions as if these were the only possibilities. Translating from their section 6, the astrophysical options are:

- Star formation was more efficient early on

- Active Galactic Nuclei (AGN)

- A top heavy IMF

This is a pretty broad view of the things that are being considered currently, though I’m sure people will add to this list as time goes forward and entropy increases.

Taking these in reverse order, the idea of a top heavy IMF is that preferentially more massive stars form early on. These produce more light per unit mass, so one gets brighter galaxies than predicted with a normal IMF. This is an idea that recurs every so often; see, e.g., section 3.1.1 of McGaugh (2004) where I discuss it in the related context of trying to get LCDM models to reionize the universe early enough. Supermassive Population III stars were all the rage back then. Changing the mass spectrum& with which stars form is one of those uber-free parameters that good modelers refrain from twiddling because it gives too much freedom. It is not a single knob so much as a Pandora’s box full of knobs that invoke a thousand Salpeter’s demons to do nearly anything at the price of understanding nothing.

As it happens, the option of a grossly variable IMF is already disfavored by the existence of quenched galaxies at z~3 that formed a normal stellar population at much higher redshift (z~11). These galaxies are composed of stars that have the spectral signatures appropriate for a population that formed with a normal IMF and evolved as stars do. This is exactly what we expect for galaxies that form early and evolve passively. Adjusting the IMF to explain the obvious makes a mockery of Occam’s razor.

AGN is a catchall term for objects like quasars that are powered by supermassive black holes at the centers of galaxies. This is a light source that is non-stellar, so we’ll overestimate the stellar mass if we mistake some light from AGN# as being from stars. In addition, we know that AGN were more prolific in the early universe. That in itself is also a problem: just as forming galaxies early is hard, so too is it hard to form enough supermassive black holes that early. So this just becomes the same problem in a different guise. Besides, the resolution of JWST is good enough to see where the light is coming from, and it ain’t all from unresolved AGN. Harikane et al. estimate that the AGN contribution is only ~10%.

That leaves the star formation efficiency, which is certainly another knob to twiddle. On the one hand, this is a reasonable thing to do, since we don’t really know what the star formation efficiency in the early universe was. On the other, we expected the opposite: star formation should, if anything, be less efficient at high redshift when the metallicity was low so there were few ways for gas to cool, which is widely considered to be a prerequisite for initiating star formation. Indeed, inefficient cooling was an argument in favor of a top-heavy IMF (perhaps stars need to be more massive to overcome higher temperatures in the gas from which they form), so these two possibilities contradict one another: we can have one but not both.

To me, the star formation efficiency is the most obvious knob to twiddle, but it has to be rather fine-tuned. There isn’t much cosmic time over which the variation must occur, and yet it has to change rapidly and in such a way as to precisely balance the non-evolving UV luminosity function against a rapidly evolving dark matter halo mass function. Once again, we’re in the position of having to invoke astrophysics that we don’t understand to make up for a manifest deficit in the behavior of dark matter. Funny how those messy baryons always cover up for that clean, pure, simple dark matter.

I could go on about these possibilities at great length (and did in the 2004 paper cited above). I decline to do so any further: we keep digging this hole just to fill it again. These ideas only seem reasonable as knobs to turn if one doesn’t see any other way out, which is what happens if one has absolute faith in structure formation theory and is blissfully unaware of the predictions of MOND. So I can already see the community tromping down the familiar path of persuading ourselves that the unreasonable is reasonable, that what was not predicted is what we should have expected all along, that everything is fine with cosmology when it is anything but. We’ve done it so many times before.

Initially I had the cat stuffed back in the bag image here, but that was really for a theoretical paper that I didn’t quite make it to in this post. You’ll see it again soon. The observations discussed here are by observers doing their best in the context they know, so it doesn’t seem appropriate to that.

%We were convinced of the need for non-baryonic dark matter before any fluctuations in the microwave background were detected; their absence at the level of one part in a thousand sufficed.

^The assembly of baryonic mass can and in most cases should be rapid. It is the settling of gas into a rotationally supported structure that takes time – this is influenced by gas physics, not just gravity. Regardless of gravity theory, gas needs to settle gently into a rotating disk in order for spiral galaxies to exist.

+There are other predictions that differ in detail, but this is a reasonable representative of the basic expectation.

*This is not necessarily unreasonable, as there is some proclivity to underestimate the uncertainties. That’s a general statement about the field; I have made no attempt to assess how reasonable these particular error bars are.

&Top-heavy refers to there being more than the usual complement of bright but short-lived (tens of millions of years) stars. These stars are individually high mass (bigger than the sun), while long-lived stars are low mass. Though individually low in mass, these faint stars are very numerous. When one integrates over the population, one finds that most of the total stellar mass resides in the faint, low mass stars while much of the light is produced by the high mass stars. So a top heavy IMF explains high redshift galaxies by making them out of the brightest stars that require little mass to build. However, these stars will explode and go away on a short time scale, leaving little behind. If we don’t outright truncate the mass function (so many knobs here!), there could be some longer-lived stars leftover, but they must be few enough for the whole galaxy to fade to invisibility or we haven’t gained anything. So it is surprising, from this perspective, to see massive galaxies that appear to have evolved normally without any of these knobs getting twiddled.
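The statement that most of the mass sits in faint stars while most of the light comes from bright ones can be checked with a toy calculation. This is only a sketch under loudly stated assumptions: a single power-law IMF, dN/dM ∝ M^(-slope), between 0.1 and 100 solar masses with the Salpeter slope 2.35 as the "normal" case, and a crude main-sequence mass-luminosity relation L ∝ M^3.5 that overstates the light of the most massive stars. The function names and the comparison slope of 1.5 are hypothetical choices for illustration.

```python
def powerlaw_integral(exponent, lo, hi):
    """Integral of m**exponent dm from lo to hi (requires exponent != -1)."""
    p = exponent + 1.0
    return (hi ** p - lo ** p) / p


def fractions_above(m_split, slope=2.35, lum_index=3.5, m_min=0.1, m_max=100.0):
    """Fractions of total stellar mass and light in stars above m_split.

    Assumes dN/dM ~ M**(-slope) and L ~ M**lum_index (both toy assumptions).
    Masses are in solar units.
    """
    # Mass-weighted integrand: M * M**(-slope)
    mass_hi = powerlaw_integral(1.0 - slope, m_split, m_max)
    mass_all = powerlaw_integral(1.0 - slope, m_min, m_max)
    # Light-weighted integrand: M**lum_index * M**(-slope)
    light_hi = powerlaw_integral(lum_index - slope, m_split, m_max)
    light_all = powerlaw_integral(lum_index - slope, m_min, m_max)
    return mass_hi / mass_all, light_hi / light_all


if __name__ == "__main__":
    for label, slope in [("Salpeter-like", 2.35), ("top-heavy (assumed)", 1.5)]:
        mass_frac, light_frac = fractions_above(1.0, slope=slope)
        print(f"{label}: {mass_frac:.0%} of mass, {light_frac:.1%} of light above 1 Msun")
```

In this toy model the normal slope puts well under half the mass, but essentially all of the light, in stars above a solar mass. Flattening the slope shifts most of the mass into those short-lived stars too, which is why such a population should fade away rather than leave behind a normal-looking old galaxy.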

#Excess AGN were one possibility Jay Franck considered in his thesis as the explanation for what we then considered to be hyperluminous galaxies, but the known luminosity function of AGN up to z = 4 couldn’t explain the entire excess. With the clarity of hindsight, we were just seeing the same sorts of bright, early galaxies that JWST has brought into sharper focus.

I don’t yet see why the universe can’t get older the further you look back in time. Lookback time is based on the speed of light, but the apparent age . . . what really is that?

I see your point though, why muck up the waters if MOND already predicted what we now see?

I’m just an “outside” observer, a stranger to astronomy and physics (and also to the English-speaking world ;-). This perhaps explains my surprise at seeing hypotheses like MOND considered by most astronomers too fanciful to mention, while the existence of “dark stars” powered by “Dark Matter heating” seems to be taken seriously. https://arxiv.org/abs/2304.01173 The title of this article is quite strange: what does it mean to observe “a dark star candidate”? To me it looks like a conjectured ghost fed on supposed food. But once again I’m not a scientist at all. Sincerely, Jean

I heard somewhere about Einstein that he quickly realized that the people in his talks were more interested in the mysteries than in the scientific results.

Perhaps it is our love of fairy tales and mysteries that makes dark matter (and energy) so popular…

“just the right fine-tuned rate to make it look like there is no evolution at all” I am convinced there will be a law. Similar to the planets orbiting the sun. Here, too, gravity is finely tuned to the centrifugal force. The planets neither fall on the sun, nor do they disappear into the universe…

True, but those laws were discovered by firm believers. I think that without their faith in a supernatural entity ordering all things they would have lacked the required perseverance for the discovery. It did go entirely against common belief (epicycles and circles) back then.

Is there any MoND fit to the data? Does MoND predict that star formation happens earlier? At what redshift (age of the universe) do MoND effects start to matter (or when does the average density become such that most of space experiences a gravitational acceleration less than a_0)? The whole not fitting so well in clusters may make the final question somewhat pointless…

As always thanks so much for the blog.

Depends. My answer is z = 200, which is thermal decoupling. Before this time, it doesn’t matter, as the growth of structure in the baryonic gas is held in check by the radiation background. After decoupling, the entire universe finds itself in the low acceleration regime, and things start to happen. Small things like globular clusters form first, but larger objects quickly follow, so one quickly transitions from the dark ages (z > 200) where everything is highly uniform to there being lots going on already by z=50. 21 cm tomography should easily see the difference from standard structure formation, if we can ever manage to do it.

Re: “As it happens, the option of a grossly variable IMF is already disfavored by the existence of quenched galaxies at z~3 that formed a normal stellar population at much higher redshift (z~11). These galaxies are composed of stars that have the spectral signatures appropriate for a population that formed with a normal IMF and evolved as stars do. This is exactly what we expect for galaxies that form early and evolve passively. Adjusting the IMF to explain the obvious makes a mockery of Occam’s razor.”

I wonder whether this argument is not itself making a mockery of the razor. There appears to be more going for the suggestion of a top-heavy IMF than you make out. See Sneppen et al 2022 and Cameron et al 2023.

I don’t buy it. People were making the same arguments in the ’80s to explain the fact that the ionizing radiation field of low metallicity HII regions was harder than that of high-Z HII regions. Turned out that the variation was entirely explicable by the metallicity of the stars themselves: low Z stars emit harder radiation fields than high Z stars of the same mass, just because there are fewer metals in their atmospheres.

That was over 30 years ago. Since then, I’ve seen this idea come up over and over and over and it has never once panned out. Maybe this time!

As much as I also hate to admit additional parameters, from the standpoint of star formation physics I think both SFE changes and IMF weirdness are on the table here.

The IMF has been studied in the closest thing we have to the dense, high-pressure conditions in the early Universe: the super star clusters in the LMC (R136) and in the Galactic center (e.g. Arches). Those both seem to look rather top-heavy, and R136 hosts the most massive known star. Here’s my latest compilation plot of measured IMF slopes for different mass ranges, with clickable points that will take you to the ADS page.

As for SFE: metal-poor cooling physics can retard star formation, but it’s not clear that it’s actually the rate-limiter for how many stars you can form in the long run. SFE could be higher in these dense, high-pressure environments because it’s well established (at least in terms of qualitative agreement between simulations) that stellar feedback cannot work as well under such conditions. But I still see no clear reason for a “break” from the fiducial model at z~10. Cheers.

These things will always be on the table. The issue is how seriously we should take them.

When it comes to the IMF, I’m talking about the galaxy-wide, averaged IMF. There’s no doubt that individual star forming events do different things. Leo P, a galaxy with a stellar mass less than a giant molecular cloud, managed to form an O star for goodness sake. (Thanks for the link to the plot; that’s really cool.)

Nevertheless, a 1E10 solar mass galaxy is the sum over many star forming events; the central limit theorem seems to drive the net realization of all the individual star forming events to a common form. That has to be in place by z=0, or we’d see a lot more scatter in Tully-Fisher. Whether it was true way back when is a good question.

The mass-to-light ratio of present-day stellar populations traced by NIR comes from a range of stellar masses that is very different from the range responsible for the rest-frame UV being measured. One doesn’t directly follow from the other, especially because those two bits of the IMF are probably dominated by different processes.

For sure. Neither have I ever seen a compelling empirical reason to believe that the galaxy-averaged IMF varies from galaxy to galaxy. So I can believe that perhaps the UV flux of high-z galaxies can be the happenstance of temporarily elevated massive star formation at z > 10, but that doesn’t help explain the already old & still overly massive galaxies at z=4. So what are you getting at? That I was right not to put too much weight on the evolution of the luminosity density?

(sorry for the thread break above)

Was just responding to your point re: scatter in Tully-Fisher. You can mess around with what the UV looked like without breaking present-day galaxy properties too bad.

Dr. McGaugh,

You have, in the past, been asked about applying MOND techniques at different scales. I understand your reluctance to this idea in the sense that it is unprincipled in certain respects.

However, you have also stated that any comprehensive theory must accommodate MONDian successes regardless of its final form.

Wouldn’t calculation of MONDian constants at different scales simply establish benchmarks that must be satisfied? It seems to be a question of the difference between how the cosmos evolves against what the cosmos is doing.

Of course, perhaps there are only the two scales of galactic dynamics and large scale dynamics. So, one additional constraint would be fairly uninformative. And, then, there are probably a dozen other reasons not “dreamt of in my philosophy.”

I’m not sure what you have in mind. There are indeed many scales in the universe, and it is a very crude approximation to break them into “galaxies” and “large scale structure.” The formation of galaxies, as opposed to their equilibrium kinematics, is actually part of large scale structure as the term is commonly employed. (No, I don’t think we could make the terminology more confusing.) So my experience has been that MOND has more “wins” on scales where it is widely believed that CDM is presumptively superior. LCDM has wins on issues for which MOND makes no prediction at all (cosmic scales and expansion history).

Could anyone comment?

[Submitted on 20 Feb 2024]

Examining Baryonic Faber-Jackson Relation in Galaxy Groups

Pradyumna Sadhu, Yong Tian

We investigate the Baryonic Faber-Jackson Relation (BFJR), examining the correlation between baryonic mass and velocity dispersion in galaxy groups and clusters. Originally analysed in elliptical galaxies, the BFJR is derivable from the empirical Radial Acceleration Relation (RAR) and MOdified Newtonian Dynamics (MOND), both showcasing a characteristic acceleration scale g† = 1.2×10^-10 m s^-2. Recent interpretations within MOND suggest that galaxy group dynamics can be explained solely by baryonic mass, hinting at a BFJR with g† in these systems. To explore this BFJR, we combined X-ray and optical measurements for six galaxy clusters and 13 groups, calculating baryonic masses by combining X-ray gas and stellar mass estimates. Simultaneously, we computed spatially resolved velocity dispersion profiles from membership data using the biweight scale in radial bins. Our results indicate that the BFJR in galaxy groups, using total velocity dispersion, aligns with MOND predictions. Conversely, galaxy clusters exhibit a parallel BFJR with a larger acceleration scale. Analysis using tail velocity dispersion in galaxy groups shows a leftward deviation from the BFJR. Additionally, stacked velocity dispersion profiles reveal two distinct types: declining and flat, based on two parallel BFJRs. The declining profile, if not due to the anisotropy parameters or the incomplete membership, suggests a deviation from standard dark matter density profiles. We further identify three galaxy groups with unusually low dark matter fractions.

Comments: 12 pages, 4 figures, published in MNRAS. Subjects: Cosmology and Nongalactic Astrophysics (astro-ph.CO); Astrophysics of Galaxies (astro-ph.GA). Cite as: arXiv:2402.13320 [astro-ph.CO]

Yeah, that sounds right. Crudely speaking, groups measured with kinematics follow the mass-velocity relation of MOND while X-ray emitting groups & clusters parallel it – same slope but a shift in normalization. This is the origin of the residual missing baryon problem that MOND suffers in clusters – there should be no shift. In LCDM, there should also be a different slope to the relation, so this observation is also problematic, albeit easier to explain away.

Compare their Fig. 3 to Fig. 4 of my review for CJP, https://arxiv.org/abs/1404.7525. The first plot in https://tritonstation.com/2021/02/05/the-fat-one-a-test-of-structure-formation-with-the-most-massive-cluster-of-galaxies/ is a version of the same thing with the slope of the relation divided out.

thanks for answering

Would a different a0 value work for all galaxy clusters?

Perhaps. The scatter is larger in the cluster data, but that appears to mostly be a data quality issue rather than an intrinsic difference between clusters.

Do galaxy clusters also have a radial acceleration relation, Renzo’s rule, and similar relationships to those of individual galaxies?

Not really – clusters kinda parallel the RAR, but I wouldn’t say they have their own version of the relation. It’s a mess – see, e.g., https://arxiv.org/abs/2303.10175. They might follow a version of Renzo’s rule insofar as lensing maps produce potential contours that usually hug the light distribution.

Should galaxy clusters have something like the RAR, i.e., their own version of the relation, if dark matter doesn’t exist and gravity is modified from Newton?

What about primordial magnetic fields as an enhancement to SFE? Would you need to invoke MOND or Dark Matter then? Some recent work (Spontaneous magnetization of collisionless plasma, by Zhou, Kunz, Uzdensky) supports the idea that magnetic fields could predate star formation.

Magnetic fields are always a challenge in astronomy, to the point that your question is a literal trope of unanswerable post-colloquium questions: “What about magnetic fields?” Usually we hope they don’t matter, though in the case of star formation they almost certainly do. However, they are also part of the astrophysics that is gonna be the same in either dark matter or MOND, so I doubt that the issue is pertinent to distinguishing between them. The big issue is the timing of structure formation, which is different in the two cases. What comes after to form stars is just what happens… it only matters here to the extent that we have freedom in theory to make one look like the other, which is what all the discussion of SF enhancement boils down to: sure, it looks nothing like what LCDM predicted a priori, and exactly what MOND predicted a priori, but we can make LCDM look like the data if we boost the star formation.

I was wondering about missing mass in galaxy clusters under mond. Could it be as simple as self interaction of gravity being constrained to different geometries?

In a spiral galaxy we could have a planar configuration. In a cluster the gravity between galaxies would become more linearly constrained hence appear stronger than otherwise expected. Elliptical galaxies are approximately spherical so you’d get Newtonian gravity.

There are geometric effects, but we [think we] know how to calculate them. A classic result is that a flattened object like a thin disk (e.g., a spiral galaxy) rotates faster than the spherically equivalent mass distribution. However, this is a modest effect, typically of order 20%. So that’s not enough to do it, even if we hadn’t already accounted for it, which we have.

It would appear that the Cosmos takes a certain perverse pleasure in confounding the expectations of the Expanding Universe model’s believers.

Personally, I expect a simple and subtle solution.

The solution is indeed simple and subtle. All you have to do is give up the 100 year old expanding universe model and suddenly the need for DM, DE, Inflation, expanding spacetime and an inexplicable creation event evaporate like a night fog in the morning sun.

That solution is unacceptable, of course, because everybody knows the EU model is correct since it is based on Friedmann’s assumptions of mathematical convenience – a unitary, homogenous and isotropic “universe” with a “universal” metric. Those assumptions are now treated as if handed down on stone tablets by some god of mathematicism. The repeated face-plants of the model are treated as opportunities for “new physics”, a euphemism for we get to make stuff up. The assumptions are sacrosanct even as the results are nonsensical.

At the time those assumptions were first made the nature and scale of the Cosmos was completely unknown. Fast forward 100 years and despite an exponential growth in our cosmological knowledge all of that new knowledge has been force-fit to the EU model despite the fact that the model’s assumptions don’t make physical sense in light of our current knowledge.

It is no disgrace that the EU model exists but its unquestioned acceptance as the only allowable scientific model of the Cosmos within the academic community surely is.

It would seem that the only thing distinguishing the dark matter hypothesis from some modified gravity theory is that dark matter might interact with other non-gravitational fields:

https://arxiv.org/abs/2402.11716v1

The authors write in the conclusion:

LikeLike

The whole point of particle dark matter (WIMPs, axions, et cetera) is that particle physicists might be able to directly detect it non-gravitationally through whatever experiments they come up with. The idea of cold dark matter as modified gravity directly threatens the livelihoods of particle physicists because their experiments would be a complete waste of time, money, and effort, and funding agencies would refuse to fund particle physics any more. This comes at a time when the future of their field is already under threat because nothing has been discovered at the LHC except for the Higgs.

By Occam’s razor, the debate between cold dark matter vs MOND becomes a debate between Deffayet-Woodard gravity vs MOND in the realm of modified gravity, since particle dark matter theories are more complex than simply inserting the CDM stress tensor into gravity as a separate component.

Particle physicists would certainly prefer that their field not be moribund.

Reminds me of one of the best physicists of the last century:

“The job of theorists, especially in …, is to suggest new experiments. A good theory makes not only predictions, but surprising predictions that then turn out to be true. If its predictions appear obvious to experimentalists, why would they need a theory?” (Francis Crick)

And yes, he was bored by hydrodynamics and looked for another field of activity after the war – as we all know.

This is completely overblown. Particle physicists will still have all their neutrino experiments to fund, especially because they have beyond-Standard-Model physics to explain: neutrino oscillations should not occur in the Standard Model.

It may actually be better that funding be redirected to neutrino experiments, so that particle physicists may sooner discover whether neutrinos are Dirac or Majorana, or whether neutrinos are sterile or not, and so on, and finally extend the standard model with beyond standard model physics to explain neutrino oscillations.

Neutrinos are a more promising direction for particle physicists than dark matter because there is no evidence dark matter should interact with anything except via gravity. Thus, there should be no expectation that any dark matter detection experiments reveal anything.

At a quick glance, what I take from this is that we need a nonlocal theory. That is a hard realization, but having had to face it as a possibility a long time ago, it does not surprise me now.

A widespread attitude (in general; not of these particular authors) is that cold dark matter, as conventionally conceived, must inevitably explain the observed MOND phenomenology. This is just silly, but the idea is so deeply embedded at this point that people are incapable of letting themselves realize it.

That’s not necessarily the case. The authors write in the introduction

LikeLike

The sentences immediately preceding the above quote are the following:

LikeLike

Sure, but that would very much not be traditional cold dark matter.

What I am saying is that it is practically impossible for traditional cold dark matter to do what it has to do. Where we go from there is the hard question.

This here is a classic example of a false dilemma:

https://physicsworld.com/a/dark-matter-vs-modified-gravity-which-team-are-you-on/

The false dilemma lies in framing the question as dark matter vs modified gravity, making MOND the poster child of modified gravity and particle dark matter the poster child of dark matter, and presenting these two as the only possible options on the table.

With Deffayet’s and Woodard’s new theory of gravity, all substantive content in the dark matter vs MOND debate can be rephrased as a debate between two different modified gravity theories, while the actual dark matter vs modified gravity debate becomes whether one believes that dark matter interacts non-gravitationally or not.

There is absolutely no reason for Indranil Banik to support particle dark matter anymore, even after rejecting MOND. It is perfectly consistent to hold the position that

1. MOND is wrong

2. The astrophysical and cosmological predictions of CDM, and thus those of Deffayet’s and Woodard’s theory of gravity, are right

3. Dark matter is not a particle, and stuff like CDM, WDM, SIDM, superfluid DM, etc are all wrong. Instead, the stuff attributed to dark matter is really just modified gravity.

Disagree. You’ll also get the other hybrid position of supporting modified gravity for cosmological observations and some sort of interacting dark matter to explain galaxy dynamics, compared to the current hybrid position of using MOND for galaxy dynamics and CDM for cosmological observations because MOND has issues at large scales.

Then you have a 4 way battle between

LikeLike

In the micro-world (quantum mechanics), non-locality means that two entangled photons communicate at faster-than-light speed when their polarization is measured (or better, when they coordinate and determine their polarization).

Is anyone able to explain the meaning of non-locality in astronomy in simple terms?

see also the scene starting at 1:10 from “Margin Call”

spooky action at an astronomical distance

Kurt Schmidt, Madeleine Birchfield, Stacy McGaugh

Do any of you have a better, more comprehensive answer?

Who is interacting non-locally here?

And why does it help?

Nonlocality here means that the force a particle experiences may depend on its trajectory, not just its instantaneous position. This property is apparently necessary to conserve momentum in modified inertia theories, e.g., https://ui.adsabs.harvard.edu/abs/1994AnPhy.229..384M/abstract & https://ui.adsabs.harvard.edu/abs/2022PhRvD.106f4060M/abstract
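
As a purely illustrative toy (it is not Milgrom's modified inertia, and every name and parameter in it is invented for the example), the sketch below contrasts a local force, which depends only on where the particle is now, with a force that depends on an exponentially weighted average of where it has been. Two particles that end up at the same position but arrived by different paths feel the same local force and different history-dependent forces.

```python
import math

def local_force(position, k=1.0):
    """Ordinary (local) restoring force: depends only on the current position."""
    return -k * position

def memory_force(positions, dt, tau=1.0, k=1.0):
    """Toy trajectory-dependent force (hypothetical, for illustration only).

    positions: sampled past positions, oldest first, positions[-1] is 'now'.
    Older samples are down-weighted by exp(-age / tau), so the force responds
    to a weighted average of the path rather than to the present position.
    """
    n = len(positions)
    weighted_sum = 0.0
    weight_total = 0.0
    for i, x in enumerate(positions):
        age = (n - 1 - i) * dt
        w = math.exp(-age / tau)
        weighted_sum += w * x
        weight_total += w
    return -k * weighted_sum / weight_total

dt = 0.1
# Particle A has been sitting at x = 1 the whole time.
path_a = [1.0] * 50
# Particle B drifted in from larger x and has only just arrived at x = 1.
path_b = [1.0 + 0.05 * (49 - i) for i in range(50)]

if __name__ == "__main__":
    print("local :", local_force(path_a[-1]), local_force(path_b[-1]))
    print("memory:", memory_force(path_a, dt), memory_force(path_b, dt))
```

The only point of the toy is that once the force carries a memory of the path, knowing the instantaneous position is no longer enough to predict it.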

Check out the walking droplet experiments, which behave roughly according to de Broglie’s original pilot wave theory. They are able to demonstrate interference, entanglement, etc:

https://cfsm.njit.edu/walking_droplets.php

It seems the “hidden” assumption is Markovian dynamics. If that doesn’t hold and particles can be influenced by past behavior (i.e., there is a “memory”), then we no longer need any quantum weirdness. Just non-Markovian dynamics.

Is this the same as adding an imaginary time component to the laws of motion?

Think about some boats navigating a lake. The force on each boat is influenced by the previous motion (the wake) of both itself and all the other boats.

https://en.wikipedia.org/wiki/Wake_(physics)

I’m sure it is possible to ignore/deny the existence of the wakes then model the forces on the boats with imaginary time or dark matter or whatever exotic mathematical concept.

But instead you can just accept that these wakes exist.

Here is another research page on this phenomenon: https://thales.mit.edu/bush/index.php/4801-2/

The funny thing is that Stacy’s blind spot is apparently hydrodynamics, which is why he has missed it so far. But these are macroscopic experimental results backed by theory (indeed, the original explanation for QM) rather than just theorizing.

Imaginary time is a simple extension that finds application in quantum mechanics, relativity, cosmology and statistical mechanics.

The beauty of extending time to the complex plane is that it permits a description of the complementary nature of the universe at a very fundamental level.

Is there an emoji for blind[spot] eye-rolling?

@abo

Looking at these links, this looks like condensed matter physics.

One thing I have learned slogging around and trying to make sense of the various claims on physics blogs is that it is important to remember that particle physics as fundamental is distinct from condensed matter physics and quantum computing. The mathematics is the mathematics: it can be applied in a multitude of fields. Economists hijacked some of it in the early 20th century and it is currently seeing application in biophysics, if opinions on “artificial life” are considered. But, in large part, this is because linear algebra with respect to the separation of convex hulls is useful across mathematical applications. It appears early in physics because of the need to describe motion simultaneously in multiple dimensions. The same is true of “duality” because linear algebra is essentially the same as projective geometry.

When reasoning with respect to these applications, the various interpretations of quantum phenomena will be invoked.

The problem is that there appears to be no means of deciding between the interpretations with respect to the foundational theories.

Thank you very much. I will think about it.

of interest

arXiv:2402.19459

Anomalous contribution to galactic rotation curves due to stochastic spacetime

Jonathan Oppenheim, Andrea Russo

Subjects: General Relativity and Quantum Cosmology (gr-qc); Astrophysics of Galaxies (astro-ph.GA); High Energy Physics – Theory (hep-th)

We consider a proposed alternative to quantum gravity, in which the spacetime metric is treated as classical, even while matter fields remain quantum. Consistency of the theory necessarily requires that the metric evolve stochastically. Here, we show that this stochastic behaviour leads to a modification of general relativity at low accelerations. In the low acceleration regime, the variance in the acceleration produced by the gravitational field is high in comparison to that produced by the Newtonian potential, and acts as an entropic force, causing a deviation from Einstein’s theory of general relativity. We show that in this “diffusion regime”, the entropic force acts from a gravitational point of view, as if it were a contribution to the matter distribution. We compute how this modifies the expectation value of the metric via the path integral formalism, and find that an entropic force driven by a stochastic cosmological constant can explain galactic rotation curves without needing to evoke dark matter. We caution that a greater understanding of this effect is needed before conclusions can be drawn, most likely through numerical simulations, and provide a template for computing the deviation from general relativity which serves as an experimental signature of the Brownian motion of spacetime.

The idea in this paper sounds great IMO. Funny thought: modifying the GR of Einstein, he of “God does not play dice”, by making it inherently stochastic. Although I believe God does not play dice, stochastics is useful in many ways. Dice rolls are deterministic if you completely know the system, yet stochastics gives a nicer framework for analysis because of its simplicity.

How does it compare with other gravity-based theoretical approaches to MOND, like Deur’s?

Why would it all work differently on what we designated micro- and macroscopic scales? Because we are in the middle and we are somehow important? That ‘universe only exists because conscious agents perceive it’ nonsense? But cheers to whoever came up with that, it was a good chuckle.

The universe is a bowl of unimaginably tasty minestrone. And one cannot stir it, or take a spoonful, or even a single bean, out of it without the whole knowing about one’s action. The above people would probably add ‘and hate you for it’.

But all that is of course way too complicated for a mildly sentient ape. We need answers and we need them now, goddammit! The fact that there are problems that are more important and solvable and in the field is obviously SEP.

If you were the universe, would you go about it like that? First setting up some rules for your initial stage: subatomic particles and the forces governing them, then atoms and baryogenesis and another force, and then on to the grand design of stars and stuff? Never mind, of course you would. That’s how we operate, isn’t it? Messy af.

Taking the current cosmological model at face value, the initial state was near zero entropy. Or in other words, it had near zero memory capacity. How does one encode the SM of particle physics onto something that has at most just a few bytes? Unless of course you think that ‘rules’, whatever they may be, are something that’s outside the universe. Like god?

Then again, it all might be just somebody messing around with level design in pre-alpha stage in which case, all bets are off.

P.S: Another unimportant thing. I strongly dislike this new editor.
