A common refrain I hear is that MOND works well in galaxies, but not in clusters of galaxies. The oft-unspoken but absolutely intended implication is that we can therefore dismiss MOND and never speak of it again. That’s silly.

Even if MOND is wrong, that it works as well as it does is surely telling us something. I would like to know why that is. Perhaps it has something to do with the nature of dark matter, but we need to engage with it to make sense of it. We will never make progress if we ignore it.

Like the seventeenth-century cleric Paul Gerhardt, I’m a stickler for intellectual honesty:

“When a man lies, he murders some part of the world.”

Paul Gerhardt

I would extend this to ignoring facts. One should not only be truthful, but also as complete as possible. It does not suffice to be truthful about things that support a particular position while eliding unpleasant or unpopular facts* that point in another direction. By ignoring the successes of MOND, we murder a part of the world.

Clusters of galaxies are problematic in different ways for different paradigms. Here I’ll recap three ways in which they point in different directions.

1. Cluster baryon fractions

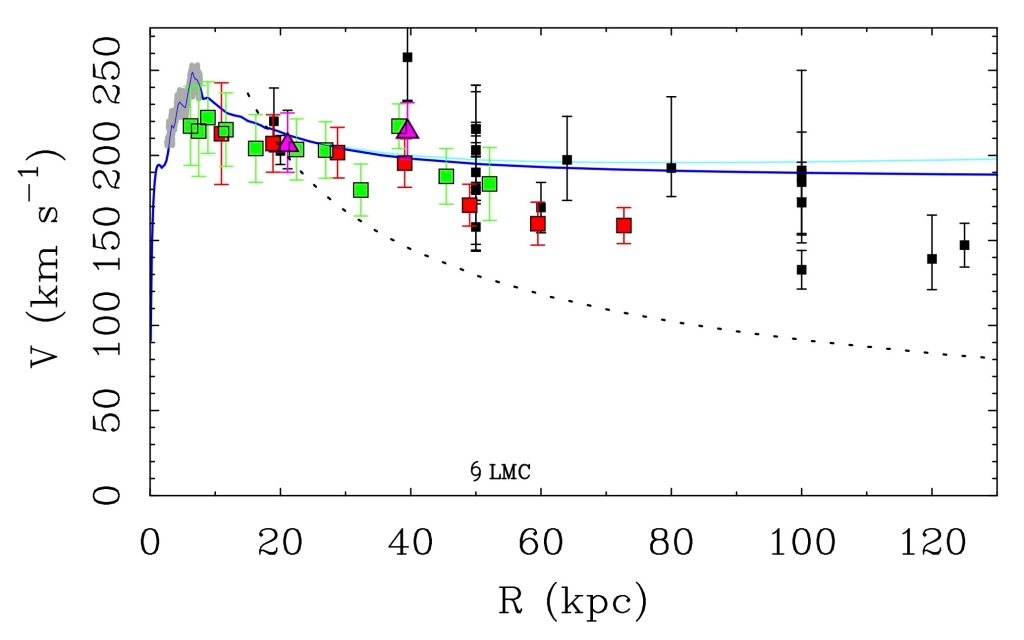

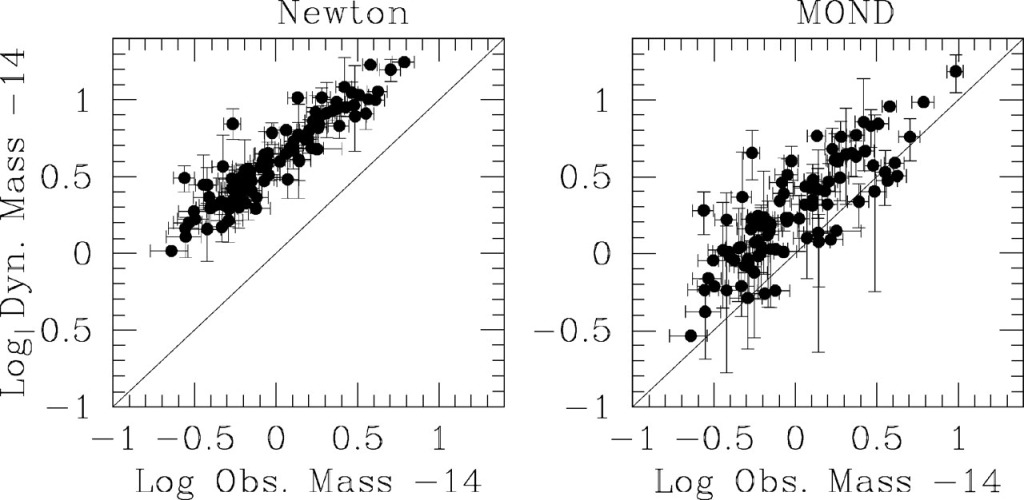

An unpleasant fact for MOND is that it does not suffice to explain the mass discrepancy in clusters of galaxies. When we apply Milgrom’s formula to galaxies, it explains the discrepancy that is conventionally attributed to dark matter. When we apply MOND to clusters, it comes up short. This has been known for a long time; here is a figure from the review by Sanders & McGaugh (2002):

The Newtonian dynamical mass exceeds what is seen in baryons (left panel). There is a missing mass problem in clusters. The inference is that the difference is made up by dark matter – presumably the same non-baryonic cold dark matter that we need in cosmology.

When we apply MOND, the data do not fall on the line of equality as they should (right panel). There is still excess mass. MOND suffers a missing baryon problem in clusters.
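
To make the comparison between the two panels concrete, here is a minimal sketch of how a Newtonian dynamical mass maps onto a MOND dynamical mass. It is illustrative only. The "simple" interpolation function mu(x) = x / (1 + x), the value a0 = 1.2e-10 m/s², and the cluster mass and radius are assumptions chosen for the example; they are not taken from the figure or from the review.

```python
# Illustrative sketch: convert a Newtonian dynamical mass into a MOND dynamical mass.
# Assumptions (not from the post): the "simple" interpolation function
# mu(x) = x / (1 + x), a0 = 1.2e-10 m/s^2, and made-up cluster numbers.

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10       # Milgrom's acceleration constant, m s^-2 (commonly quoted value)
MSUN = 1.989e30    # solar mass, kg
MPC = 3.0857e22    # megaparsec, m


def mu_simple(x: float) -> float:
    """Simple interpolation function: tends to x for x << 1 and to 1 for x >> 1."""
    return x / (1.0 + x)


def mond_mass(newtonian_mass_msun: float, radius_mpc: float) -> float:
    """MOND dynamical mass (solar masses) enclosed within radius_mpc.

    The Newtonian mass encodes the measured acceleration g = G M_N / r^2.
    Milgrom's formula, mu(g / a0) * g = G M_MOND / r^2, then gives
    M_MOND = mu(g / a0) * M_N.
    """
    g = G * newtonian_mass_msun * MSUN / (radius_mpc * MPC) ** 2
    return mu_simple(g / A0) * newtonian_mass_msun


if __name__ == "__main__":
    m_newton = 5e14   # hypothetical Newtonian dynamical mass, Msun
    radius = 1.0      # hypothetical radius, Mpc
    m_mond = mond_mass(m_newton, radius)
    print(f"Newtonian: {m_newton:.2e} Msun, MOND: {m_mond:.2e} Msun, "
          f"ratio: {m_mond / m_newton:.2f}")
```

With these made-up inputs the MOND mass comes out at a bit more than a third of the Newtonian mass. The cluster problem is that even after a reduction of this kind, the required mass still exceeds the baryons we can see.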

The common line of reasoning is that MOND still needs dark matter in clusters, so why consider it further? The whole point of MOND is to do away with the need of dark matter, so it is terrible if we need both! Why not just have dark matter?

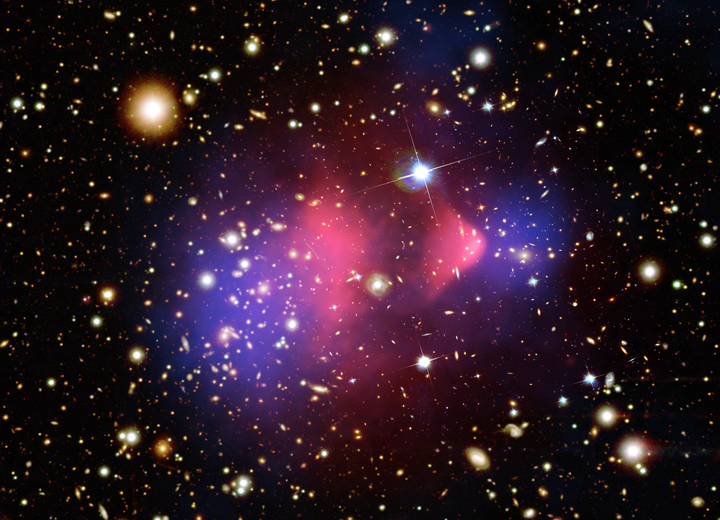

This attitude was reinforced by the discovery of the Bullet Cluster. You can “see” the dark matter.

Of course, we can’t really see the dark matter. What we see is that the mass required by gravitational lensing observations exceeds what we see in normal matter: this is the same discrepancy that Zwicky first noticed in the 1930s. The important thing about the Bullet Cluster is that the mass is associated with the location of the galaxies, not with the gas.

The baryons that we know about in clusters are mostly in the gas, which outweighs the stars by roughly an order of magnitude. So we might expect, in a modified gravity theory like MOND, that the lensing signal would peak up on the gas, not the stars. That would be true, if the gas we see were indeed the majority of the baryons. We already knew from the first plot above that this is not the case.

I use the term missing baryons above intentionally. If one already believes in dark matter, then it is perfectly reasonable to infer that the unseen mass in clusters is the non-baryonic cold dark matter. But there is nothing about the data for clusters that requires this. There is also no reason to expect every baryon to be detected. So the unseen mass in clusters could just be ordinary matter that does not happen to be in a form we can readily detect.

I do not like the missing baryon hypothesis for clusters in MOND. I struggle to imagine how we could hide the required amount of baryonic mass, which is comparable to or exceeds the gas mass. But we know from the first figure that such a component is indicated. Indeed, the Bullet Cluster falls at the top end of the plots above, being one of the most massive objects known. From that perspective, it is perfectly ordinary: it shows the same discrepancy every other cluster shows. So the discovery of the Bullet was neither here nor there to me; it was just another example of the same problem. Indeed, it would have been weird if it hadn’t shown the same discrepancy that every other cluster showed. That it does so in a nifty visual is, well, nifty, but so what? I’m more concerned that the entire population of clusters shows a discrepancy than that this one nifty case does so.

The one new thing that the Bullet Cluster did teach us is that whatever the missing mass is, it is collisionless. The gas shocked when it collided, and lags behind the galaxies. Whatever the unseen mass is, it passed through unscathed, just like the galaxies. Anything with mass separated by lots of space will do that: stars, galaxies, cold dark matter particles, hard-to-see baryonic objects like brown dwarfs or black holes, or even massive [potentially sterile] neutrinos. All of those are logical possibilities, though none of them make a heck of a lot of sense.

As much as I dislike the possibility of unseen baryons, it is important to keep the history of the subject in mind. When Zwicky discovered the need for dark matter in clusters, the discrepancy was huge: a factor of a thousand. Some of that was due to having the distance scale wrong, but most of it was due to seeing only stars. It wasn’t until 40 some years later that we started to recognize that there was intracluster gas, and that it outweighed the stars. So for a long time, the mass ratio of dark to luminous mass was around 70:1 (using a modern distance scale), and we didn’t worry much about the absurd size of this number; mostly we just cited it as evidence that there had to be something massive and non-baryonic out there.

Really there were two missing mass problems in clusters: a baryonic missing mass problem, and a dynamical missing mass problem. Most of the baryons turned out to be in the form of intracluster gas, not stars. So the 70:1 ratio changed to 7:1. That’s a big change! It brings the ratio down from a silly number to something that is temptingly close to the universal baryon fraction of cosmology. Consequently, it becomes reasonable to believe that clusters are fair samples of the universe. All the baryons have been detected, and the remaining discrepancy is entirely due to non-baryonic cold dark matter.
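
The arithmetic behind that shift is simple enough to spell out. This sketch converts a dark-to-luminous ratio of R:1 into a baryon fraction, 1 / (1 + R). The 70:1 and 7:1 ratios are the ones quoted above; the cosmic baryon fraction of about 0.16 is an assumed round number for comparison, not a value taken from this post.

```python
# Illustrative arithmetic: dark-to-luminous mass ratio -> baryon fraction.
# The 70:1 and 7:1 ratios come from the text; the cosmic baryon fraction
# of ~0.16 is an assumed round number used only for comparison.

COSMIC_BARYON_FRACTION = 0.16  # assumed approximate value of Omega_b / Omega_m


def baryon_fraction(dark_to_luminous: float) -> float:
    """Fraction of the total mass that is luminous, for a dark:luminous ratio of R:1."""
    return 1.0 / (1.0 + dark_to_luminous)


for label, ratio in [("stars only", 70.0), ("stars + gas", 7.0)]:
    f_b = baryon_fraction(ratio)
    print(f"{label:12s} {ratio:4.0f}:1 -> f_b = {f_b:.3f} "
          f"({f_b / COSMIC_BARYON_FRACTION:.0%} of the assumed cosmic value)")
```

Counting only stars leaves the cluster baryon fraction more than an order of magnitude short of the assumed cosmic value; including the gas brings it to within a few tens of percent.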

That’s a relatively recent realization. For decades, we didn’t recognize that most of the normal matter in clusters was in an as-yet unseen form. There had been two distinct missing mass problems. Could it happen again? Have we really detected all the baryons, or are there still more lurking there to be discovered? I think it unlikely, but fifty years ago I would also have thought it unlikely that there would have been more mass in intracluster gas than in stars in galaxies. I was ten years old then, but it is clear from the literature that no one else was seriously worried about this at the time. Heck, when I first read Milgrom’s original paper on clusters, I thought he was engaging in wishful thinking to invoke the X-ray gas as possibly containing a lot of the mass. Turns out he was right; it just isn’t quite enough.

All that said, I nevertheless think the residual missing baryon problem MOND suffers in clusters is a serious one. I do not see a reasonable solution. Unfortunately, as I’ve discussed before, LCDM suffers an analogous missing baryon problem in galaxies, so pick your poison.

It is reasonable to imagine in LCDM that some of the missing baryons on galaxy scales are present in the form of warm/hot circum-galactic gas. We’ve been looking for that for a while, and have had some success – at least for bright galaxies where the discrepancy is modest. But the problem gets progressively worse for lower mass galaxies, so it is a bold presumption that the check-sum will work out. There is no indication (beyond faith) that it will, and the fact that it gets progressively worse for lower masses is a direct consequence of the data for galaxies looking like MOND rather than LCDM.

Consequently, both paradigms suffer a residual missing baryon problem. One is seen as fatal while the other is barely seen.

2. Cluster collision speeds

A novel thing the Bullet Cluster provides is a way to estimate the speed at which its subclusters collided. You can see the shock front in the X-ray gas in the picture above. The morphology of this feature is sensitive to the speed and other details of the collision. In order to reproduce it, the two subclusters had to collide head-on, in the plane of the sky (practically all the motion is transverse), and fast. I mean, really fast: nominally 4700 km/s. That is more than the virial speed of either cluster, and more than you would expect from dropping one object onto the other. How likely is this to happen?

There is now an enormous literature on this subject, which I won’t attempt to review. It was recognized early on that the high apparent collision speed was unlikely in LCDM. The chances of observing the Bullet Cluster even once in an LCDM universe range from merely unlikely (~10%) to completely absurd (< 3 × 10⁻⁹). Answers this varied follow from which aspects of both observation and theory are considered, and from the annoying fact that the distribution of collision speed probabilities plummets like a stone, so that slightly different estimates of the “true” collision speed make a big difference to the inferred probability. The “true” gravitationally induced collision speed is itself somewhat uncertain because the hydrodynamics of the gas plays a role in shaping the shock morphology. There is a long debate about this which bores me; it boils down to it being easy to explain a few hundred extra km/s but hard to get up to the extra 1000 km/s that is needed.

At its simplest, we can imagine the two subclusters forming in the early universe, initially expanding apart along with the Hubble flow like everything else. At some point, their mutual attraction overcomes the expansion, and the two start to fall together. How fast can they get going in the time allotted?
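
For a sense of scale on the conventional side, here is a rough Newtonian estimate of what "dropping one object onto the other" can deliver. It treats the subclusters as two point masses falling from rest and uses energy conservation, v² = 2GM(1/r − 1/r_max), where M is the total mass. This is not the Angus & McGaugh calculation: it ignores the expansion history, the finite age of the universe, and the extended mass distributions, and the mass and separations below are hypothetical round numbers rather than measured Bullet Cluster values.

```python
import math

# Rough Newtonian sketch: relative speed of two point masses falling together.
# Assumptions: point masses, released from rest at the turnaround separation,
# no cosmic expansion, no time limit. All input numbers are hypothetical.

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
MSUN = 1.989e30    # solar mass, kg
MPC = 3.0857e22    # megaparsec, m


def infall_speed_kms(total_mass_msun: float,
                     separation_mpc: float,
                     turnaround_mpc: float = math.inf) -> float:
    """Relative speed (km/s) at separation_mpc after falling from rest at turnaround_mpc.

    Energy conservation for the relative orbit gives
    v^2 = 2 G M (1/r - 1/r_max), with M the total mass of the pair.
    """
    if separation_mpc <= 0 or separation_mpc > turnaround_mpc:
        raise ValueError("need 0 < separation_mpc <= turnaround_mpc")
    inv_r = 1.0 / (separation_mpc * MPC)
    inv_rmax = 0.0 if math.isinf(turnaround_mpc) else 1.0 / (turnaround_mpc * MPC)
    v_squared = 2.0 * G * total_mass_msun * MSUN * (inv_r - inv_rmax)
    return math.sqrt(v_squared) / 1e3


if __name__ == "__main__":
    total_mass = 1.5e15   # hypothetical total mass of the pair, Msun
    separation = 1.0      # hypothetical separation at collision, Mpc
    for r_max in (math.inf, 4.0):   # fall from infinity, or from a 4 Mpc turnaround
        v = infall_speed_kms(total_mass, separation, r_max)
        print(f"turnaround = {r_max} Mpc -> v = {v:.0f} km/s")
```

Falling from infinity is the most generous case, and a finite turnaround radius only lowers the speed. With these hypothetical inputs even the generous case stays well short of the nominal 4700 km/s.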

The Bullet Cluster is one of the most massive systems in the universe, so there is lots of dark mass to accelerate the subclusters towards each other. The object is less massive in MOND, even spotting it some unseen baryons, but the long-range force is stronger. Which effect wins?

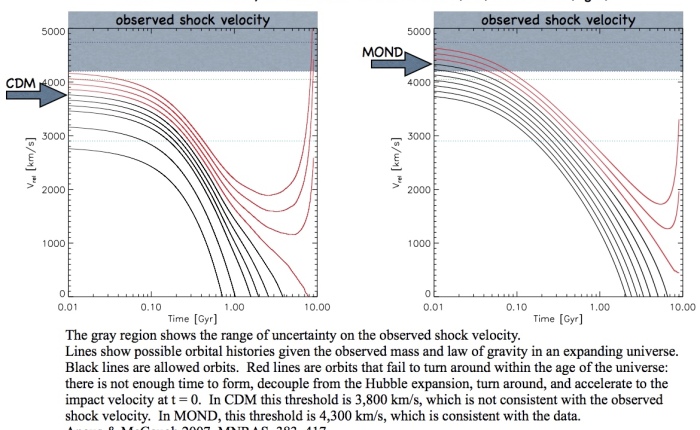

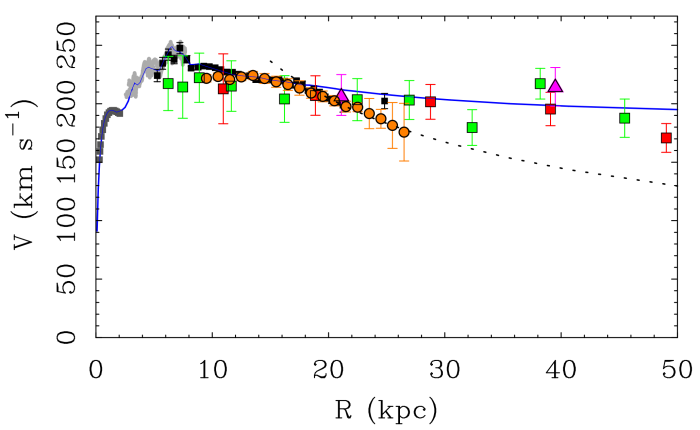

Gary Angus wrote a code to address this simple question both conventionally and in MOND. Turns out, the longer range force wins this race. MOND is good at making things go fast. While the collision speed of the Bullet Cluster is problematic for LCDM, it is rather natural in MOND. Here is a comparison:

A reasonable answer falls out of MOND with no fuss and no muss. There is room for some hydrodynamical+ high jinks, but it isn’t needed, and the amount that is reasonable makes an already reasonable result more reasonable, boosting the collision speed from the edge of the observed band to pretty much smack in the middle. This is the sort of thing that keeps me puzzled: much as I’d like to go with the flow and just accept that it has to be dark matter that’s correct, it seems like every time there is a big surprise in LCDM, MOND just does it. Why? This must be telling us something.

3. Cluster formation times

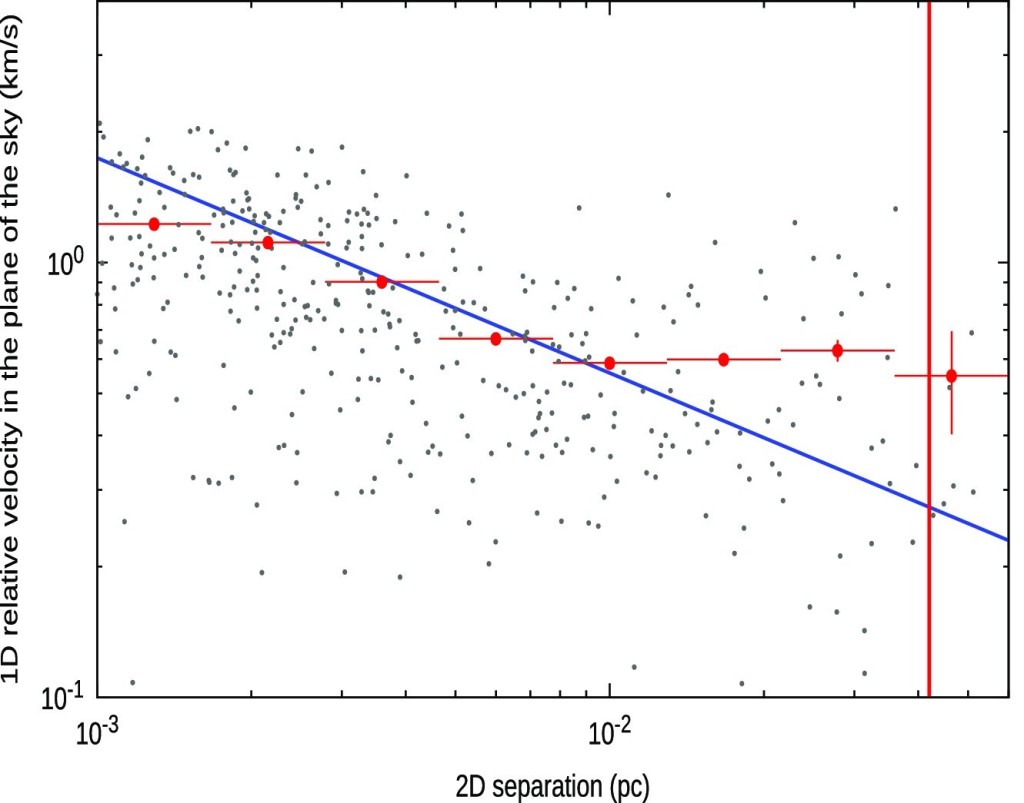

Structure is predicted to form earlier in MOND than in LCDM. This is true for both galaxies and clusters of galaxies. In his thesis, Jay Franck found lots of candidate clusters at redshifts higher than expected. Even groups of clusters:

The cluster candidates at high redshift that Jay found are more common in the real universe than seen with mock observations made using the same techniques within the Millennium simulation. Their velocity dispersions are also larger than comparable simulated objects. This implies that the amount of mass that has assembled is larger than expected at that time in LCDM, or that speeds are boosted by something like MOND, or nothing has settled into anything like equilibrium yet. The last option seems most likely to me, but that doesn’t reconcile matters with LCDM, as we don’t see the same effect in the simulation.

MOND also predicts the early emergence of the cosmic web, which would explain the early appearance of very extended structures like the “big ring.” While some of these very large scale structures are probably not real, there seem to be too many such things being noted for all of them to be an illusion. The knee-jerk denials of all such structures remind me of the shock cosmologists expressed at seeing quasars at redshifts as high as 4 (even 4.9! how can it be so?) or clusters at redshift 2, or the original CfA stickman, which surprised the bejeepers out of everybody in 1987. So many times I’ve been told that a thing can’t be true because it violates theoreticians’ preconceptions, only for it to prove to be true, and ultimately to be something the theorists expected all along.

Well, which is it?

So, as the title says, clusters ruin everything. The residual missing baryon problem that MOND suffers in clusters is both pernicious and persistent. It isn’t the outright falsification that many people presume it to be, but it sure don’t sit right. On the other hand, both the collision speeds of clusters (there are more examples now than just the Bullet Cluster) and the early appearance of clusters at high redshift are considerably more natural in MOND than in LCDM. So the data for clusters cut both ways. Taking the most obvious interpretation of the Bullet Cluster data, this one object falsifies both LCDM and MOND.

As always, the conclusion one draws depends on how one weighs the different lines of evidence. This is always an invitation to the bane of cognitive dissonance, accepting that which supports our pre-existing world view and rejecting the validity of evidence that calls it into question. That’s why we have the scientific method. It was application of the scientific method that caused me to change my mind: maybe I was wrong to be so sure of the existence of cold dark matter? Maybe I’m wrong now to take MOND seriously? That’s why I’ve set criteria by which I would change my mind. What are yours?

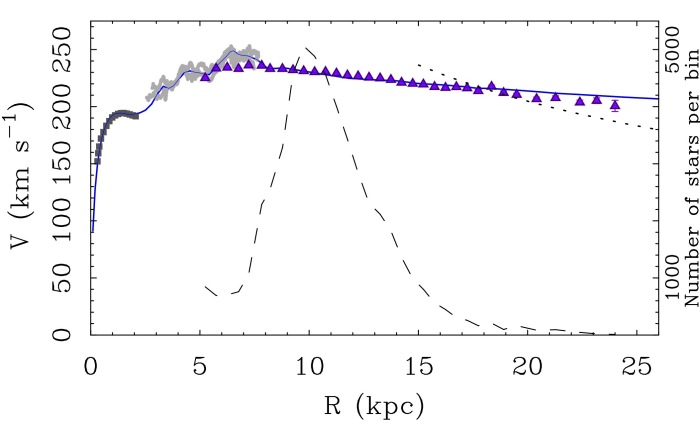

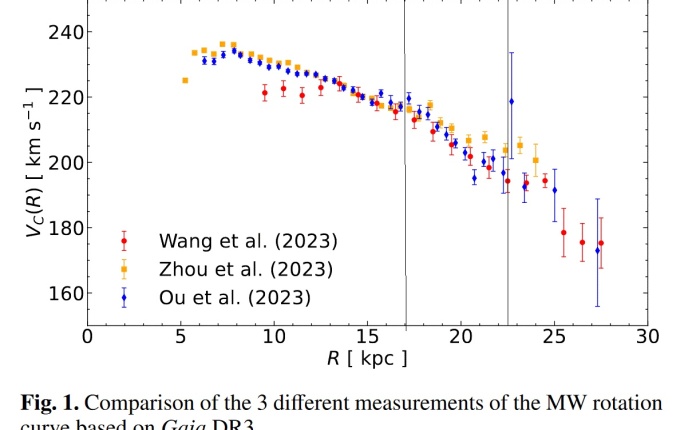

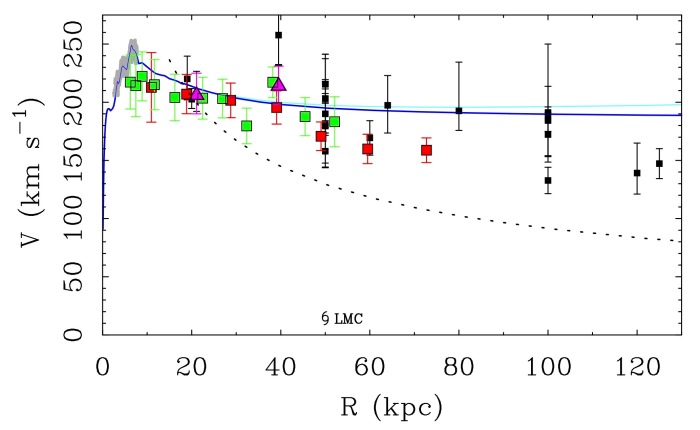

*In the discussion associated with a debate held at KITP in 2018, one particle physicist said “We should just stop talking about rotation curves.” Straight-up said it out loud! No notes, no irony, no recognition that the dark matter paradigm faces problems beyond rotation curves.

+There are now multiple examples of colliding cluster systems known. They’re a mess (Abell 520 is also called “the train wreck cluster”), so I won’t attempt to describe them all. In Angus & McGaugh (2008) we did note that MOND predicted that high collision speeds would be more frequent than in LCDM, and I have seen nothing to make me doubt that. Indeed, Xavier Hernandez pointed out to me that supersonic shocks like that of the Bullet Cluster are often observed, but basically never occur in cosmological simulations.